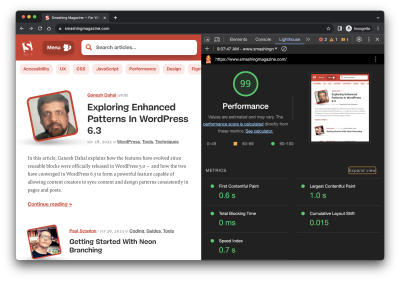

Running a performance check on your website isn’t terribly difficult. It may even be something you do regularly with Lighthouse in Chrome DevTools, where testing is freely available and produces a very attractive-looking report.

Lighthouse is only one performance auditing tool out of many. The convenience of having it tucked into Chrome DevTools is what makes it an easy go-to for many developers.

But do you know how Lighthouse calculates performance metrics like First Contentful Paint (FCP), Total Blocking Time (TBT), and Cumulative Layout Shift (CLS)? There’s a handy calculator linked up in the report summary that lets you adjust performance values to see how they impact the overall score. Still, there’s nothing in there to tell us about the data Lighthouse is using to evaluate metrics. The linked-up explainer provides more details, from how scores are weighted to why scores may fluctuate between test runs.
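
To make the weighting idea concrete, here is a minimal sketch of a weighted average over per-metric scores. It assumes the weights published for Lighthouse 10 (other versions weight metrics differently), and it takes each metric’s 0 to 1 score as an input rather than deriving it from raw timings, which Lighthouse does with its own scoring curves. Speed Index (SI) and Largest Contentful Paint (LCP) appear here only because they are part of that weighting; the sample scores are made up.

```python
# Weights as published for Lighthouse 10; treat them as an assumption
# and check the scoring docs for the version you actually run.
LIGHTHOUSE_10_WEIGHTS = {
    "FCP": 0.10,  # First Contentful Paint
    "SI": 0.10,   # Speed Index
    "LCP": 0.25,  # Largest Contentful Paint
    "TBT": 0.30,  # Total Blocking Time
    "CLS": 0.25,  # Cumulative Layout Shift
}


def performance_score(metric_scores: dict) -> int:
    """Combine per-metric scores (each 0.0 to 1.0) into a 0-100 score."""
    total = sum(
        weight * metric_scores[name]
        for name, weight in LIGHTHOUSE_10_WEIGHTS.items()
    )
    return round(total * 100)


# Hypothetical per-metric scores for one test run.
example_scores = {"FCP": 0.95, "SI": 0.90, "LCP": 0.70, "TBT": 0.80, "CLS": 1.00}
print(performance_score(example_scores))
```

Because TBT and LCP carry more than half of the weight between them, a small change in either one moves the overall score far more than the same change in FCP.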

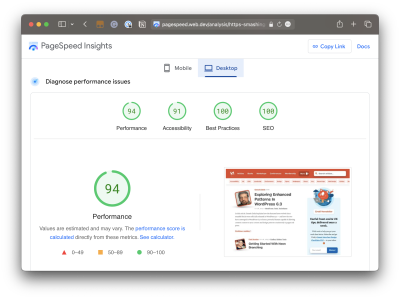

Why do we need Lighthouse at all when Google also offers similar reports in PageSpeed Insights (PSI)? The truth is that the two tools were quite distinct until PSI was updated in 2018 to use Lighthouse reporting.

Did you notice that the Performance score in Lighthouse is different from that PSI screenshot? How can one report result in a near-perfect score while the other appears to find more reasons to lower the score? Shouldn’t they be the same if both reports rely on the same underlying tooling to generate scores?

That’s what this article is about. Different tools make different assumptions using different data, whether we’re talking about Lighthouse, PageSpeed Insights, or commercial services like DebugBear. That’s what accounts for different results. But there are more specific reasons for the divergence.

Let’s dig into those by answering a set of common questions that pop up during performance audits.

What Does It Mean When PageSpeed Insights Says It Uses “Real-User Experience Data”?

This is a great question because it provides a lot of context for why it’s possible to get varying results from different performance auditing tools. In fact, when we say “real user data,” we’re really referring to two different types of data. And when discussing the two types of data, we’re actually talking about what is called real-user monitoring, or RUM for short.

Type 1: Chrome User Experience Report (CrUX)

What PSI means by “real-user experience data” is that it evaluates the performance data used to measure the core web vitals from your tests against the core web vitals data of actual real-life users. That real-life data is pulled from the Chrome User Experience (CrUX) report, a set of anonymized data collected from Chrome users, or at least those who have consented to share data.

CrUX data is important because it is how core web vitals are measured, which, in turn, are a ranking factor for Google’s search results. Google focuses on the 75th percentile of users in the CrUX data when reporting core web vitals metrics. This way, the data represents a vast majority of users while minimizing the possibility of outlier experiences.
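
As a rough illustration of why the 75th percentile is used, here is a small sketch that computes a nearest-rank percentile over a handful of made-up LCP samples. CrUX itself works from aggregated data, so this is only meant to show the idea, and the `percentile` helper and sample values are hypothetical.

```python
import math


def percentile(samples: list, pct: float) -> float:
    """Nearest-rank percentile: the smallest sample that has at least
    pct percent of all samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical LCP samples in milliseconds from eight page views,
# including one very slow outlier.
lcp_ms = [1200, 1400, 1500, 1700, 1900, 2100, 2400, 9000]

print(percentile(lcp_ms, 75))  # 2100: unaffected by the single outlier
print(percentile(lcp_ms, 95))  # 9000: the outlier is the result
```

The one slow visit dominates the 95th percentile but leaves the 75th percentile untouched, which is the trade-off described above.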

But it comes with caveats. For example, the data is pretty slow to reflect changes because it is aggregated over a rolling 28-day window, meaning it is not the same as real-time monitoring. At the same time, if you plan on using the data yourself, you may find yourself limited to reporting within that floating 28-day range unless you make use of the CrUX History API or BigQuery to produce historical results you can measure against. CrUX is what fuels PSI and Google Search Console, but it is also available in other tools you may already use.
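
If you want to look beyond the current 28-day window, a request to the CrUX History API might be put together roughly like this. The endpoint and field names reflect my understanding of the public API and should be checked against the official reference; the API key is a placeholder, `build_history_request` is a hypothetical helper, and the sketch only builds the request rather than sending it.

```python
import json
import urllib.request

# Endpoint as I understand it from the public CrUX API docs; verify before use.
CRUX_HISTORY_ENDPOINT = (
    "https://chromeuxreport.googleapis.com/v1/records:queryHistoryRecord"
)


def build_history_request(origin: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for an origin's historical data."""
    body = {
        "origin": origin,
        "formFactor": "PHONE",
        "metrics": ["largest_contentful_paint", "cumulative_layout_shift"],
    }
    return urllib.request.Request(
        url=f"{CRUX_HISTORY_ENDPOINT}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_history_request("https://example.com", "YOUR_API_KEY")
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request), then json.load(...) on the response.
```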

Barry Pollard, a web performance developer advocate for Chrome, wrote an excellent primer on the CrUX Report for Smashing Magazine.

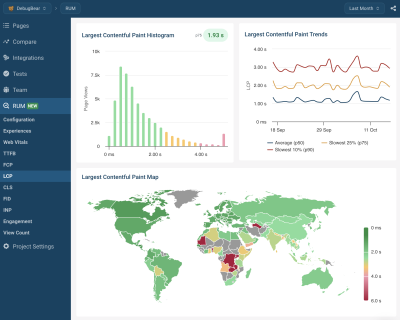

Type 2: Full Real-User Monitoring (RUM)

If CrUX offers one flavor of real-user data, then we can consider “full real-user data” to be another flavor that provides even more in the way of individual experiences, such as specific network requests made by the page. This data is distinct from CrUX because it’s collected directly by the website owner by installing an analytics snippet on their website.

Unlike CrUX data, full RUM pulls data from other users using other browsers in addition to Chrome and does so on a continual basis. That means there’s no waiting 28 days for a fresh set of data to see the impact of any changes made to a site.

You can see how you might wind up with different results in performance tests simply by the type of real-user monitoring (RUM) that is in use. Both types are useful, but

Does Lighthouse Use RUM Data, Too?

It does not! It uses synthetic data, or what we commonly call lab data. And, just like RUM, we can explain the concept of lab data by breaking it up into two different types.

Type 1: Observed Data

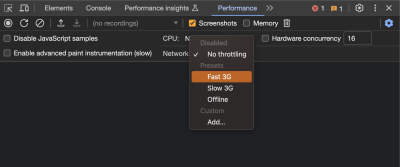

Observed data is performance as the browser sees it. So, instead of monitoring real information collected from real users, observed data is more like defining the test conditions ourselves. For example, we could add throttling to the test environment to enforce an artificial condition where the test opens the page on a slower connection. You might think of it like racing a car in virtual reality, where the conditions are decided in advance, rather than racing on a live track where conditions may vary.

Type 2: Simulated Data

While we called that last type of data “observed data,” that is not an official industry term or anything. It’s more of a necessary label to help distinguish it from simulated data, which describes how Lighthouse (and many other tools that include Lighthouse in their feature set, such as PSI) applies throttling to a test environment and the results it produces.

The reason for the distinction is that there are different ways to throttle a network for testing. Simulated throttling starts by collecting data on a fast internet connection, then estimates how quickly the page would have loaded on a different connection. The result is a much faster test than it would be to apply throttling before collecting information. Lighthouse can often grab the results and calculate its estimates faster than the time it would take to gather the information and parse it on an artificially slower connection.
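
The “measure fast, then estimate slow” idea can be sketched with a deliberately crude model. This is not how Lighthouse’s simulation works internally; it is a toy estimate that assumes load time is just round-trip latency plus download time, and the `Connection` type, the connection profiles, and the page numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    rtt_ms: float           # round-trip time in milliseconds
    throughput_kbps: float  # download throughput in kilobits per second


def estimate_transfer_ms(size_kb: float, round_trips: int, conn: Connection) -> float:
    """Toy estimate: latency cost of the round trips plus the time
    needed to push the bytes through the connection."""
    latency_ms = round_trips * conn.rtt_ms
    download_ms = (size_kb * 8) / conn.throughput_kbps * 1000
    return latency_ms + download_ms


# Hypothetical profiles: the connection the test really ran on,
# and the slower connection we want an estimate for.
fast = Connection(rtt_ms=20, throughput_kbps=100_000)
slow = Connection(rtt_ms=150, throughput_kbps=1_600)

# Hypothetical page observed during the fast run: 400 KB over 4 round trips.
print(estimate_transfer_ms(400, 4, fast))
print(estimate_transfer_ms(400, 4, slow))
```

The page is only loaded once, on the fast connection. Everything about the slow connection is arithmetic, which is why a simulated test finishes so quickly and also why its accuracy depends entirely on how good the model is.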

Simulated And Observed Data In Lighthouse

Simulated data is the data that Lighthouse uses by default for performance reporting. It’s also what PageSpeed Insights uses since it is powered by Lighthouse under the hood, although PageSpeed Insights also relies on real-user experience data from the CrUX report.

However, it is also possible to collect observed data with Lighthouse. This data is more reliable since it doesn’t depend on an incomplete simulation of Chrome internals and the network stack. The accuracy of observed data depends on how the test environment is set up. If throttling is applied at the operating system level, then the metrics match what a real user with those network conditions would experience. DevTools throttling is easier to set up, but doesn’t accurately reflect how server connections work on the network.
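
For anyone running Lighthouse from the command line, the throttling approach is chosen with the `--throttling-method` flag. The sketch below only assembles the command. `lighthouse_command` is a hypothetical helper, the report path is arbitrary, and actually running the command assumes the Lighthouse CLI is installed.

```python
import shlex

# Values accepted by the Lighthouse CLI's --throttling-method flag:
#   simulate: the default, simulated throttling
#   devtools: throttling applied by Chrome DevTools during the run
#   provided: Lighthouse applies nothing, e.g. when the OS is already throttled
ALLOWED_METHODS = {"simulate", "devtools", "provided"}


def lighthouse_command(url: str, throttling_method: str = "devtools") -> list:
    """Build the argument list for a Lighthouse CLI run."""
    if throttling_method not in ALLOWED_METHODS:
        raise ValueError(f"unknown throttling method: {throttling_method}")
    return [
        "lighthouse",
        url,
        f"--throttling-method={throttling_method}",
        "--output=json",
        "--output-path=./report.json",
    ]


cmd = lighthouse_command("https://example.com", "provided")
print(shlex.join(cmd))
# subprocess.run(cmd, check=True) would execute it if the CLI is available.
```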

Limitations Of Lab Data

Lab data is fundamentally limited by the fact that it only looks at a single experience in a pre-defined environment. This environment often doesn’t even match the average real user on the website, who may have a faster network connection or a slower CPU. Continuous real-user monitoring can actually tell you how users are experiencing your website and whether it’s fast enough.

So why use lab data at all?

Google CrUX data only reports metric values with no debug data telling you how to improve your metrics. In contrast, lab reports contain a lot of analysis and recommendations on how to improve your page speed.

Why Is My Lighthouse LCP Score Worse Than The Real User Data?

It’s a little easier to explain different scores now that we’re familiar with the different types of data used by performance auditing tools. We now know that Google reports on the 75th percentile of real users when reporting core web vitals, which include Largest Contentful Paint (LCP).

“By using the 75th percentile, we know that most visits to the site (3 of 4) experienced the target level of performance or better. Additionally, the 75th percentile value is less likely to be affected by outliers. Returning to our example, for a site with 100 visits, 25 of those visits would need to report large outlier samples for the value at the 75th percentile to be affected by outliers. While 25 of 100 samples being outliers is possible, it is much less likely than for the 95th percentile case.”

On the flip side, simulated data from Lighthouse neither reports on real users nor accounts for outlier experiences in the same way that CrUX does. So, if we were to set heavy throttling on the CPU or network of a test environment in Lighthouse, we’re actually embracing outlier experiences that CrUX might otherwise toss out. Because Lighthouse applies heavy throttling by default, the result is that we get a worse LCP score in Lighthouse than we do in PSI simply because Lighthouse’s data effectively looks at a slow outlier experience.

Why Is My Lighthouse CLS Score Better Than The Real User Data?

Just so we’re on the same page, Cumulative Layout Shift (CLS) measures the “visual stability” of a page layout. If you’ve ever visited a page, scrolled down it a little before the page has fully loaded, and then noticed that your place on the page shifts when the page load is complete, then you know exactly what CLS is and how it feels.

The nuance here has to do with page interactions. We know that real users are capable of interacting with a page even before it has fully loaded. This is a big deal when measuring CLS because layout shifts often occur lower on the page after a user has scrolled down the page. CrUX data is ideal here because it’s based on real users who would do such a thing and bear the worst effects of CLS.

Lighthouse’s simulated data, meanwhile, does no such thing. It waits patiently for the full page load and never interacts with parts of the page. It doesn’t scroll, click, tap, hover, or interact in any way.

This is why you’re more likely to receive a worse CLS score (that is, a higher CLS value) in a PSI report than you’d get in Lighthouse. It’s not that PSI likes you less, but that the real users in its report are a better reflection of how users interact with a page and are more likely to experience CLS than simulated lab data.
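
Here is a small sketch of why shifts that happen after scrolling change the result. It assumes the session-window definition of CLS, where shifts are grouped into windows with gaps of at most one second and a total length of at most five seconds, and the largest window total is reported. The exact boundary handling is simplified, and the shift values are made up.

```python
def cumulative_layout_shift(shifts: list) -> float:
    """shifts: (timestamp_seconds, shift_score) pairs.
    Returns the largest session-window total."""
    best = 0.0
    window_start = None
    previous_time = None
    window_total = 0.0
    for time_s, score in sorted(shifts):
        starts_new_window = (
            window_start is None
            or time_s - previous_time > 1.0   # gap between shifts too long
            or time_s - window_start > 5.0    # window has grown too long
        )
        if starts_new_window:
            window_start = time_s
            window_total = 0.0
        window_total += score
        previous_time = time_s
        best = max(best, window_total)
    return best


# Hypothetical shifts: two small ones during load, two larger ones that
# only happen once a real user scrolls further down the page.
load_shifts = [(0.4, 0.02), (0.9, 0.03)]
scroll_shifts = [(6.0, 0.10), (6.5, 0.08)]

lab_cls = cumulative_layout_shift(load_shifts)
field_cls = cumulative_layout_shift(load_shifts + scroll_shifts)
print(round(lab_cls, 2), round(field_cls, 2))
```

A lab run that never scrolls only ever sees the first pair of shifts, so it reports the smaller value, while the real user’s session includes the second, larger window.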

Why Is Interaction to Next Paint Missing In My Lighthouse Report?

This is another case where it’s helpful to know the different types of data used in different tools and how that data interacts (or not) with the page. That’s because the Interaction to Next Paint (INP) metric is all about interactions. It’s right there in the name!

The fact that Lighthouse’s simulated lab data does not interact with the page is a dealbreaker for an INP report. INP is a measure of the latency for all interactions on a given page, where the highest latency, or close to it, informs the final score. For example, if a user clicks on an accordion panel and it takes longer for the content in the panel to render than any other interaction on the page, that’s what gets used to evaluate INP.
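
The “highest latency, or close to it” rule can be sketched as follows. This assumes the documented behavior of ignoring one of the slowest interactions for every 50 interactions on the page; `interaction_to_next_paint` is a hypothetical helper and the latencies are made up.

```python
from typing import Optional


def interaction_to_next_paint(latencies_ms: list) -> Optional[float]:
    """Pick the interaction latency that represents the page visit."""
    if not latencies_ms:
        # No interactions means no INP value, which is the situation
        # in a lab test that never clicks, taps, or types.
        return None
    ordered = sorted(latencies_ms, reverse=True)
    # Skip one of the slowest interactions per 50 interactions,
    # but always leave at least one to report.
    skipped = min(len(ordered) // 50, len(ordered) - 1)
    return ordered[skipped]


print(interaction_to_next_paint([40, 80, 320]))         # few interactions: the slowest one
print(interaction_to_next_paint([100] * 59 + [900]))    # many interactions: one outlier ignored
print(interaction_to_next_paint([]))                    # no interactions: nothing to report
```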

So, when INP becomes an official core web vitals metric in March 2024, and you notice that it’s not showing up in your Lighthouse report, you’ll know exactly why it isn’t there.

Note: It’s possible to script user flows with Lighthouse, including in DevTools. But that probably goes too deep for this article.

Why Is My Time To First Byte Score Worse For Real Users?

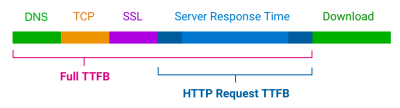

The Time to First Byte (TTFB) is what immediately comes to mind for many of us when thinking about page speed performance. We’re talking about the time between establishing a server connection and receiving the first byte of data to render a page.

TTFB identifies how fast or slow a web server is to respond to requests. What makes it special in the context of core web vitals, even though it is not considered a core web vital itself, is that it precedes all other metrics. The web server needs to establish a connection in order to receive the first byte of data and render everything else that core web vitals metrics measure. TTFB is essentially an indication of how fast users can navigate, and core web vitals can’t happen without it.

You might already see where this is going. When we start talking about server connections, there are going to be differences between the way that RUM data observes the TTFB versus how lab data approaches it. As a result, we’re bound to get different scores based on which performance tools we’re using and in which environment they are. As such, TTFB is more of a “rough guide,” as Jeremy Wagner and Barry Pollard explain:

“Websites vary in how they deliver content. A low TTFB is crucial for getting markup out to the client as soon as possible. However, if a website delivers the initial markup quickly, but that markup then requires JavaScript to populate it with meaningful content […], then achieving the lowest possible TTFB is especially important so that the client-rendering of markup can occur sooner. […] This is why the TTFB thresholds are a “rough guide” and will need to be weighed against how your site delivers its core content.”

So, if your TTFB score comes in higher when using a tool that relies on RUM data than the score you receive from Lighthouse’s lab data, it’s probably because of caches being hit when testing a particular page. Or perhaps the real user is coming in from a shortened URL that redirects them before connecting to the server. It’s even possible that a real user is connecting from a place that is really far from your web server, which takes a little extra time, particularly if you’re not using a CDN or running edge functions. It really depends on both the user and how you serve data.
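
One way to picture those differences is to treat TTFB as the sum of everything that happens before the first byte arrives. The sketch below assumes TTFB is measured from the start of the navigation, so redirects, DNS lookup, and connection setup all count. The `NavigationPhases` type and every timing value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NavigationPhases:
    redirect_ms: float = 0.0     # e.g. a shortened URL hopping to the real one
    dns_ms: float = 0.0          # DNS lookup
    connect_ms: float = 0.0      # TCP and TLS setup, grows with distance
    server_wait_ms: float = 0.0  # request sent until the first byte comes back

    def ttfb_ms(self) -> float:
        """Everything from navigation start to the first byte of the response."""
        return self.redirect_ms + self.dns_ms + self.connect_ms + self.server_wait_ms


# Hypothetical lab run: no redirect, test agent close to the server, warm cache.
lab = NavigationPhases(dns_ms=10, connect_ms=30, server_wait_ms=120)

# Hypothetical real user: arrives via a short link, far from the server, cache miss.
real_user = NavigationPhases(redirect_ms=250, dns_ms=40, connect_ms=180, server_wait_ms=400)

print(lab.ttfb_ms(), real_user.ttfb_ms())
```

Only the last phase is really about how fast the server responds, which is part of why TTFB works better as a rough guide than as a precise server benchmark.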

This article has already introduced some of the nuances involved when collecting web vitals data. Different tools and data sources often report different metric values. So which ones can you trust?

When working with lab data, I suggest preferring observed data over simulated data. But you’ll see differences even between tools that all deliver high-quality data. That’s because no two tests are the same, with different test locations, CPU speeds, or Chrome versions. There’s no one right value. Instead, you can use the lab data to identify optimizations and see how your website changes over time when tested in a consistent environment.

Ultimately, what you want to look at is how real users experience your website. From an SEO standpoint, the 28-day Google CrUX data is the gold standard. However, it won’t be accurate if you’ve rolled out performance improvements over the last few weeks. Google also doesn’t report CrUX data for some high-traffic pages because the visitors may not be logged in to their Google profile.

Installing a custom RUM solution on your website can solve that issue, but the numbers won’t match CrUX exactly. That’s because visitors using browsers other than Chrome are now included, as are users with Chrome analytics reporting disabled.
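
A quick sketch shows how the audience alone can move the number. It compares the 75th percentile of made-up LCP beacons for every visitor against the same calculation restricted to Chrome. Filtering by browser is only a rough stand-in for CrUX, which also depends on users having opted in, and the `p75` helper and beacon data are hypothetical.

```python
import math


def p75(values: list) -> float:
    """Nearest-rank 75th percentile."""
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical RUM beacons: (browser, LCP in milliseconds).
beacons = [
    ("Chrome", 1800), ("Chrome", 2000), ("Chrome", 2200), ("Chrome", 2600),
    ("Safari", 2900), ("Safari", 3400),
    ("Firefox", 2100), ("Firefox", 3100),
]

chrome_only = [lcp for browser, lcp in beacons if browser == "Chrome"]
all_visitors = [lcp for _, lcp in beacons]

print(p75(chrome_only))   # 2200
print(p75(all_visitors))  # 2900
```

Same site, same time period, same metric, and the two views still disagree by several hundred milliseconds simply because they describe different sets of visitors.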

Finally, while Google focuses on the fastest 75% of experiences, that doesn’t mean the 75th percentile is the correct number to look at. Even with good core web vitals, 25% of visitors may still have a slow experience on your website.

Wrapping Up

This has been a close look at how different performance tools audit and report on performance metrics, such as core web vitals. Different tools rely on different types of data that are capable of producing different results when measuring different performance metrics.

So, if you find yourself with a CLS score in Lighthouse that is far lower than what you get in PSI or DebugBear, go with the Lighthouse report because it makes you look better to the big boss. Just kidding! That difference is a big clue that the data between the two tools is uneven, and you can use that information to help diagnose and fix performance issues.

Are you looking for a tool to track lab data, Google CrUX data, and full real-user monitoring data? DebugBear helps you keep track of all three types of data in one place and optimize your page speed where it counts.
