Welcome back to this series where we’re building web applications with AI tooling.

- Intro & Setup

- Your First AI Prompt

- Streaming Responses

- How Does AI Work

- Prompt Engineering

- AI-Generated Images

- Security & Reliability

- Deploying

In the previous post, we got AI-generated jokes into our Qwik application from the OpenAI API. It worked, but the user experience suffered because we had to wait until the API completed the entire response before updating the client.

A better experience, as you’ll know if you’ve used any AI chat tools, is to respond as soon as each bit of text is generated. It becomes a sort of teletype effect.

That’s what we’re going to build today using HTTP streams.

Prerequisites

Before we get into streams, we need to explore a Qwik quirk related to HTTP requests.

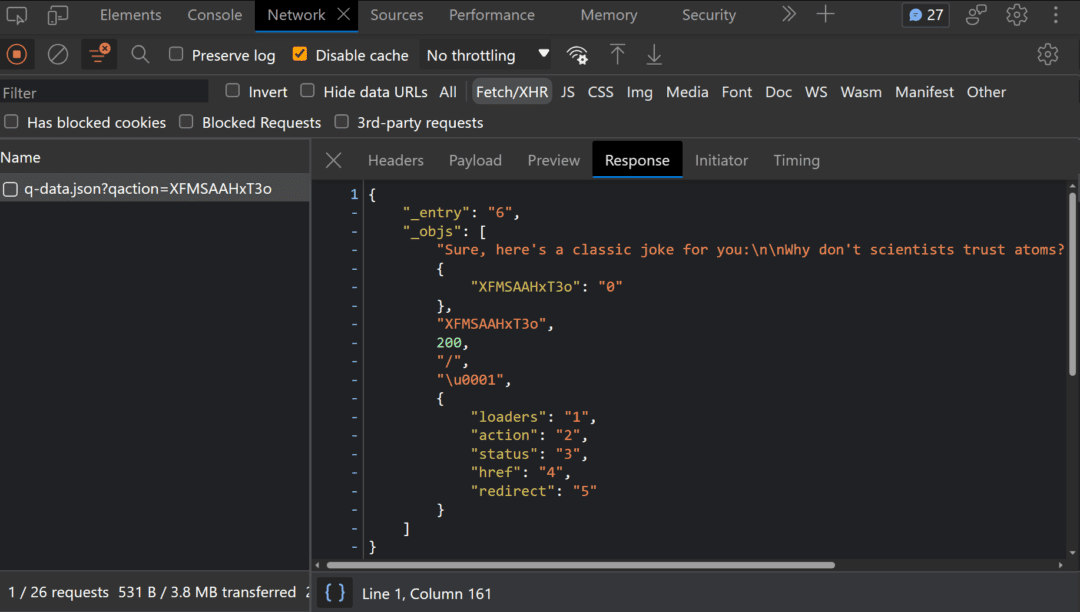

If we examine the current POST request being sent by the form, we can see that the returned payload isn’t just the plain text we returned from our action handler. Instead, it’s this sort of serialized data.

This is the result of how the Qwik Optimizer lazy loads assets, and it is necessary to properly handle the data as it comes back. Unfortunately, this prevents standard streaming responses.

So while routeAction$ and the Form component are super handy, we’ll have to do something else.

To their credit, the Qwik team does provide a well-documented approach for streaming responses. However, it involves their server$ function and async generator functions. This would probably be the right approach if we’re talking strictly about Qwik, but this series is for everyone. I’ll avoid this implementation, as it’s too specific to Qwik, and focus on broadly applicable concepts instead.

Refactor Server Logic

It sucks that we can’t use route actions because they’re great. So what can we use?

Qwik City offers a few options. The best I found is middleware. It provides enough access to primitive tools that we can accomplish what we need, and the concepts will apply to other contexts besides Qwik.

Middleware is essentially a set of functions that we can inject at various points within the request lifecycle of our route handler. We can define them by exporting named constants for the hooks we want to target (onRequest, onGet, onPost, onPut, onDelete).

So instead of relying on a route action, we can use a middleware that hooks into any POST request by exporting an onPost middleware. In order to support streaming, we’ll want to return a standard Response object. We can do so by creating a Response object and passing it to the requestEvent.send() method.

Here’s a basic (non-streaming) example:

/** @type {import('@builder.io/qwik-city').RequestHandler} */
export const onPost = (requestEvent) => {
  requestEvent.send(new Response('Hello Squirrel!'))
}

Before we tackle streaming, let’s get the same functionality from the old route action implemented with middleware. We can copy most of the code into the onPost middleware, but we won’t have access to formData.

Fortunately, we can recreate that data from the requestEvent.parseBody() method. We’ll also want to use requestEvent.send() to respond with the OpenAI data instead of a return statement.

/** @type {import('@builder.io/qwik-city').RequestHandler} */
export const onPost = async (requestEvent) => {
  const OPENAI_API_KEY = requestEvent.env.get('OPENAI_API_KEY')
  // Rebuild the submitted form data from the request body
  const formData = await requestEvent.parseBody()
  const prompt = formData.prompt
  const body = {
    model: 'gpt-3.5-turbo',
    messages: [{ role: 'user', content: prompt }]
  }
  const response = await fetch('https://api.openai.com/v1/chat/completions', {
    // ... fetch options
  })
  const data = await response.json()
  const responseBody = data.choices[0].message.content
  requestEvent.send(new Response(responseBody))
}

Refactor Client Logic

Replacing the route actions has the unfortunate side effect of meaning we can’t use the <Form> component anymore. We’ll have to use a regular HTML <form> element and recreate all the benefits we had before, including sending HTTP requests with JavaScript, tracking the loading state, and accessing the results. Let’s refactor our client side to support those features again.

We can break these requirements down to needing two things: a JavaScript solution for submitting forms and a reactive state for managing loading states and results.

I’ve covered submitting HTML forms with JavaScript in depth several times in the past, so today I’ll just share the snippet, which I put inside a utils.js file in the root of my project. This jsFormSubmit function accepts an HTMLFormElement, then constructs a fetch request based on the form attributes and returns the resulting Promise:

/**
 * @param {HTMLFormElement} form
 */
export function jsFormSubmit(form) {
  const url = new URL(form.action)
  const formData = new FormData(form)
  const searchParameters = new URLSearchParams(formData)
  /** @type {Parameters<typeof fetch>[1]} */
  const fetchOptions = {
    method: form.method
  }
  if (form.method.toLowerCase() === 'post') {
    // POST: send the fields in the request body
    fetchOptions.body = form.enctype === 'multipart/form-data' ? formData : searchParameters
  } else {
    // GET: send the fields in the query string
    url.search = searchParameters
  }
  return fetch(url, fetchOptions)
}

This generic function can be used to submit any HTML form, so it’s handy to use in a submit event handler. Sweet!
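To make the two branches concrete, here is a DOM-free sketch of the same decision logic. The helper name buildFormRequest and its plain-object input are assumptions made for illustration (they are not part of the original utility), and the example.com URLs are placeholders. It only mirrors how jsFormSubmit chooses between a request body and a query string; it does not send anything.

```javascript
/**
 * Hypothetical helper (not from the original post): mirrors the branching in
 * jsFormSubmit without needing a real <form> element.
 * @param {{ action: string, method: string, fields: Record<string, string> }} form
 */
function buildFormRequest(form) {
  const url = new URL(form.action)
  const searchParameters = new URLSearchParams(form.fields)
  const fetchOptions = { method: form.method }

  if (form.method.toLowerCase() === 'post') {
    // POST: the fields travel in the request body
    fetchOptions.body = searchParameters
  } else {
    // Anything else (typically GET): the fields travel in the query string
    url.search = searchParameters.toString()
  }
  return { url, fetchOptions }
}

const postRequest = buildFormRequest({
  action: 'https://example.com/',
  method: 'post',
  fields: { prompt: 'Tell me a joke' },
})
const getRequest = buildFormRequest({
  action: 'https://example.com/search',
  method: 'get',
  fields: { q: 'streams' },
})

console.log(postRequest.fetchOptions.body.toString()) // prompt=Tell+me+a+joke
console.log(getRequest.url.href) // https://example.com/search?q=streams
```

The returned url and fetchOptions could be passed straight to fetch(url, fetchOptions), which is what the real function does with the values it reads from the form.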

As for the reactive data, Qwik provides two options, useStore and useSignal. I prefer useStore, which allows us to create an object whose properties are reactive. This means changes to the object’s properties will automatically be reflected wherever they’re referenced in the UI.

We can use useStore to create a “state” object in our component to track the loading state of the HTTP request as well as the text response.

import { $, component$, useStore } from "@builder.io/qwik";
// other setup logic
export default component$(() => {
  const state = useStore({
    isLoading: false,
    text: '',
  })
  // other component logic
})

Next, we can update the template. Since we can no longer use the action object we had before, we can replace references to action.isRunning and action.value with state.isLoading and state.text, respectively (don’t ask me why I changed the property names 🤷♂️). I’ll also add a “submit” event handler to the form called handleSubmit, which we’ll look at shortly.

<main>
  <form
    method="post"
    preventdefault:submit
    onSubmit$={handleSubmit}
  >
    <div>
      <label for="prompt">Prompt</label>
      <textarea name="prompt" id="prompt">
        Tell me a joke
      </textarea>
    </div>
    <button type="submit" aria-disabled={state.isLoading}>
      {state.isLoading ? 'One sec...' : 'Tell me'}
    </button>
  </form>
  {state.text && (
    <article>
      <p>{state.text}</p>
    </article>
  )}
</main>

Note that the <form> does not explicitly provide an action attribute. By default, an HTML form will submit data to the current URL, so we only need to set the method to POST and submit this form to trigger the onPost middleware we defined earlier.

Now, the last step to get this refactor working is defining handleSubmit. Just like we did in the previous post, we need to wrap an event handler inside Qwik’s $ function.

Inside the event handler, we’ll want to clear out any previous data from state.text, set state.isLoading to true, then pass the form’s DOM node to our fancy jsFormSubmit function. This should submit the HTTP request for us. Once it comes back, we can update state.text with the response body, and return state.isLoading to false.

const handleSubmit = $(async (event) => {
  state.text = ''
  state.isLoading = true
  /** @type {HTMLFormElement} */
  const form = event.target
  const response = await jsFormSubmit(form)
  state.text = await response.text()
  state.isLoading = false
})

OK! We should now have a client-side form that uses JavaScript to submit an HTTP request to the server while tracking the loading and response states, and updating the UI accordingly.

That was a lot of work to get the same solution we had before but with fewer features. BUT the key benefit is we now have direct access to the platform primitives we need to support streaming.

Enable Streaming on the Server

Before we start streaming responses from OpenAI, I think it’s helpful to start with a very basic example to get a better grasp of streams. Streams allow us to send small chunks of data over time. So as an example, let’s print out some iconic David Bowie lyrics in tempo with the song, “Space Oddity”.

When we construct our Response object, instead of passing plain text, we’ll want to pass a stream. We’ll create the stream shortly, but here’s the idea:

/** @type {import('@builder.io/qwik-city').RequestHandler} */
export const onPost = (requestEvent) => {
  requestEvent.send(new Response(stream))
}

We’ll create a very rudimentary ReadableStream using the ReadableStream constructor and pass it an optional parameter. This optional parameter can be an object with a start method that’s called when the stream is constructed.

The start method is responsible for the stream’s logic and has access to the stream controller, which is used to send data and close the stream.

const stream = new ReadableStream({
  start(controller) {
    // Stream logic goes here
  }
})

OK, let’s plan out that logic. We’ll have an array of song lyrics and a function to ‘sing’ them (pass them to the stream). The sing function will take the first item in the array and pass that to the stream using the controller.enqueue() method. If it’s the last lyric in the list, we can close the stream with controller.close(). Otherwise, the sing function can call itself again after a short pause.

const stream = new ReadableStream({
  start(controller) {
    const lyrics = ['Ground', ' control', ' to major', ' Tom.']
    function sing() {
      // Send the next lyric, then either finish or schedule the following one
      const lyric = lyrics.shift()
      controller.enqueue(lyric)
      if (lyrics.length < 1) {
        controller.close()
      } else {
        setTimeout(sing, 1000)
      }
    }
    sing()
  }
})

So each second, for four seconds, this stream will send out the lyrics “Ground control to major Tom.” Slick!

Because this stream will be used in the body of the Response, the connection will remain open for four seconds until the response completes. But the frontend will have access to each chunk of data as it arrives, rather than waiting the full four seconds.

This doesn’t speed up the total response time (in some cases, streams can increase response times), but it does allow for a faster perceived response, and that makes a better user experience.
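To see the “each chunk as it arrives” idea outside of any framework, here is a minimal sketch that can run in a recent Node.js version or a browser console. The helper names createTimedStream and logArrivals are hypothetical, and the 50 ms delay is an arbitrary stand-in for the one-second pause so the demo finishes quickly. It assumes the list of chunks is not empty.

```javascript
/**
 * Hypothetical helper: a stream that emits one chunk, waits delayMs,
 * emits the next, and closes after the last one.
 */
function createTimedStream(chunks, delayMs) {
  const queue = [...chunks]
  return new ReadableStream({
    start(controller) {
      function next() {
        controller.enqueue(queue.shift())
        if (queue.length < 1) {
          controller.close()
        } else {
          setTimeout(next, delayMs)
        }
      }
      next()
    },
  })
}

/**
 * Hypothetical helper: records how many milliseconds after the start
 * each chunk became readable.
 */
async function logArrivals(stream) {
  const startedAt = Date.now()
  const arrivals = []
  const reader = stream.getReader()
  while (true) {
    const { value, done } = await reader.read()
    if (done) break
    arrivals.push({ chunk: value, ms: Date.now() - startedAt })
  }
  return arrivals
}

const arrivalsPromise = logArrivals(
  createTimedStream(['Ground', ' control', ' to major', ' Tom.'], 50)
)
arrivalsPromise.then((arrivals) => console.log(arrivals))
```

The logged timestamps grow from one chunk to the next, which is the point: the reader can act on the first chunk long before the last one exists.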

Here’s what my code looks like:

/** @type {import('@builder.io/qwik-city').RequestHandler} */
export const onPost = async (requestEvent) => {
  const stream = new ReadableStream({
    start(controller) {
      const lyrics = ['Ground', ' control', ' to major', ' Tom.']
      function sing() {
        const lyric = lyrics.shift()
        controller.enqueue(lyric)
        if (lyrics.length < 1) {
          controller.close()
        } else {
          setTimeout(sing, 1000)
        }
      }
      sing()
    }
  })
  requestEvent.send(new Response(stream))
}

Unfortunately, as it stands right now, the client will still be waiting four seconds before seeing the entire response, and that’s because the client isn’t expecting a streamed response yet.

Let’s fix that.

Enable Streaming on the Client

Even when dealing with streams, the default browser behavior when receiving a response is to wait for it to complete. In order to get the behavior we want, we’ll need to use client-side JavaScript to make the request and process the streaming body of the response.

We’ve already tackled that first part inside our handleSubmit function. Let’s start processing that response body.

The response body is a ReadableStream, and we can get a reader for it from the body’s getReader() method. This reader has its own read() method that we can use to access the next chunk of data, as well as whether the response is done streaming or not.

The only ‘gotcha’ is that the data in each chunk doesn’t come in as text, it comes in as a Uint8Array, which is “an array of 8-bit unsigned integers.” It’s basically the representation of the binary data, and you don’t really need to understand any deeper than that unless you want to sound very smart at a party (or boring).

The important thing to understand is that on their own, these data chunks aren’t very useful. To get something we can use, we’ll need to decode each chunk of data using a TextDecoder.
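As a quick illustration of that decoding step, the sketch below fakes two network chunks by splitting an encoded string by hand. The split point is an assumption chosen to land in the middle of a multi-byte character. It also shows the optional { stream: true } flag of TextDecoder.decode(), which is not used elsewhere in this post but may be worth knowing about: it lets one decoder hold on to incomplete bytes until the next chunk arrives.

```javascript
// Simulate two network chunks. 'Tom ' is 4 bytes and the rocket emoji is 4 bytes,
// so cutting at byte 6 splits the emoji across the two chunks.
const bytes = new TextEncoder().encode('Tom 🚀')
const firstChunk = bytes.slice(0, 6)
const secondChunk = bytes.slice(6)

// Decoding each chunk on its own turns the split character into replacement characters.
const naive =
  new TextDecoder().decode(firstChunk) + new TextDecoder().decode(secondChunk)

// A single decoder with { stream: true } buffers the incomplete bytes between calls.
const decoder = new TextDecoder()
const streamed =
  decoder.decode(firstChunk, { stream: true }) +
  decoder.decode(secondChunk, { stream: true }) +
  decoder.decode() // flush anything still buffered

console.log(firstChunk instanceof Uint8Array) // true
console.log(streamed === 'Tom 🚀') // true
console.log(naive === 'Tom 🚀') // false
```

For plain ASCII text the two approaches behave the same, so whether the flag matters depends on the characters being streamed.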

OK, that’s a lot of theory. Let’s break down the logic and then look at some code.

When we get the response back, we need to:

- Grab the reader from the response body using response.body.getReader().

- Set up a decoder using TextDecoder and a variable to track the streaming status.

- Process each chunk until the stream is complete, with a while loop that does this:

  - Grab the next chunk’s data and stream status.

  - Decode the data and use it to update our app’s state.text.

  - Update the streaming status variable, terminating the loop when complete.

- Update the loading state of the app by setting state.isLoading to false.
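The steps above can be sketched without Qwik or a real HTTP request. In this sketch, readTextStream is a hypothetical helper, demoStream is a hand-built stand-in for response.body, and state is a plain object rather than a reactive store.

```javascript
/**
 * Hypothetical helper: reads a ReadableStream of bytes to completion,
 * calling onText with each decoded piece as it arrives.
 */
async function readTextStream(stream, onText) {
  const reader = stream.getReader()
  const decoder = new TextDecoder()
  let isStillStreaming = true
  while (isStillStreaming) {
    const { value, done } = await reader.read()
    // On the final read, value is undefined and decode() returns an empty string
    onText(decoder.decode(value))
    isStillStreaming = !done
  }
}

// Stand-in for response.body: a stream that emits two encoded chunks, then closes.
const encoder = new TextEncoder()
const demoStream = new ReadableStream({
  start(controller) {
    controller.enqueue(encoder.encode('Ground'))
    controller.enqueue(encoder.encode(' control'))
    controller.close()
  },
})

const state = { text: '', isLoading: true }
const finished = readTextStream(demoStream, (text) => {
  state.text += text
}).then(() => {
  state.isLoading = false
  console.log(state.text) // Ground control
})
```

Keeping the loop in a small function like this is a design choice, not a requirement; the same logic works inline in an event handler.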

The new handleSubmit function should look something like this:

const handleSubmit = $(async (event) => {
  state.text = ''
  state.isLoading = true
  /** @type {HTMLFormElement} */
  const form = event.target
  const response = await jsFormSubmit(form)
  // Parse streaming body
  const reader = response.body.getReader()
  const decoder = new TextDecoder()
  let isStillStreaming = true
  while (isStillStreaming) {
    const { value, done } = await reader.read()
    const chunkValue = decoder.decode(value)
    state.text += chunkValue
    isStillStreaming = !done
  }
  state.isLoading = false
})

Now, when I submit the form, I see something like:

“Ground

control

to major

Tom.”

Hell yeah!!!

OK, most of the work is done. Now we just need to replace our demo stream with the OpenAI response.

Stream OpenAI Response

Looking back at our original implementation, the first thing we need to do is modify the request to OpenAI to let them know that we would like a streaming response. We can do that by setting the stream property in the fetch payload to true.

const body = {
  model: 'gpt-3.5-turbo',
  messages: [{ role: 'user', content: prompt }],
  stream: true
}
const response = await fetch('https://api.openai.com/v1/chat/completions', {
  method: 'post',
  headers: {
    'Content-Type': 'application/json',
    Authorization: `Bearer ${OPENAI_API_KEY}`,
  },
  body: JSON.stringify(body)
})

Next, we could pipe the response from OpenAI directly to the client, but we might not want to do that. The data they send doesn’t really align with what we want to send to the client because it looks like this (two chunks, one with data, and one representing the end of the stream):

data: {"id":"chatcmpl-4bJZRnslkje3289REHFEH9ej2","object":"chat.completion.chunk","created":1690319476,"model":"gpt-3.5-turbo-0613","choices":[{"index":0,"delta":{"content":"Because"},"finish_reason":"stop"}]}

data: [DONE]

Instead, what we’ll do is create our own stream, similar to the David Bowie lyrics, that will do some setup, enqueue chunks of data into the stream, and close the stream. Let’s start with an outline:

const stream = new ReadableStream({
  async start(controller) {
    // Any setup before streaming
    // Send chunks of data
    // Close stream
  }
})

Since we’re dealing with a streaming fetch response from OpenAI, a lot of the work we need to do here can actually be copied from the client-side stream handling. This part should look familiar:

const reader = response.body.getReader()
const decoder = new TextDecoder()
let isStillStreaming = true
while (isStillStreaming) {
  const { value, done } = await reader.read()
  const chunkValue = decoder.decode(value)
  // Here’s where things will be different
  isStillStreaming = !done
}

This snippet was taken almost directly from the frontend stream processing example. The only difference is that we need to treat the data coming from OpenAI slightly differently. As we saw, the chunks of data they send will look something like “data: [JSON data or done]”. Another gotcha is that every now and then, they’ll actually slip in TWO of these data strings in a single streaming chunk. So here’s what I came up with for processing the data.

- Create a Regular Expression to grab the rest of the string after “data: ”.

- For the unlikely event there is more than one data string, use a while loop to process every match in the string.

- If the current match meets the closing condition (“[DONE]”), close the stream.

- Otherwise, parse the data as JSON and enqueue the first piece of text from the list of options (json.choices[0].delta.content). Fall back to an empty string if none is present.

- Lastly, in order to move to the next match, if there is one, we can use RegExp.exec().
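The steps above can be tried in isolation with a small function that takes one already-decoded chunk and returns the text pieces it contains. The name extractDeltas and the sample chunk are assumptions for illustration; the sample is a trimmed-down version of the payload format shown earlier. The sketch also assumes every “data:” line in the chunk is complete, so a JSON payload split across two network chunks is not handled here.

```javascript
/**
 * Hypothetical helper: pulls the text deltas out of one decoded chunk that may
 * contain several "data: ..." lines, and reports whether [DONE] was seen.
 */
function extractDeltas(chunkValue) {
  const regex = /data:\s*(.*)/g
  const texts = []
  let isDone = false
  let match = regex.exec(chunkValue)
  while (match !== null) {
    const payload = match[1]
    if (payload === '[DONE]') {
      isDone = true
      break
    }
    const json = JSON.parse(payload)
    // Fall back to an empty string when the delta carries no content
    texts.push(json.choices[0].delta.content || '')
    // Advance to the next "data:" line, if there is one
    match = regex.exec(chunkValue)
  }
  return { texts, isDone }
}

// Three data strings delivered in a single chunk
const chunk = [
  'data: {"choices":[{"index":0,"delta":{"content":"Because"}}]}',
  '',
  'data: {"choices":[{"index":0,"delta":{}}]}',
  '',
  'data: [DONE]',
  '',
].join('\n')

console.log(JSON.stringify(extractDeltas(chunk)))
// {"texts":["Because",""],"isDone":true}
```

Because the regular expression uses the g flag, each call to regex.exec() resumes where the previous match ended, which is what makes the while loop walk through every data string.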

The logic is pretty abstract without looking at code, so here’s what the whole stream looks like now:

const stream = new ReadableStream({
  async start(controller) {
    // Do work before streaming
    const reader = response.body.getReader()
    const decoder = new TextDecoder()
    let isStillStreaming = true
    while (isStillStreaming) {
      const { value, done } = await reader.read()
      const chunkValue = decoder.decode(value)
      /**
       * Captures any string after the text `data: `
       * @see https://regex101.com/r/R4QgmZ/1
       */
      const regex = /data:\s*(.*)/g
      let match = regex.exec(chunkValue)
      while (match !== null) {
        const payload = match[1]
        if (payload === '[DONE]') {
          // Close stream and stop processing this chunk
          controller.close()
          break
        } else {
          // Parse the JSON payload and send its text, falling back to an empty string
          const json = JSON.parse(payload)
          const text = json.choices[0].delta.content || ''
          controller.enqueue(text)
          // Move on to the next match, if there is one
          match = regex.exec(chunkValue)
        }
      }
      isStillStreaming = !done
    }
  }
})

Review

That should be everything we need to get streaming working. Hopefully it all makes sense and you got it working on your end.

I think it’s a good idea to review the flow to make sure we’ve got it:

- The user submits the form, which gets intercepted and sent with JavaScript. This is necessary to process the stream when it returns.

- The request is received by the onPost middleware, which forwards the data to the OpenAI API along with the setting to return the response as a stream.

- The OpenAI response will be sent back as a stream of chunks, some of which contain JSON and the last one being “[DONE]”.

- Instead of passing the OpenAI stream straight through, we create a new stream to use in our own response.

- Inside this stream, we process each chunk of data from the OpenAI response and convert it to something more useful before enqueuing it for our response stream.

- When the OpenAI stream closes, we also close our own stream.

- The JavaScript handler on the client side will also process each chunk of data as it comes in and update the UI accordingly.

Conclusion

The app is working. It’s pretty cool. We covered a lot of interesting things today. Streams are very powerful, but also challenging and, especially when working within Qwik, there are a couple of little gotchas. But because we focused on low-level fundamentals, these concepts should apply across any framework.

As long as you have access to the platform and primitives like streams, requests, and response objects, then this should work. That’s the beauty of fundamentals.

I think we’ve got a pretty decent application going now. The only problem is that right now we’re using a generic text input and asking users to fill in the entire prompt themselves. In fact, they can put in whatever they want. We’ll want to fix that in a future post, but the next post is going to step away from code and focus on understanding how the AI tools actually work.

I hope you’ve been enjoying this series and come back for the rest of it.

Thank you so much for reading. If you liked this article and want to support me, the best ways to do so are to share it, sign up for my newsletter, and follow me on Twitter.

Originally published on austingil.com.
