
This article is Part 1 of Ampere Computing’s Accelerating the Cloud series.

Traditionally, deploying a web application has meant running large, monolithic applications on x86-based servers in a company’s enterprise datacenter. Moving applications to the cloud eliminates the need to overprovision the datacenter, since cloud resources can be allocated based on real-time demand. At the same time, the move to the cloud has been synonymous with a shift to componentized applications (aka microservices). This approach allows applications to scale out easily to hundreds of thousands or even millions of users.

By moving to a cloud native approach, applications can run entirely in the cloud and fully exploit its unique capabilities. For example, with a distributed architecture, developers can scale out seamlessly by creating more instances of an application component rather than running a larger and larger application, much as another application server can be added without adding another database. Many major companies (e.g., Netflix, Wikipedia, and others) have carried the distributed architecture to the next level by breaking applications into individual microservices. Doing so simplifies design, deployment, and load balancing at scale. See The Phoenix Project for more details on breaking down monolithic applications and The Twelve-Factor App for best practices when developing cloud native applications.

Hyperthreading Inefficiencies

Traditional x86 servers are built on general-purpose architectures that were developed primarily for personal computing platforms, where users needed to run a wide range of desktop applications at the same time on a single CPU. Because of this flexibility, the x86 architecture implements advanced capabilities and capacity that are useful for desktop applications but that many cloud applications do not need. However, companies running applications on an x86-based cloud must still pay for these capabilities even when they don’t use them.

To improve utilization, x86 processors employ hyperthreading, which enables one core to run two threads. While hyperthreading allows more of a core’s capacity to be used, it also allows one thread to affect the performance of the other when the core’s resources are overcommitted. Specifically, whenever the two threads contend for the same resources, the contention can add significant and unpredictable latency to operations. It is very difficult to optimize an application when you don’t know, and can’t control, which application it will share a core with. Hyperthreading can be thought of as trying to pay the bills and watch a sports game at the same time. The bills take longer to complete, and you don’t really appreciate the game. It’s better to separate and isolate the tasks by completing the bills first and then concentrating on the game, or by splitting the tasks between two people, one of whom isn’t a football fan.

Hyperthreading also expands the application’s security attack surface, since the application in the other thread could be malware attempting a side-channel attack. Keeping applications in different threads isolated from each other introduces overhead and additional latency at the processor level.
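To make the core-sharing concern concrete, the following is a minimal sketch of how one might list which logical CPUs share a physical core on a Linux host. It assumes the standard sysfs topology files (`/sys/devices/system/cpu/cpuN/topology/thread_siblings_list`) are readable; the function names `parse_cpu_list`, `shared_cores`, and `read_sibling_lists` are hypothetical helpers introduced here for illustration and are not part of any Ampere or vendor tooling.

```python
from pathlib import Path


def parse_cpu_list(text):
    """Parse a Linux CPU list such as "0,64" or "0-3,8" into a sorted list of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def shared_cores(sibling_lists):
    """Return the groups of logical CPUs that share one physical core.

    `sibling_lists` maps a logical CPU id to the raw text of its
    thread_siblings_list file. Groups with a single member (no sharing)
    are left out.
    """
    groups = {tuple(parse_cpu_list(text)) for text in sibling_lists.values()}
    return sorted(group for group in groups if len(group) > 1)


def read_sibling_lists(sysfs_root="/sys/devices/system/cpu"):
    """Read thread_siblings_list for every CPU under sysfs (Linux only)."""
    result = {}
    pattern = "cpu[0-9]*/topology/thread_siblings_list"
    for path in Path(sysfs_root).glob(pattern):
        cpu_id = int(path.parent.parent.name[3:])  # directory name "cpu12" -> 12
        result[cpu_id] = path.read_text()
    return result


if __name__ == "__main__":
    groups = shared_cores(read_sibling_lists())
    if groups:
        for group in groups:
            print("logical CPUs sharing one core:", group)
    else:
        print("no shared cores detected (or topology files not available)")
```

On a host with hyperthreading enabled, each reported group is a pair of logical CPUs that compete for the same core. On a processor that runs one thread per core, the list would be expected to come back empty.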

Cloud Native Optimization

For greater efficiency and ease of design, developers need cloud resources designed to efficiently process their specific data, not everyone else’s data. To achieve this, an efficient cloud native platform accelerates the types of operations typical of cloud native applications. To increase overall performance, instead of building bigger cores that require hyperthreading to execute increasingly complex desktop applications, cloud native processors provide more cores designed to optimize the execution of microservices. This leads to more consistent and deterministic latency, enables transparent scaling, and avoids many of the security issues that arise with hyperthreading, since applications are naturally isolated when they run on their own core.

To accelerate cloud native applications, Ampere has developed the Altra and Altra Max 64-bit cloud native processors. They offer unprecedented density, with up to 128 cores on a single IC, so a single 1U chassis with two sockets can house up to 256 cores in a single rack unit.

Ampere Altra and Ampere Altra Max cores are designed around the Arm Instruction Set Architecture (ISA). While the x86 architecture was initially designed for general-purpose desktops, Arm has grown from a tradition of embedded applications, where deterministic behavior and power efficiency are more of a focus. Starting from this foundation, Ampere processors have been designed specifically for applications where power and core density are important design considerations. Overall, Ampere processors provide an extremely efficient foundation for many cloud native applications, resulting in high performance with predictable and consistent responsiveness combined with higher power efficiency.

For developers, the fact that Ampere processors implement the Arm ISA means there is already an extensive ecosystem of software and tools available for development. In Part 2 of this series, we’ll cover how developers can seamlessly migrate their existing applications to Ampere cloud native platforms offered by leading CSPs and immediately begin accelerating their cloud operations.

The Cloud Native Advantage

A key advantage of running on a cloud native platform is lower latency, leading to more consistent and predictable performance. A microservices approach is fundamentally different from current monolithic cloud applications. It shouldn’t be surprising, then, that optimizing for quality of service and utilization efficiency requires a fundamentally different approach as well.

Microservices break large tasks down into smaller components. The advantage is that because microservices can specialize, they can deliver greater efficiency, such as achieving higher cache utilization between operations compared to a more generalized, monolithic application trying to complete all of the necessary tasks. However, even though microservices typically use fewer compute resources per component, latency requirements at each tier are much stricter than for a typical cloud application. Put another way, each microservice gets only a small share of the latency budget available to the full application.

From an optimization standpoint, predictable and consistent latency is critical: when the responsiveness of each microservice can vary as much as it does on a hyperthreaded x86 architecture, the worst-case latency of the application is the sum of the worst cases of all its microservices. The good news is that this also means even small improvements in microservice latency can yield a significant improvement when applied across multiple microservices.
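The latency-budget reasoning above can be sketched in a few lines. This is an illustrative model only: it assumes the microservices are called one after another in a single request path, and the latency figures, the 200 ms budget, and the function names are hypothetical values chosen for the example rather than measurements from the article.

```python
def per_service_budget(total_budget_ms, num_services):
    """Split an end-to-end latency budget evenly across a chain of services."""
    if num_services <= 0:
        raise ValueError("num_services must be positive")
    return total_budget_ms / num_services


def worst_case_chain_latency(worst_case_ms):
    """Worst-case end-to-end latency of a sequential chain of services.

    Each entry is the worst-case latency of one microservice; for a
    sequential call chain the worst cases simply add up.
    """
    return sum(worst_case_ms)


def improved_chain_latency(worst_case_ms, reduction):
    """Worst-case chain latency after every service improves by `reduction`.

    `reduction` is a fraction, e.g. 0.10 for a 10% improvement per service.
    """
    if not 0 <= reduction <= 1:
        raise ValueError("reduction must be between 0 and 1")
    return sum(latency * (1 - reduction) for latency in worst_case_ms)


if __name__ == "__main__":
    # Hypothetical worst-case latencies (ms) for five services in one request path.
    chain = [20, 35, 50, 15, 30]
    budget_ms = 200  # hypothetical end-to-end budget

    print("budget per service (ms):", per_service_budget(budget_ms, len(chain)))
    print("worst-case end-to-end (ms):", worst_case_chain_latency(chain))
    print("after 10% per-service gain (ms):", improved_chain_latency(chain, 0.10))
```

With these made-up numbers, each service gets 40 ms of a 200 ms budget, and a 10% gain in every service removes about 15 ms from the 150 ms worst case, which is a saving no single service could deliver alone.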

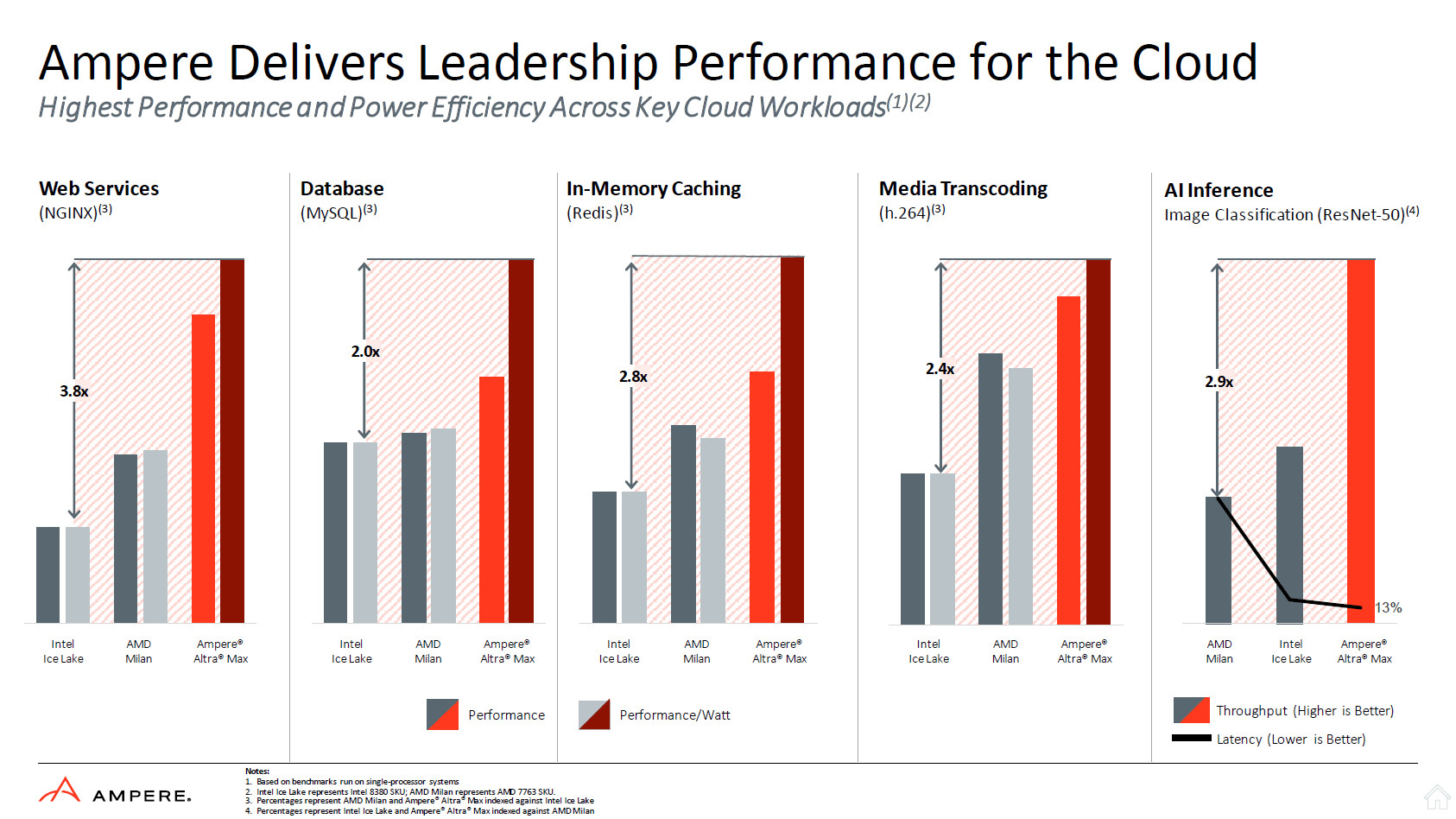

Figure 1 illustrates the performance benefits of running typical cloud applications on a cloud native platform like Ampere Altra Max compared to Intel IceLake and AMD Milan. Ampere Altra Max delivers not only higher performance but even higher performance-per-watt efficiency. The figure also shows that Ampere Altra Max has superior latency (13% of Intel IceLake), providing the consistent performance cloud native applications need.

Figure 1: A cloud native platform like Ampere Altra Max offers superior performance, power efficiency, and latency compared to Intel IceLake and AMD Milan.

Sustainability

Even though it is the CSP who is responsible for managing power consumption in the datacenter, many developers are aware that the public and company stakeholders are increasingly interested in how companies are addressing sustainability. In 2022, cloud datacenters are estimated to have accounted for 80% of total datacenter power consumption [1]. Based on figures from 2019, datacenter power consumption is expected to double by 2030.
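As a rough sense of scale, the projected doubling can be converted into an implied yearly growth rate. The sketch below assumes the doubling is measured from a 2019 baseline and that growth compounds steadily each year; both are simplifying assumptions, not claims from the underlying figures, and `implied_annual_growth` is a hypothetical helper.

```python
def implied_annual_growth(growth_factor, start_year, end_year):
    """Constant yearly growth rate that yields `growth_factor` over the period."""
    years = end_year - start_year
    if years <= 0:
        raise ValueError("end_year must be after start_year")
    return growth_factor ** (1 / years) - 1


if __name__ == "__main__":
    rate = implied_annual_growth(2.0, 2019, 2030)
    print(f"Implied annual growth: {rate:.1%}")
```

Under those assumptions, doubling over 11 years works out to roughly 6.5% growth per year.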

It’s clear that sustainability is critical to long-term cloud growth and that the cloud industry must begin adopting more power-efficient technology. Reducing power consumption will also lead to operational savings. In any case, companies that lead the way by shrinking their carbon footprint today will be prepared when such measures become mandated.

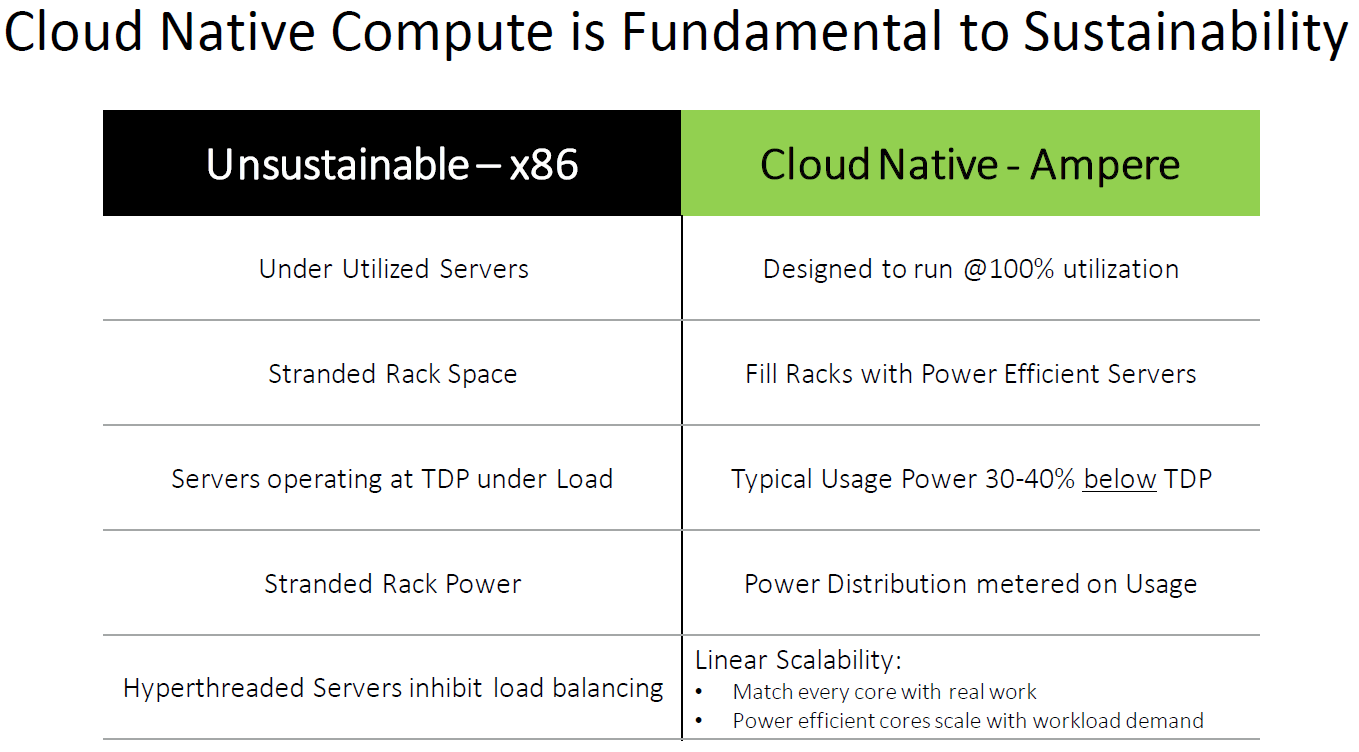

Table 1: Advantages of cloud native processing with Ampere cloud native platforms compared to legacy x86 clouds.

Cloud native technologies like Ampere’s enable CSPs to continue to increase compute density in the datacenter (see Table 1). At the same time, cloud native platforms provide a compelling performance/price/power advantage, enabling developers to reduce day-to-day operating costs while accelerating performance.

In Part 2 of this series, we will take a detailed look at what it takes to redeploy existing applications to a cloud native platform and accelerate your operations.

Check out the Ampere Computing Developer Centre for more relevant content and the latest news.
