
I don’t know if you’ve ever used Grammarly’s service for writing and editing content. But if you have, then you have no doubt seen the feature that detects the tone of your writing.

It’s an extremely helpful tool! It can be hard to know how something you write might be perceived by others, and this can help affirm or correct you. Sure, it’s some algorithm doing the work, and we know that not all AI-driven stuff is perfectly accurate. But as a gut check, it’s really useful.

Now imagine being able to do the same thing with audio files. How neat would it be to understand the underlying sentiments captured in audio recordings? Podcasters especially could stand to benefit from a tool like that, not to mention customer service teams and many other fields.

That’s what we’re going to accomplish in this article.

The idea is fairly straightforward:

- Upload an audio file.

- Convert the content from speech to text.

- Generate a score that indicates the type of sentiment it communicates.

But how do we actually build an interface that does all that? I’m going to introduce you to a few tools and show how they work together to create an audio sentiment analyzer.

But First: Why Audio Sentiment Analysis?

By harnessing the capabilities of an audio sentiment analysis tool, developers and data professionals can uncover valuable insights from audio recordings, changing the way we interpret emotions and sentiments in the digital age. Customer service, for example, is crucial for businesses aiming to deliver personable experiences. We can go beyond the limitations of text-based analysis to get a better idea of the feelings communicated by verbal exchanges in a variety of settings, including:

- Call centers
  Call center agents can gain real-time insights into customer sentiment, enabling them to provide personalized and empathetic support.

- Voice assistants
  Companies can improve their natural language processing algorithms to deliver more accurate responses to customer questions.

- Surveys
  Organizations can gain valuable insights and understand customer satisfaction levels, identify areas of improvement, and make data-driven decisions to enhance the overall customer experience.

And that’s just the tip of the iceberg for one industry. Audio sentiment analysis offers valuable insights across various industries. Consider healthcare as another example. Audio analysis could enhance patient care and improve doctor-patient interactions. Healthcare providers can gain a deeper understanding of patient feedback, identify areas for improvement, and optimize the overall patient experience.

Market research is another area that could benefit from audio analysis. Using audio speech data from interviews, focus groups, or even social media interactions where audio is used, researchers can leverage sentiments to gain valuable insights into a target audience’s reactions, which could inform everything from competitor analyses to brand refreshes.

I can also see audio analysis being used in the design process. Like, instead of asking stakeholders to write responses, how about asking them to record their verbal reactions and running those through an audio analysis tool? The possibilities are endless!

The Technical Foundations Of Audio Sentiment Analysis

Let’s explore the technical foundations that underpin audio sentiment analysis. We will delve into machine learning for natural language processing (NLP) tasks and look into Streamlit as a web application framework. These essential components lay the groundwork for the audio analyzer we’re making.

Natural Language Processing

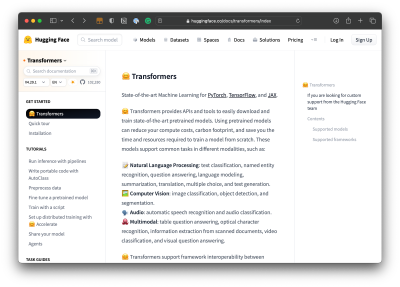

In our project, we leverage the Hugging Face Transformers library, a crucial component of our development toolkit. Developed by Hugging Face, the Transformers library equips developers with a vast collection of pre-trained models and advanced techniques, enabling them to extract valuable insights from audio data.

With Transformers, we can supply our audio analyzer with the ability to classify text, recognize named entities, answer questions, summarize text, translate, and generate text. Most notably, it also provides speech recognition and audio classification capabilities. Basically, we get an API that taps into pre-trained models so that our AI tool has a starting point rather than us having to train it ourselves.

UI Framework And Deployments

Streamlit is a web framework that simplifies the process of building interactive data applications. What I like about it is that it provides a set of predefined components that works well in the command line with the rest of the tools we’re using for the audio analyzer, not to mention we can deploy directly to their service to preview our work. It’s not required, as there may be other frameworks you are more familiar with.

Building The App

Now that we’ve established the two core components of our technical foundation, we will next explore implementation, such as

- Setting up the development environment,

- Performing sentiment analysis,

- Integrating speech recognition,

- Building the user interface, and

- Deploying the app.

Initial Setup

We begin by importing the libraries we need:

import os

import traceback

import streamlit as st

import speech_recognition as sr

from transformers import pipeline

We import os for system operations, traceback for error handling, streamlit (st) as our UI framework and for deployments, speech_recognition (sr) for audio transcription, and pipeline from Transformers to perform sentiment analysis using pre-trained models.

The project folder can be a pretty simple single directory with the following files:

- app.py: The main script file for the Streamlit application.

- requirements.txt: File specifying project dependencies.

- README.md: Documentation file providing an overview of the project.
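Before running the app, it can help to confirm that the dependencies are actually installed. Here is a minimal sketch of such a check. The module list is an assumption based on the imports used in this article, and missing_modules is a hypothetical helper rather than part of any library:

```python
from importlib.util import find_spec

# Import names the app relies on (assumed from the imports shown in this article).
REQUIRED_MODULES = ["streamlit", "speech_recognition", "transformers"]


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are installed.")
```

Note that the import name and the package name can differ: speech_recognition is installed from the SpeechRecognition package.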

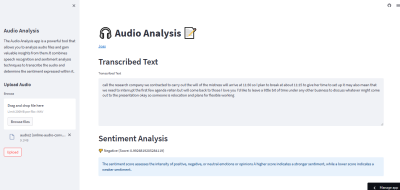

Creating The User Interface

Next, we set up the layout, courtesy of Streamlit’s framework. We can create a spacious UI by calling a wide layout:

st.set_page_config(layout="wide")

This ensures that the user interface provides ample space for displaying results and interacting with the tool.

Now let’s add some elements to the page using Streamlit’s functions. We can add a title and write some text:

# app.py
st.title("🎧 Audio Analysis 📝")
st.write("[Joas](https://huggingface.co/Pontonkid)")

I’d like to add a sidebar to the layout that can hold a description of the app as well as the form control for uploading an audio file. We’ll use the main area of the layout to display the audio transcription and sentiment score.

Here’s how we add a sidebar with Streamlit:

# app.py
st.sidebar.title("Audio Analysis")
st.sidebar.write("The Audio Analysis app is a powerful tool that allows you to analyze audio files and gain valuable insights from them. It combines speech recognition and sentiment analysis techniques to transcribe the audio and determine the sentiment expressed within it.")

And here’s how we add the form control for uploading an audio file:

# app.py
st.sidebar.header("Upload Audio")
audio_file = st.sidebar.file_uploader("Browse", type=["wav"])
upload_button = st.sidebar.button("Upload")

Notice that I’ve set up the file_uploader() so it only accepts WAV audio files. That’s just a preference, and you can specify the exact types of files you want to support. Also, notice how I added an Upload button to initiate the upload process.
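The file extension alone doesn’t guarantee that an upload is a readable WAV file. As a rough sketch, the uploaded bytes could be inspected with Python’s built-in wave module before transcription. Both wav_info and make_silent_wav are hypothetical helpers introduced here for illustration and are not part of the original app:

```python
import io
import wave


def wav_info(data: bytes) -> dict:
    """Read basic properties from WAV bytes.

    Raises wave.Error (or EOFError for truncated data) if the bytes
    are not a WAV file that the wave module can parse.
    """
    with wave.open(io.BytesIO(data), "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_rate": rate,
            "duration_seconds": frames / rate if rate else 0.0,
        }


def make_silent_wav(seconds: float = 1.0, rate: int = 16000) -> bytes:
    """Build a mono 16-bit WAV of silence in memory, for trying wav_info out."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * int(seconds * rate))
    return buffer.getvalue()
```

In the app, the bytes would likely come from audio_file.getvalue(), since Streamlit’s uploaded file object behaves like an in-memory byte buffer.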

Analyzing Audio Files

Here’s the fun part, where we get to extract text from an audio file, analyze it, and calculate a score that measures the sentiment level of what is said in the audio.

The plan is the following:

- Configure the tool to use a pre-trained NLP model fetched from the Hugging Face models hub.

- Integrate Transformers’ pipeline to perform sentiment analysis on the transcribed text.

- Print the transcribed text.

- Return a score based on the analysis of the text.

In the first step, we configure the tool to leverage a pre-trained model:

# app.py
def perform_sentiment_analysis(text):
    model_name = "distilbert-base-uncased-finetuned-sst-2-english"

This points to a model in the hub called DistilBERT. I like it because it’s focused on text classification and is pretty lightweight compared to some other models, making it ideal for a tutorial like this. But there are plenty of other models available in Transformers out there to consider.

Now we integrate the pipeline() function that does the sentiment analysis:

# app.py
def perform_sentiment_analysis(text):
    model_name = "distilbert-base-uncased-finetuned-sst-2-english"
    sentiment_analysis = pipeline("sentiment-analysis", model=model_name)

We’ve set that up to perform a sentiment analysis based on the DistilBERT model we’re using.

Next up, define a variable for the results that we get back from the analysis:

# app.py
def perform_sentiment_analysis(text):
    model_name = "distilbert-base-uncased-finetuned-sst-2-english"
    sentiment_analysis = pipeline("sentiment-analysis", model=model_name)
    results = sentiment_analysis(text)

From there, we’ll assign variables for the score label and the score itself before returning them for use:

# app.py
def perform_sentiment_analysis(text):
    model_name = "distilbert-base-uncased-finetuned-sst-2-english"
    sentiment_analysis = pipeline("sentiment-analysis", model=model_name)
    results = sentiment_analysis(text)
    sentiment_label = results[0]['label']
    sentiment_score = results[0]['score']
    return sentiment_label, sentiment_score

That’s our complete perform_sentiment_analysis() function!
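One thing to be aware of is that the function builds the pipeline every time it is called, which means the model is loaded again on each analysis. A possible variation, sketched here under the assumption that the same model is reused for every call, is to cache the pipeline. The get_analyzer and analyze names are hypothetical and not part of the original app:

```python
from functools import lru_cache

MODEL_NAME = "distilbert-base-uncased-finetuned-sst-2-english"


@lru_cache(maxsize=1)
def get_analyzer(model_name: str = MODEL_NAME):
    """Build the sentiment pipeline once and reuse it on later calls."""
    # Imported lazily so this module can be loaded without Transformers installed.
    from transformers import pipeline

    return pipeline("sentiment-analysis", model=model_name)


def analyze(text: str, analyzer=None):
    """Return (label, score) for text; a stand-in analyzer can be passed for testing."""
    if analyzer is None:
        analyzer = get_analyzer()
    result = analyzer(text)[0]
    return result["label"], result["score"]
```

Passing the analyzer in as an argument makes it possible to try the function with a simple stand-in, such as a lambda that returns a fixed result, without downloading a model. In a Streamlit app, the st.cache_resource decorator is another option that serves a similar purpose.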

Transcribing Audio Files

Next, we’re going to transcribe the content in the audio file into plain text. We’ll do that by defining a transcribe_audio() function that uses the speech_recognition library to transcribe the uploaded audio file:

# app.py
def transcribe_audio(audio_file):
    r = sr.Recognizer()
    with sr.AudioFile(audio_file) as source:
        audio = r.record(source)
    transcribed_text = r.recognize_google(audio)
    return transcribed_text

We initialize a recognizer object (r) from the speech_recognition library and open the uploaded audio file using the AudioFile class. We then record the audio using r.record(source). Finally, we use the Google Speech Recognition API through r.recognize_google(audio) to transcribe the audio and obtain the transcribed text.
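Transcription can fail in more than one way: the recognizer may not find intelligible speech, or the request to the speech service may fail. Here is a small sketch of how those two cases might be told apart. The transcribe_with_status helper is hypothetical, and the exception classes are passed in as arguments so the function can be tried without the library installed:

```python
def transcribe_with_status(audio_file, transcribe, unintelligible_error, request_error):
    """Call transcribe(audio_file) and return (text, error_message).

    Exactly one of the two returned values is None.
    """
    try:
        return transcribe(audio_file), None
    except unintelligible_error:
        return None, "No intelligible speech was found in the recording."
    except request_error as error:
        return None, f"The speech service request failed: {error}"


# Assumed usage in the app, with the function and library from this article:
# text, error = transcribe_with_status(
#     audio_file, transcribe_audio, sr.UnknownValueError, sr.RequestError
# )
```

In the speech_recognition library, recognize_google() raises sr.UnknownValueError when the speech is unintelligible and sr.RequestError when the request itself fails, so those would be the natural classes to pass in.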

In a main() function, we first check if an audio file is uploaded and the upload button is clicked. If both conditions are met, we proceed with audio transcription and sentiment analysis.

# app.py
def main():
    if audio_file and upload_button:
        try:
            transcribed_text = transcribe_audio(audio_file)
            sentiment_label, sentiment_score = perform_sentiment_analysis(transcribed_text)

Integrating Data With The UI

We have everything we need to display a sentiment analysis for an audio file in our app’s interface. We have the file uploader, a pre-trained language model to classify the text, a function for transcribing the audio into text, and a way to return a score. All we need to do now is hook it up to the app!

What I’m going to do is set up two headers and a text area from Streamlit, as well as variables for icons that represent the sentiment score results:

# app.py
st.header("Transcribed Text")
st.text_area("Transcribed Text", transcribed_text, height=200)
st.header("Sentiment Analysis")
negative_icon = "👎"
neutral_icon = "😐"
positive_icon = "👍"

Let’s use conditional statements to display the sentiment score based on which label corresponds to the returned result. If the sentiment label doesn’t match a given case, we use st.empty() to leave that section blank.

# app.py
if sentiment_label == "NEGATIVE":
    st.write(f"{negative_icon} Negative (Score: {sentiment_score})", unsafe_allow_html=True)
else:
    st.empty()

if sentiment_label == "NEUTRAL":
    st.write(f"{neutral_icon} Neutral (Score: {sentiment_score})", unsafe_allow_html=True)
else:
    st.empty()

if sentiment_label == "POSITIVE":
    st.write(f"{positive_icon} Positive (Score: {sentiment_score})", unsafe_allow_html=True)
else:
    st.empty()
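The three conditionals share the same shape, so one possible alternative is a lookup table keyed by label. This is a sketch, not the approach the app uses. SENTIMENT_DISPLAY and format_sentiment are hypothetical names, and rounding the score to two decimals is my own choice:

```python
# Maps each label to the icon and display name used in the app.
SENTIMENT_DISPLAY = {
    "NEGATIVE": ("👎", "Negative"),
    "NEUTRAL": ("😐", "Neutral"),
    "POSITIVE": ("👍", "Positive"),
}


def format_sentiment(label: str, score: float) -> str:
    """Build the one-line summary for a label, or an empty string if it is unknown."""
    entry = SENTIMENT_DISPLAY.get(label.upper())
    if entry is None:
        return ""
    icon, name = entry
    return f"{icon} {name} (Score: {score:.2f})"
```

The result could then be shown with a single st.write() call. It’s also worth knowing that the DistilBERT SST-2 model used here only returns POSITIVE or NEGATIVE labels, so the neutral case would only come into play with a different model.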

Streamlit has a handy st.info() element for displaying informational messages and statuses. Let’s tap into that to display an explanation of the sentiment score results:

# app.py
st.info(
    "The sentiment score measures how strongly positive, negative, or neutral the feelings or opinions are. "
    "A higher score indicates a positive sentiment, while a lower score indicates a negative sentiment."
)
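A caveat about that message: with the Transformers sentiment pipeline, the score is the model’s confidence in the label it returned, so a NEGATIVE result can carry a high score too. If a single scale running from negative to positive is what you’re after, the label and score could be combined. Here is a minimal sketch, where signed_polarity is a hypothetical helper and not part of the original app:

```python
def signed_polarity(label: str, score: float) -> float:
    """Map (label, confidence) onto a single scale from -1.0 to 1.0."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score is expected to be a probability between 0 and 1")
    if label == "POSITIVE":
        return score
    if label == "NEGATIVE":
        return -score
    # Any other label (for example, NEUTRAL) sits in the middle of the scale.
    return 0.0
```

With this mapping, a NEGATIVE label with a score of 0.75 becomes -0.75, which matches the "higher is more positive" reading described in the message.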

We should account for error handling, right? If any exceptions occur during the audio transcription and sentiment analysis processes, they’re caught in an except block. We display an error message using Streamlit’s st.error() function to inform users about the issue, and we also print the exception traceback using traceback.print_exc():

# app.py
except Exception as ex:
    st.error("Error occurred during audio transcription and sentiment analysis.")
    st.error(str(ex))
    traceback.print_exc()

This code block ensures that the app’s main() function is executed when the script is run as the main program:

# app.py
if __name__ == "__main__":
    main()

It’s common practice to wrap the execution of the main logic within this condition to prevent it from being executed when the script is imported as a module.

Deployments And Hosting

Now that we have successfully built our audio sentiment analysis tool, it’s time to deploy it and publish it live. For convenience, I’m using the Streamlit Community Cloud for deployments since I’m already using Streamlit as a UI framework. That said, I do think it’s a fantastic platform because it’s free and allows you to share your apps pretty easily.

But before we proceed, there are a few prerequisites:

- GitHub account
  If you don’t already have one, create a GitHub account. GitHub will serve as our code repository that connects to the Streamlit Community Cloud. This is where Streamlit gets the app files to serve.

- Streamlit Community Cloud account
  Sign up for a Streamlit Community Cloud account so you can deploy to the cloud.

Once you have your accounts set up, it’s time to dive into the deployment process:

- Create a GitHub repository.
  Create a new repository on GitHub. This repository will serve as a central hub for managing and collaborating on the codebase.

- Create the Streamlit application.
  Log into Streamlit Community Cloud and create a new application project, providing details like the name and pointing the app to the GitHub repository with the app files.

- Configure deployment settings.
  Customize the deployment environment by specifying a Python version and defining environment variables.
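To illustrate how an environment variable defined at deployment might be used, here is a small sketch that lets the model name be overridden without touching the code. The SENTIMENT_MODEL variable name and the model_from_env helper are assumptions made up for this example. Neither Streamlit nor the original app defines them:

```python
import os

DEFAULT_MODEL = "distilbert-base-uncased-finetuned-sst-2-english"


def model_from_env(default: str = DEFAULT_MODEL) -> str:
    """Read the model name from SENTIMENT_MODEL, falling back to the default."""
    value = os.environ.get("SENTIMENT_MODEL", "").strip()
    return value or default
```

The returned name could then be passed to pipeline() in place of the hard-coded model_name.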

That’s it! From here, Streamlit will automatically build and deploy our application when new changes are pushed to the main branch of the GitHub repository. You can see a working example of the audio analyzer I created: Live Demo.

Conclusion

There you have it! You have successfully built and deployed an app that recognizes speech in audio files, transcribes that speech into text, analyzes the text, and assigns a score that indicates whether the overall sentiment of the speech is positive or negative.

We used a tech stack that only consists of a language model (Transformers) and a UI framework (Streamlit) that has integrated deployment and hosting capabilities. That’s really all we needed to pull everything together!

So, what’s next? Imagine capturing sentiments in real time. That could open up new avenues for instant insights and dynamic applications. It’s an exciting opportunity to push the boundaries and take this audio sentiment analysis experiment to the next level.
