[ad_1]

On this fast tip, excerpted from Helpful Python, Stuart presentations you the way simple it’s to make use of an HTTP API from Python the use of a few third-party modules.

As a rule when operating with third-party information we’ll be getting access to an HTTP API. This is, we’ll be making an HTTP name to a internet web page designed to be learn by way of machines reasonably than by way of other folks. API information is usually in a machine-readable structure—generally both JSON or XML. (If we come throughout information in every other structure, we will use the ways described in different places on this guide to transform it to JSON, after all!) Let’s have a look at methods to use an HTTP API from Python.

The overall ideas of the use of an HTTP API are easy:

- Make an HTTP name to the URLs for the API, most likely together with some authentication knowledge (reminiscent of an API key) to turn that we’re licensed.

- Get again the knowledge.

- Do one thing helpful with it.

Python supplies sufficient capability in its usual library to do all this with none further modules, however it’ll make our lifestyles so much more straightforward if we pick out up a few third-party modules to clean over the method. The primary is the requests module. That is an HTTP library for Python that makes fetching HTTP information extra delightful than Python’s integrated urllib.request, and it may be put in with python -m pip set up requests.

To turn how simple it’s to make use of, we’ll use Pixabay’s API (documented right here). Pixabay is a inventory photograph web page the place the pictures are all to be had for reuse, which makes it an excessively at hand vacation spot. What we’ll focal point on here’s fruit. We’ll use the fruit photos we acquire afterward, when manipulating recordsdata, however for now we simply wish to in finding photos of fruit, as it’s tasty and just right for us.

To start out, we’ll take a handy guide a rough have a look at what photos are to be had from Pixabay. We’ll seize 100 photographs, briefly glance thru them, and make a choice those we wish. For this, we’ll desire a Pixabay API key, so we wish to create an account after which seize the important thing proven within the API documentation below “Seek Pictures”.

The requests Module

The elemental model of creating an HTTP request to an API with the requests module comes to setting up an HTTP URL, soliciting for it, after which studying the reaction. Right here, that reaction is in JSON structure. The requests module makes every of those steps simple. The API parameters are a Python dictionary, a get() serve as makes the decision, and if the API returns JSON, requests makes that to be had as .json at the reaction. So a easy name will appear to be this:

import requests

PIXABAY_API_KEY = "11111111-7777777777777777777777777"

base_url = "https://pixabay.com/api/"

base_params = {

"key": PIXABAY_API_KEY,

"q": "fruit",

"image_type": "photograph",

"class": "meals",

"safesearch": "true"

}

reaction = requests.get(base_url, params=base_params)

effects = reaction.json()

This may increasingly go back a Python object, because the API documentation suggests, and we will have a look at its portions:

>>> print(len(effects["hits"]))

20

>>> print(effects["hits"][0])

{'identification': 2277, 'pageURL': 'https://pixabay.com/footage/berries-fruits-food-blackberries-2277/', 'kind': 'photograph', 'tags': 'berries, culmination, meals', 'previewURL': 'https://cdn.pixabay.com/photograph/2010/12/13/10/05/berries-2277_150.jpg', 'previewWidth': 150, 'previewHeight': 99, 'webformatURL': 'https://pixabay.com/get/gc9525ea83e582978168fc0a7d4f83cebb500c652bd3bbe1607f98ffa6b2a15c70b6b116b234182ba7d81d95a39897605_640.jpg', 'webformatWidth': 640, 'webformatHeight': 426, 'largeImageURL': 'https://pixabay.com/get/g26eb27097e94a701c0569f1f77ef3975cf49af8f47e862d3e048ff2ba0e5e1c2e30fadd7a01cf2de605ab8e82f5e68ad_1280.jpg', 'imageWidth': 4752, 'imageHeight': 3168, 'imageSize': 2113812, 'perspectives': 866775, 'downloads': 445664, 'collections': 1688, 'likes': 1795, 'feedback': 366, 'user_id': 14, 'person': 'PublicDomainPictures', 'userImageURL': 'https://cdn.pixabay.com/person/2012/03/08/00-13-48-597_250x250.jpg'}

The API returns 20 hits according to web page, and we’d like 100 effects. To do that, we upload a web page parameter to our listing of params. Then again, we don’t wish to regulate our base_params each time, so find out how to means that is to create a loop after which make a reproduction of the base_params for every request. The integrated reproduction module does precisely this, so we will name the API 5 occasions in a loop:

for web page in vary(1, 6):

this_params = reproduction.reproduction(base_params)

this_params["page"] = web page

reaction = requests.get(base_url, params=params)

This may increasingly make 5 separate requests to the API, one with web page=1, the following with web page=2, and so forth, getting other units of symbol effects with every name. This can be a handy option to stroll thru a big set of API effects. Maximum APIs put in force pagination, the place a unmarried name to the API best returns a restricted set of effects. We then ask for extra pages of effects—just like taking a look thru question effects from a seek engine.

Since we wish 100 effects, shall we merely make a decision that that is 5 calls of 20 effects every, however it could be extra tough to stay soliciting for pages till now we have the hundred effects we’d like after which prevent. This saves the calls in case Pixabay adjustments the default choice of effects to fifteen or an identical. It additionally shall we us deal with the location the place there aren’t 100 photographs for our seek phrases. So now we have a whilst loop and increment the web page quantity each time, after which, if we’ve reached 100 photographs, or if there are not any photographs to retrieve, we escape of the loop:

photographs = []

web page = 1

whilst len(photographs) < 100:

this_params = reproduction.reproduction(base_params)

this_params["page"] = web page

reaction = requests.get(base_url, params=this_params)

if now not reaction.json()["hits"]: destroy

for lead to reaction.json()["hits"]:

photographs.append({

"pageURL": outcome["pageURL"],

"thumbnail": outcome["previewURL"],

"tags": outcome["tags"],

})

web page += 1

This manner, once we end, we’ll have 100 photographs, or we’ll have all of the photographs if there are fewer than 100, saved within the photographs array. We will then cross directly to do one thing helpful with them. However sooner than we do this, let’s discuss caching.

Caching HTTP Requests

It’s a good suggestion to steer clear of making the similar request to an HTTP API greater than as soon as. Many APIs have utilization limits so as to steer clear of them being overtaxed by way of requesters, and a request takes effort and time on their section and on ours. We will have to take a look at not to make wasteful requests that we’ve completed sooner than. Thankfully, there’s an invaluable approach to do that when the use of Python’s requests module: set up requests-cache with python -m pip set up requests-cache. This may increasingly seamlessly report any HTTP calls we make and save the effects. Then, later, if we make the similar name once more, we’ll get again the in the neighborhood stored outcome with out going to the API for it in any respect. This protects each time and bandwidth. To make use of requests_cache, import it and create a CachedSession, after which as a substitute of requests.get use consultation.get to fetch URLs, and we’ll get the good thing about caching with out a further effort:

import requests_cache

consultation = requests_cache.CachedSession('fruit_cache')

...

reaction = consultation.get(base_url, params=this_params)

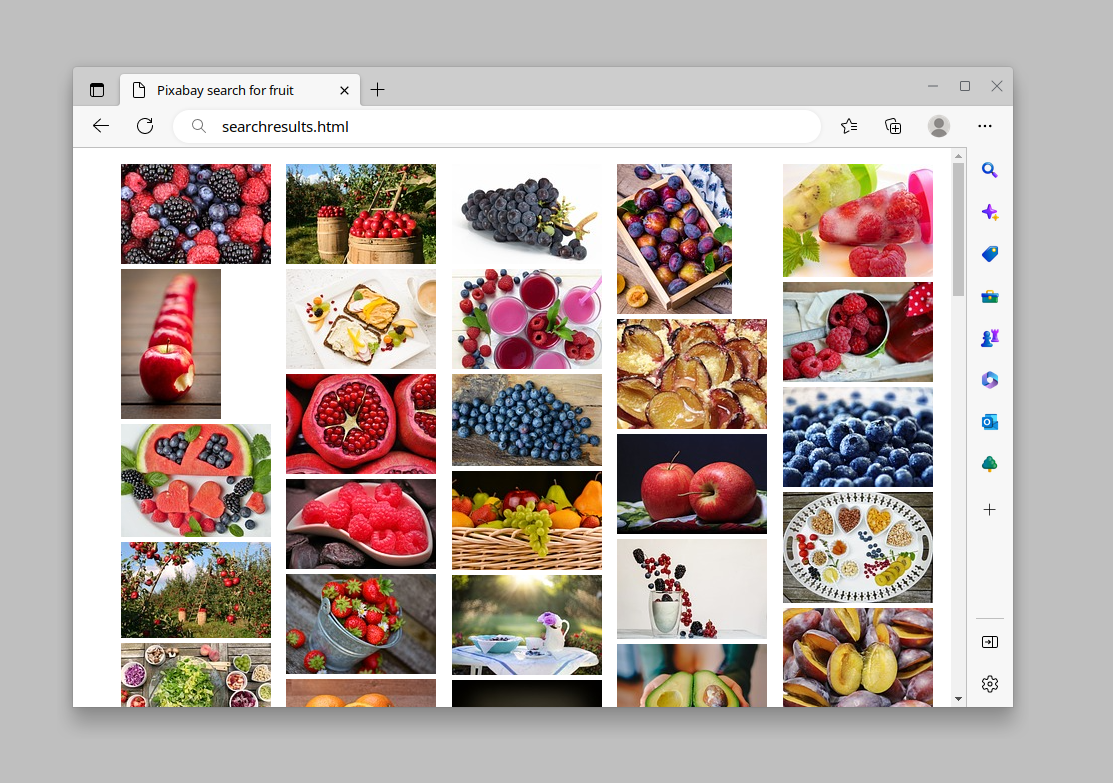

Making Some Output

To look the result of our question, we wish to show the pictures someplace. A handy approach to do that is to create a easy HTML web page that presentations every of the pictures. Pixabay supplies a small thumbnail of every symbol, which it calls previewURL within the API reaction, so shall we put in combination an HTML web page that presentations all of those thumbnails and hyperlinks them to the primary Pixabay web page—from which shall we make a choice to obtain the pictures we wish and credit score the photographer. So every symbol within the web page would possibly appear to be this:

<li>

<a href="https://pixabay.com/footage/berries-fruits-food-blackberries-2277/">

<img src="https://cdn.pixabay.com/photograph/2010/12/13/10/05/berries-2277_150.jpg" alt="berries, culmination, meals">

</a>

</li>

We will assemble that from our photographs listing the use of a listing comprehension, after which sign up for in combination all of the effects into one giant string with "n".sign up for():

html_image_list = [

f"""<li>

<a href="https://www.sitepoint.com/python-fetching-data-http-api/{image["pageURL"]}">

<img src="https://www.sitepoint.com/python-fetching-data-http-api/{symbol["thumbnail']}" alt="https://www.sitepoint.com/python-fetching-data-http-api/{symbol["tags"]}">

</a>

</li>

"""

for symbol in photographs

]

html_image_list = "n".sign up for(html_image_list)

At that time, if we write out an excessively undeniable HTML web page containing that listing, it’s simple to open that during a internet browser for a fast assessment of all of the seek effects we were given from the API, and click on any one in every of them to leap to the entire Pixabay web page for downloads:

html = f"""<!doctype html>

<html><head><meta charset="utf-8">

<identify>Pixabay seek for {base_params['q']}</identify>

<genre>

ul {{

list-style: none;

line-height: 0;

column-count: 5;

column-gap: 5px;

}}

li {{

margin-bottom: 5px;

}}

</genre>

</head>

<frame>

<ul>

{html_image_list}

</ul>

</frame></html>

"""

output_file = f"searchresults-{base_params['q']}.html"

with open(output_file, mode="w", encoding="utf-8") as fp:

fp.write(html)

print(f"Seek effects abstract written as {output_file}")

This newsletter is excerpted from Helpful Python, to be had on SitePoint Top rate and from book shops.

[ad_2]