
What Is Node.js?

- Node.js is an open-source, server-side runtime environment built on the V8 JavaScript engine developed by Google for use in Chrome web browsers. It allows developers to run JavaScript code outside of a web browser, making it possible to use JavaScript for server-side scripting and building scalable network applications.

- Node.js uses a non-blocking, event-driven I/O model, making it highly efficient and well-suited for handling multiple concurrent connections and I/O operations. This event-driven architecture, along with its single-threaded nature, allows Node.js to handle many connections efficiently, making it ideal for real-time applications, chat services, APIs, and web servers with high concurrency requirements.

- One of the key advantages of Node.js is that it allows developers to use the same language (JavaScript) on both the server and client sides, simplifying the development process and making it easier to share code between the front-end and back-end.

- Node.js has a vibrant ecosystem with a vast array of third-party packages available through its package manager, npm, which makes it easy to integrate additional functionality into your applications.

Overall, Node.js has become immensely popular and widely adopted for web development due to its speed, scalability, and flexibility, making it a powerful tool for building modern, real-time web applications and services.

Efficiently Handling Tasks With an Event-Driven, Asynchronous Approach

Imagine you're a chef in a busy restaurant, and many orders are coming in from different tables.

- Event-Driven: Instead of waiting for one order to be cooked and served before taking the next one, you have a notepad where you quickly jot down each table's order as it arrives. Then you prepare each dish one by one whenever you have time.

- Asynchronous: When you're cooking a dish that takes some time, like baking a pizza, you don't just wait for it to be ready. Instead, you start preparing the next dish while the pizza is in the oven. This way, you can handle multiple orders simultaneously and make the most efficient use of your time.

Similarly, when Node.js receives requests from users or needs to perform time-consuming tasks like reading files or making network requests, it doesn't wait for each request to finish before handling the next one. It quickly notes down what needs to be done and moves on to the next task. Once the time-consuming tasks are finished, Node.js goes back and completes the work for each request one by one, efficiently managing multiple tasks concurrently without getting stuck waiting.

This event-driven asynchronous approach allows the program to handle many tasks or requests simultaneously, just like a chef managing and cooking multiple orders at once in a bustling restaurant. It makes Node.js highly responsive and efficient, making it a powerful tool for building fast and scalable applications.
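The chef analogy can be sketched in a few lines of JavaScript. This is only an illustration: takeOrder and kitchenLog are made-up names, and setTimeout stands in for slow I/O such as a file read or a network call.

```javascript
// Hypothetical kitchen example; takeOrder and kitchenLog are illustrative names.
const kitchenLog = [];

function takeOrder(table, cookTimeMs) {
  // Noting the order happens immediately.
  kitchenLog.push(`noted order for table ${table}`);
  // setTimeout stands in for slow I/O; the callback runs later,
  // after the current synchronous code has finished.
  setTimeout(() => {
    kitchenLog.push(`served table ${table}`);
    console.log(`served table ${table}`);
  }, cookTimeMs);
}

takeOrder(1, 30); // slow dish
takeOrder(2, 10); // quick dish
kitchenLog.push('all orders noted');

// At this point both orders are noted, but neither dish has been served yet.
console.log(kitchenLog.join(' | '));
```

Both orders are noted before either dish is served, and the quicker dish is served first even though it was ordered second, because nothing blocks while the timers are pending.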

Handling Tasks With Speed and Efficiency

Imagine you have two ways to handle many tasks at once, like helping a lot of people with their questions.

- Node.js is like a super-fast, smart helper who can handle many questions at the same time without getting overwhelmed. It quickly listens to each person, writes down their request, and smoothly moves on to the next person while waiting for answers. This way, it efficiently manages many requests without getting stuck on one for too long.

- Multi-threaded Java is like having a group of helpers, where each helper can handle one question at a time. Whenever someone comes with a question, a separate helper is assigned to assist that person. However, if too many people arrive at once, the helpers may get a bit crowded, and some people may need to wait for their turn.

So, Node.js is excellent for quickly handling many I/O-bound tasks at once, as in real-time applications or chat services. On the other hand, multi-threaded Java is better for handling more complex tasks that need a lot of calculations or data processing, since CPU-heavy work would block Node.js's single main thread. The choice depends on what kind of tasks you need to handle.

How To Install Node.js

To install Node.js, you can follow these steps depending on your operating system:

Install Node.js on Windows:

- Visit the official Node.js website.

- On the homepage, you will see two versions available for download: LTS (Long-Term Support) and Current. For most users, it's recommended to download the LTS version as it is more stable.

- Click on the "LTS" button to download the installer for the LTS version.

- Run the downloaded installer and follow the installation wizard.

- During the installation, you can choose the default settings or customize the installation path if needed. Once the installation is complete, you can verify it by opening the Command Prompt or PowerShell and typing node -v and npm -v to check the installed Node.js version and npm (Node Package Manager) version, respectively.

Install Node.js on macOS:

- Visit the official Node.js website.

- On the homepage, you will see two versions available for download: LTS (Long-Term Support) and Current. For most users, it's recommended to download the LTS version as it is more stable.

- Click on the "LTS" button to download the installer for the LTS version.

- Run the downloaded installer and follow the installation wizard. Once the installation is complete, you can verify it by opening Terminal and typing node -v and npm -v to check the installed Node.js version and npm version, respectively.

Install Node.js on Linux:

The way to install Node.js on Linux varies depending on the distribution you are using. Below are some general instructions:

Using Package Manager (Recommended):

- For Debian/Ubuntu-based distributions, open Terminal and run:

sudo apt update

sudo apt install nodejs npm

- For Red Hat/Fedora-based distributions, open Terminal and run:

sudo dnf install nodejs npm

- For Arch Linux, open Terminal and run:

sudo pacman -S nodejs npm

Using Node Version Manager (nvm):

Alternatively, you can use nvm (Node Version Manager) to manage Node.js versions on Linux. This allows you to easily switch between different Node.js versions. First, install nvm by running the following command in Terminal:

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.0/install.sh | bash

Make sure to close and reopen the terminal after installation, or run source ~/.bashrc or source ~/.zshrc depending on your shell.

Now, you can install the latest LTS version of Node.js with:

nvm install --lts

To switch to the LTS version:

nvm use --lts

You can verify the installation by typing node -v and npm -v.

Whichever method you choose, once Node.js is installed, you can start building and running Node.js applications on your system.

Essential Node.js Modules: Building Robust Applications With Reusable Code

In Node.js, modules are reusable pieces of code that can be exported and imported into other parts of your application. They are an essential part of the Node.js ecosystem and help in organizing and structuring large applications. Here are some key modules in Node.js:

- Built-in Core Modules: Node.js comes with several core modules that provide essential functionality. Examples include:

  - fs: For working with the file system.

  - http: For creating HTTP servers and clients.

  - path: For handling file paths.

  - os: For interacting with the operating system.

- Third-party Modules: The Node.js ecosystem has a vast collection of third-party modules available through the npm (Node Package Manager) registry. These modules provide various functionalities, such as:

  - Express.js: A popular web application framework for building web servers and APIs.

  - Mongoose: An ODM (Object Data Mapper) for MongoDB, simplifying database interactions.

  - Axios: A library for making HTTP requests to APIs.

- Custom Modules: You can create your own modules in Node.js to encapsulate and reuse specific pieces of functionality across your application. To create a custom module, use the module.exports or exports object to expose functions, objects, or classes.

- Event Emitter: The events module is built in and allows you to create and work with custom event emitters. This module is especially useful for handling asynchronous operations and event-driven architectures.

- Readline: The readline module provides an interface for reading input from a readable stream, such as the command-line interface (CLI).

- Buffer: The buffer module is used for handling binary data, such as reading or writing raw data from a stream.

- Crypto: The crypto module offers cryptographic functionality like creating hashes, encrypting data, and generating secure random numbers.

- Child Process: The child_process module allows you to create and interact with child processes, letting you run external commands and scripts.

- URL: The url module helps in parsing and manipulating URLs.

- Util: The util module provides various utility functions for working with objects, formatting strings, and handling errors.

These are just a few examples of key modules in Node.js. The Node.js ecosystem is continuously evolving, and developers can find a wide range of modules to solve various problems and streamline application development.
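As a quick illustration of how a few of these core modules are used together, here is a minimal sketch. The file name, event name, and URL are placeholders chosen for the example, not part of any real project.

```javascript
const path = require('path');
const os = require('os');
const crypto = require('crypto');
const { EventEmitter } = require('events');

// path: build and inspect file paths in a platform-independent way.
const configFile = path.join('config', 'settings.json'); // hypothetical file name
const extension = path.extname(configFile);

// crypto: compute a SHA-256 hash as a hex string.
const digest = crypto.createHash('sha256').update('hello').digest('hex');

// events: register a listener, then emit an event to trigger it.
const orders = new EventEmitter();
const received = [];
orders.on('order', (item) => received.push(item));
orders.emit('order', 'pizza'); // listeners run synchronously during emit

// URL: parse a URL and read a query parameter (URL is a global in Node.js).
const parsed = new URL('https://example.com/search?q=node');
const query = parsed.searchParams.get('q');

console.log({ extension, digestLength: digest.length, received, query, platform: os.platform() });
```

A custom module works the same way from the consumer's side: a file assigns something to module.exports, and other files load it with require('./that-file').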

Node Package Manager (NPM): Simplifying Package Management in Node.js Projects

- Node Package Manager (NPM) is an integral part of the Node.js ecosystem.

- As a package manager, it handles the installation, updating, and removal of libraries, packages, and dependencies within Node.js projects.

- With NPM, developers can conveniently extend their Node.js applications by integrating various frameworks, libraries, utility modules, and more.

- By using simple commands like npm install package-name, developers can easily incorporate packages into their Node.js projects.

- Additionally, NPM allows project dependencies to be specified in the package.json file, which makes it straightforward to share and distribute an application together with its required dependencies.

Understanding package.json and package-lock.json in Node.js Projects

package.json and package-lock.json are two essential files used in Node.js projects to manage dependencies and package versions.

- package.json: package.json is a metadata file that provides information about the Node.js project, its dependencies, and various configurations. It is typically located in the root directory of the project. When you create a new Node.js project (for example with npm init) or add dependencies to an existing one (with npm install), package.json is automatically generated or updated. Key information in package.json includes:

  - Project name, version, and description.

  - Entry point of the application (the main script to run).

  - List of dependencies required for the project to function.

  - List of development dependencies (devDependencies) needed during development, such as testing libraries.

Developers can manually modify the package.json file to add or remove dependencies, update versions, and define various scripts for running tasks like testing, building, or starting the application.

- package-lock.json: package-lock.json is another JSON file generated automatically by NPM. It is intended to provide a detailed, deterministic description of the dependency tree in the project. The purpose of this file is to ensure consistent, reproducible installations of dependencies across different environments. package-lock.json contains:

  - The exact versions of all dependencies and their sub-dependencies used in the project.

  - The resolved URLs for downloading each dependency.

  - Dependency version ranges specified in package.json are "locked" to specific versions in this file.

When package-lock.json is present in the project, NPM uses it to install dependencies with exact versions, which helps avoid unintended changes in dependency versions between installations.

Both package.json and package-lock.json are crucial for Node.js projects. The former defines the overall project configuration, while the latter ensures consistent and reproducible dependency installations. It is best practice to commit both files to version control to maintain consistency across development and deployment environments.
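To make the fields described above concrete, here is a small sketch that parses a sample package.json and lists its dependencies. The manifest content, including the package versions, is invented for illustration, and listDependencies is a hypothetical helper.

```javascript
// Hypothetical package.json content; names and versions are illustrative only.
const samplePackageJson = `{
  "name": "my-express-app",
  "version": "1.0.0",
  "description": "Sample manifest for illustration",
  "main": "app.js",
  "scripts": { "start": "node app.js", "test": "mocha tests/*.test.js" },
  "dependencies": { "express": "^4.18.2" },
  "devDependencies": { "mocha": "^10.2.0" }
}`;

const pkg = JSON.parse(samplePackageJson);

// Hypothetical helper: split a manifest into runtime and development dependencies.
function listDependencies(manifest) {
  return {
    runtime: Object.keys(manifest.dependencies || {}),
    dev: Object.keys(manifest.devDependencies || {}),
  };
}

console.log(pkg.main, listDependencies(pkg));
```

A range such as ^4.18.2 allows any compatible 4.x release at or above 4.18.2; package-lock.json records the one exact version that was actually installed.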

How To Create an Express Node.js Application

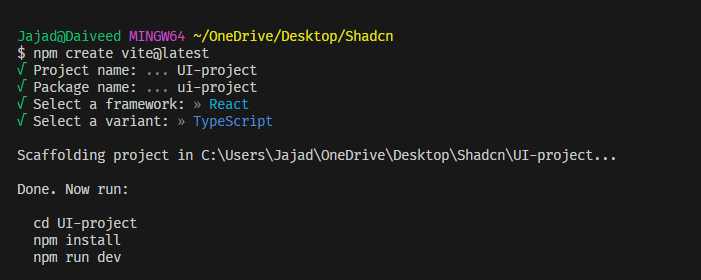

Begin by creating a new directory for your project and navigating to it:

mkdir my-express-app

cd my-express-app

Initialize npm in your project directory to create a package.json file:

npm init

Install Express as a dependency for your project:

npm install express

Create the main file (e.g., app.js or index.js) that will serve as the entry point for your Express app.

In your entry point file, require Express and set up your app by defining routes and middleware. Here's a basic example:

// app.js
const express = require('express');
const app = express();

// Define a simple route
app.get('/', (req, res) => {
  res.send('Hello, Express!');
});

// Start the server
const port = 3000;
app.listen(port, () => {
  console.log(`Server is running on http://localhost:${port}`);
});

Save the changes in your entry point file and run your Express app:

node app.js

Access your Express app by opening a web browser and navigating to http://localhost:3000. You should see the message "Hello, Express!" displayed.

With these steps, you have successfully set up a basic Express Node.js application. From here, you can further develop your app by adding more routes and middleware and integrating it with databases or other services. The official Express documentation offers a wealth of resources to help you build powerful and feature-rich applications.
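For comparison, the same "Hello" route can be sketched with only the built-in http module, which gives a rough idea of the routing work Express takes care of. This is a simplified illustration rather than a description of how Express is implemented; handleRequest and createServer are names chosen for this sketch.

```javascript
const http = require('http');

// Hand-written routing: check the method and URL ourselves.
function handleRequest(req, res) {
  if (req.method === 'GET' && req.url === '/') {
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    res.end('Hello, Express!');
  } else {
    res.writeHead(404, { 'Content-Type': 'text/plain' });
    res.end('Not found');
  }
}

// Creating the server does not start it; call listen() to accept connections.
function createServer() {
  return http.createServer(handleRequest);
}

// Usage (not executed here): createServer().listen(3000);
```

Every additional route would need another branch in handleRequest, which is the kind of bookkeeping that app.get() and friends remove.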

Node.js Project Structure

Create a well-organized package structure for your Node.js app. Follow the suggested layout:

my-node-app
  |- app/
    |- controllers/
    |- models/
    |- routes/
    |- views/
    |- services/
  |- config/
  |- public/
    |- css/
    |- js/
    |- images/
  |- node_modules/
  |- app.js (or index.js)
  |- package.json

Explanation of the Package Structure:

- app/: This directory contains the core components of your Node.js application.

- controllers/: Store the logic for handling HTTP requests and responses. Each controller file should correspond to specific routes or groups of related routes.

- models/: Define data models and manage interactions with the database or other data sources.

- routes/: Define application routes and connect them to corresponding controllers. Each route file manages a specific group of routes.

- views/: House template files if you're using a view engine like EJS or Pug.

- services/: Include service modules that handle business logic, external API calls, or other complex operations.

- config/: Contain configuration files for your application, such as database settings, environment variables, or other configurations.

- public/: This directory stores static assets like CSS, JavaScript, and images, which will be served to clients.

- node_modules/: The folder where npm installs dependencies for your project. This directory is automatically created when you run npm install.

- app.js (or index.js): The main entry point of your Node.js application, where you initialize the app and set up middleware.

- package.json: The file that holds metadata about your project and its dependencies.

By adhering to this package structure, you can maintain a well-organized application as it grows. Separating concerns into distinct directories makes your codebase more modular, scalable, and easier to maintain. As your app becomes more complex, you can expand each directory and introduce additional ones to cater to specific functionality.
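The separation between services/, controllers/, and routes/ might look like the sketch below. It is condensed into one listing for readability; the file names in the comments, the user data, and the function names are all hypothetical.

```javascript
// app/services/userService.js (hypothetical): business logic, no HTTP details.
const userService = {
  findById(id) {
    const users = { 1: { id: 1, name: 'John' } }; // stand-in for a database query
    return users[id] || null;
  },
};

// app/controllers/userController.js (hypothetical): translates between
// HTTP request/response objects and the service layer.
function getUser(req, res) {
  const user = userService.findById(req.params.id);
  if (!user) {
    return res.status(404).json({ error: 'User not found' });
  }
  return res.json(user);
}

// app/routes/userRoutes.js (hypothetical) would then only wire things up, e.g.:
//   router.get('/users/:id', getUser);
```

Because the controller only relies on req.params, res.status(), and res.json(), it can be exercised in a test with plain stub objects, and the service can be tested without any HTTP objects at all.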

Key Dependencies for a Node.js Express App: Essential Packages and Optional Components

Below are the key dependencies, including npm packages, commonly used in a Node.js Express app along with the REST client (axios) and JSON parser (body-parser):

- express: Express.js web framework

- body-parser: Middleware for parsing JSON and URL-encoded data

- compression: Middleware for gzip compression

- cookie-parser: Middleware for parsing cookies

- axios: REST client for making HTTP requests

- ejs (optional): Template engine for rendering dynamic content

- pug (optional): Template engine for rendering dynamic content

- express-handlebars (optional): Template engine for rendering dynamic content

- mongodb (optional): MongoDB driver for database connectivity

- mongoose (optional): ODM for MongoDB

- sequelize (optional): ORM for SQL databases

- passport (optional): Authentication middleware

- morgan (optional): Logging middleware

Remember, the inclusion of packages like ejs, pug, mongodb, mongoose, sequelize, passport, and morgan depends on the specific requirements of your project. Install only the packages you need for your Node.js Express application.

Understanding Middleware in Node.js: The Power of Intermediaries in Web Applications

- In simple terms, middleware in Node.js is a software component that sits between the incoming request and the outgoing response in a web application. It acts as a bridge that processes and manipulates data as it flows through the application.

- When a client makes a request to a Node.js server, the middleware intercepts the request before it reaches the final route handler. It can perform various tasks like logging, authentication, data parsing, error handling, and more. Once the middleware finishes its work, it either passes the request to the next middleware or sends a response back to the client, effectively completing its role as an intermediary.

- Middleware is a powerful concept in Node.js, as it allows developers to add reusable and modular functionality to their applications, making the code more organized and maintainable. It enables separation of concerns, as different middleware can handle specific tasks, keeping the route handlers clean and focused on the main application logic.

Now, create an app.js file (or any other filename you prefer) and add the following code:

// Import required modules
const express = require('express');

// Create an Express application
const app = express();

// Middleware function to log incoming requests
const requestLogger = (req, res, next) => {
  console.log(`Received ${req.method} request for ${req.url}`);
  next(); // Call next to pass the request to the next middleware/route handler
};

// Middleware function to add a custom header to the response
const customHeaderMiddleware = (req, res, next) => {
  res.setHeader('X-Custom-Header', 'Hello from Middleware!');
  next(); // Call next to pass the request to the next middleware/route handler
};

// Register middleware to be used for all routes
app.use(requestLogger);
app.use(customHeaderMiddleware);

// Route handler for the home page
app.get('/', (req, res) => {
  res.send('Hello, this is the home page!');
});

// Route handler for another endpoint
app.get('/about', (req, res) => {
  res.send('This is the about page.');
});

// Start the server
const port = 3000;
app.listen(port, () => {
  console.log(`Server started on http://localhost:${port}`);
});

In this code, we have created two middleware functions: requestLogger and customHeaderMiddleware. The requestLogger logs the details of incoming requests, while customHeaderMiddleware adds a custom header to the response.

These middleware functions are registered using the app.use() method, which ensures they will be executed for all incoming requests. Then, we define two route handlers using app.get() to handle requests for the home page and the about page.

When you run this application and visit http://localhost:3000/ or http://localhost:3000/about in your browser, you'll see the middleware in action, logging each request.
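To see why each middleware has to call next(), it can help to look at a stripped-down chain runner. The sketch below is a simplified stand-in written for this explanation, not Express's actual implementation; runMiddleware and the stub request/response objects are assumptions of the example.

```javascript
// Simplified stand-in for how a framework walks its middleware stack.
function runMiddleware(stack, req, res) {
  let index = 0;
  function next() {
    const layer = stack[index];
    index += 1;
    if (layer) {
      layer(req, res, next); // each layer decides whether to call next()
    }
  }
  next();
}

const trace = [];

const logger = (req, res, next) => {
  trace.push(`log ${req.method} ${req.url}`);
  next();
};

const addHeader = (req, res, next) => {
  res.headers['X-Custom-Header'] = 'Hello from Middleware!';
  next();
};

// The final handler sends the response and does not call next().
const homeHandler = (req, res) => {
  trace.push('handler');
  res.body = 'Hello, this is the home page!';
};

const demoRes = { headers: {}, body: null };
runMiddleware([logger, addHeader, homeHandler], { method: 'GET', url: '/' }, demoRes);

console.log(trace, demoRes);
```

If a middleware neither calls next() nor sends a response, the chain simply stops there, which in a real server shows up as a request that never completes.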

How to Unit Test a Node.js Express App

Unit testing is essential to ensure the correctness and reliability of your Node.js Express app. To unit test your app, you can use popular testing frameworks like Mocha and Jest. Here's a step-by-step guide on how to set up and perform unit tests for your Node.js Express app:

Step 1: Install Testing Dependencies

In your project directory, install the testing frameworks and related dependencies using npm or yarn:

npm install mocha chai chai-http supertest --save-dev

- mocha: The testing framework that allows you to define and run tests.

- chai: An assertion library that provides various assertion styles to make your tests more expressive.

- chai-http: A Chai plugin for making HTTP requests against your app in tests; the example below uses it.

- supertest: A library that simplifies testing HTTP requests and responses.

Step 2: Organize Your App for Testing

To make your app testable, it's a good practice to create separate modules for routes, services, and any other logic that you want to test independently.

Step 3: Write Test Cases

Create test files with .test.js or .spec.js extensions in a separate directory, for example, tests/. In these files, define the test cases for the various components of your app.

Here is an example test case using Mocha, Chai, and chai-http:

// tests/app.test.js
const chai = require('chai');
const chaiHttp = require('chai-http');
const app = require('../app'); // Import your Express app here (app.js must export it with module.exports = app)

// Assertion style and HTTP testing middleware setup
chai.use(chaiHttp);
const expect = chai.expect;

describe('Example Route Tests', () => {
  it('should return a welcome message', (done) => {
    chai
      .request(app)
      .get('/')
      .end((err, res) => {
        expect(res).to.have.status(200);
        expect(res.text).to.equal('Hello, Express!'); // Assuming this is your expected response
        done();
      });
  });
});

// Add more test cases for other routes, services, or modules as needed.

Step 4: Run Tests

To run the tests, execute the following command in your terminal:

npx mocha tests/*.test.js

The test runner (Mocha) will run all the test files ending with .test.js in the tests/ directory.

Additional Tips

- Always aim to write small, isolated tests that cover specific scenarios.

- Use mocks and stubs when testing components that have external dependencies like databases or APIs, to control the test environment and avoid external interactions.

- Regularly run tests during development and before deploying to ensure the stability of your app.

By following these steps and writing comprehensive unit tests, you can gain confidence in the reliability of your Node.js Express app and easily detect and fix issues during development.

Handling Asynchronous Operations in JavaScript and TypeScript: Callbacks, Promises, and Async/Await

Asynchronous operations in JavaScript and TypeScript can be managed through different techniques: callbacks, Promises, and async/await. Each approach serves the purpose of handling non-blocking tasks but with varying syntax and methodologies. Let's explore these differences:

Callbacks

Callbacks represent the traditional method for handling asynchronous operations in JavaScript. They involve passing a function as an argument to an asynchronous function, which gets executed upon completion of the operation. Callbacks allow you to handle the result or error of the operation within the callback function. Example using callbacks:

function fetchData(callback) {
  // Simulate an asynchronous operation
  setTimeout(() => {
    const data = { name: 'John', age: 30 };
    callback(data);
  }, 1000);
}

// Using the fetchData function with a callback
fetchData((data) => {
  console.log(data); // Output: { name: 'John', age: 30 }
});

Promises

Promises offer a more modern approach to managing asynchronous operations in JavaScript. A Promise represents a value that may not be available immediately but will resolve to a value (or reject with an error) in the future. Promises provide methods like then() and catch() to handle the resolved value or error. Example using Promises:

function fetchData() {
  return new Promise((resolve, reject) => {
    // Simulate an asynchronous operation
    setTimeout(() => {
      const data = { name: 'John', age: 30 };
      resolve(data);
    }, 1000);
  });
}

// Using the fetchData function with a Promise
fetchData()
  .then((data) => {
    console.log(data); // Output: { name: 'John', age: 30 }
  })
  .catch((error) => {
    console.error(error);
  });

Async/Await

Async/await is a syntax introduced in ES2017 (ES8) that makes handling Promises more concise and readable. Placing the async keyword before a function declaration indicates that the function contains asynchronous operations and always returns a Promise. The await keyword is used before a Promise to pause the execution of the function until the Promise settles; if the Promise rejects, the error is thrown and can be handled with try/catch. Example using async/await:

function fetchData() {
  return new Promise((resolve) => {
    // Simulate an asynchronous operation
    setTimeout(() => {
      const data = { name: 'John', age: 30 };
      resolve(data);
    }, 1000);
  });
}

// Using the fetchData function with async/await
async function fetchDataAsync() {
  try {
    const data = await fetchData();
    console.log(data); // Output: { name: 'John', age: 30 }
  } catch (error) {
    console.error(error);
  }
}

fetchDataAsync();

In conclusion, callbacks are the traditional method, Promises offer a more modern approach, and async/await provides a cleaner syntax for handling asynchronous operations in JavaScript and TypeScript. While each approach serves the same purpose, the choice depends on personal preference and the project's specific requirements. Async/await is generally considered the most readable and straightforward option for managing asynchronous code in modern JavaScript applications.
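The three styles also interoperate. As a sketch, a callback-style function can be wrapped with util.promisify and then awaited, and several lookups can run concurrently with Promise.all. Note that promisify expects Node's error-first callback convention, callback(error, result), which differs slightly from the single-argument callback in the example above; fetchUser and loadUsers are hypothetical names.

```javascript
const { promisify } = require('util');

// Callback-style function using the error-first convention: callback(error, result).
function fetchUser(id, callback) {
  // setTimeout simulates an asynchronous lookup.
  setTimeout(() => {
    if (id <= 0) {
      callback(new Error('invalid id'));
      return;
    }
    callback(null, { id, name: `user-${id}` });
  }, 10);
}

// promisify wraps the callback API so that it returns a Promise instead.
const fetchUserAsync = promisify(fetchUser);

async function loadUsers(ids) {
  // All lookups are started first, then awaited together, so they overlap in time.
  return Promise.all(ids.map((id) => fetchUserAsync(id)));
}

loadUsers([1, 2])
  .then((users) => console.log(users.map((user) => user.name)))
  .catch((error) => console.error(error.message));
```

If any single lookup fails, Promise.all rejects with that error, so the catch() handler (or a try/catch around await) sees it.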

How to Dockerize a Node.js App

FROM node:14
ARG APPID=<APP_NAME>
WORKDIR /app
COPY package.json package-lock.json ./
RUN npm ci --production
COPY ./dist/apps/${APPID}/ .
COPY apps/${APPID}/src/config ./config/
COPY ./reference/openapi.yaml ./reference/
COPY ./resources ./resources/
ARG PORT=5000
ENV PORT ${PORT}
EXPOSE ${PORT}
COPY .env.template ./.env
ENTRYPOINT ["node", "main.js"]

Let's break down the Dockerfile step by step:

- FROM node:14: Uses the official Node.js 14 Docker image as the base image to build upon.

- ARG APPID=<APP_NAME>: Defines an argument named APPID with a default value of <APP_NAME>. You can pass a specific value for APPID during the Docker image build if needed.

- WORKDIR /app: Sets the working directory inside the container to /app.

- COPY package.json package-lock.json ./: Copies the package.json and package-lock.json files to the working directory in the container.

- RUN npm ci --production: Runs the npm ci command to install production dependencies only. This is more efficient than npm install because it installs exactly what package-lock.json specifies, ensuring deterministic installations.

- COPY ./dist/apps/${APPID}/ .: Copies the build output (assumed to be in dist/apps/<APP_NAME>) of your Node.js app to the working directory in the container.

- COPY apps/${APPID}/src/config ./config/: Copies the application configuration files (from apps/<APP_NAME>/src/config) to a config directory in the container.

- COPY ./reference/openapi.yaml ./reference/: Copies the openapi.yaml file (presumably an OpenAPI specification) to a reference directory in the container.

- COPY ./resources ./resources/: Copies the resources directory to a resources directory in the container.

- ARG PORT=5000: Defines an argument named PORT with a default value of 5000. You can set a different value for PORT during the Docker image build if necessary.

- ENV PORT ${PORT}: Sets the environment variable PORT inside the container to the value provided in the PORT argument, or to the default value of 5000.

- EXPOSE ${PORT}: Declares that the container listens on the port given by PORT. EXPOSE documents the port; to make it reachable from outside, you still publish it when running the container (for example with docker run -p).

- COPY .env.template ./.env: Copies the .env.template file to .env in the container. This likely sets up environment variables for your Node.js app.

- ENTRYPOINT ["node", "main.js"]: Specifies the entry point command to run when the container starts. In this case, it runs the main.js file using the Node.js interpreter.

When building the image, you can pass values for the APPID and PORT arguments if you have specific app names or port requirements.
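On the application side, main.js would typically read the PORT environment variable that the Dockerfile sets. The following is a minimal sketch of that idea; resolvePort is a hypothetical helper, and the fallback of 5000 simply mirrors the ARG default shown in the Dockerfile.

```javascript
// Hypothetical helper: read the port from the environment, falling back to
// a default when PORT is missing or not a valid TCP port number.
function resolvePort(env, fallback = 5000) {
  const parsed = Number.parseInt(env.PORT, 10);
  const isValid = Number.isInteger(parsed) && parsed > 0 && parsed < 65536;
  return isValid ? parsed : fallback;
}

const port = resolvePort(process.env);
console.log(`The app would listen on port ${port}`);
```

Validating the value up front gives a predictable default instead of a confusing startup error when the variable is unset or malformed.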

Node.js App Deployment: The Power of Reverse Proxies

- A reverse proxy is an intermediary server that sits between client devices and backend servers.

- It receives client requests, forwards them to the appropriate backend server, and returns the response to the client.

- For Node.js apps, a reverse proxy improves security, handles load balancing, enables caching, and simplifies domain and subdomain handling. Together, these improve the app’s performance, scalability, and maintainability.
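One practical consequence of this forwarding is that the app no longer sees the client directly: the socket address it observes is the proxy's. A common convention is for the proxy to pass the original address and protocol in the X-Forwarded-For and X-Forwarded-Proto headers (with Nginx, this is typically configured through proxy_set_header). The sketch below assumes the proxy sets those headers and that the app is reachable only through the proxy; clientInfo is a hypothetical helper name.

```javascript
// Recover the original client IP and protocol from headers that a reverse
// proxy commonly adds. Node's http module lower-cases header names.
function clientInfo(headers, socketAddress) {
  // X-Forwarded-For is a comma-separated list; the left-most entry is the client.
  const forwardedFor = (headers["x-forwarded-for"] || "").split(",")[0].trim();
  const proto = (headers["x-forwarded-proto"] || "http").split(",")[0].trim();
  return {
    ip: forwardedFor || socketAddress, // fall back to the direct peer address
    secure: proto === "https",
  };
}

// Inside a request handler this would be called as:
//   clientInfo(req.headers, req.socket.remoteAddress)
console.log(
  clientInfo({ "x-forwarded-for": "203.0.113.7, 10.0.0.1", "x-forwarded-proto": "https" }, "127.0.0.1")
);
```

These headers can be forged by any client that reaches the app directly, so they should only be trusted when all traffic arrives through the proxy.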

Unlocking the Power of Reverse Proxies

- Load Balancing: If your Node.js app receives a high volume of traffic, you can use a reverse proxy to distribute incoming requests among multiple instances of your app. This ensures efficient utilization of resources and better handling of increased traffic.

- SSL Termination: You can offload SSL encryption and decryption to the reverse proxy, relieving your Node.js app of the computational overhead of handling SSL/TLS connections. This improves performance and lets your app focus on application logic.

- Caching: By setting up caching on the reverse proxy, you can cache static assets or even dynamic responses from your Node.js app. This significantly reduces response times for repeated requests, resulting in a better user experience and reduced load on your app.

- Security: A reverse proxy acts as a shield, protecting your Node.js app from direct exposure to the internet. It can filter and block malicious traffic, perform rate limiting, and act as a Web Application Firewall (WAF) to safeguard your application.

- URL Rewriting: The reverse proxy can rewrite URLs before forwarding requests to your Node.js app. This allows for cleaner and more user-friendly URLs while keeping the app’s internal routing intact.

- WebSockets and Long Polling: Some deployment setups require additional configuration to handle WebSockets or long polling connections properly. A reverse proxy can handle the necessary headers and protocols, enabling seamless real-time communication in your app.

- Centralized Logging and Monitoring: By routing all requests through the reverse proxy, you can gather centralized logs and metrics. This simplifies monitoring and analysis, making it easier to track application performance and troubleshoot issues.

- Domain and Subdomain Handling: A reverse proxy can manage multiple domains and subdomains pointing to different Node.js apps or services on the same server. This simplifies the setup for hosting multiple applications on one machine.

- Scenario: You have Node.js apps serving a blog and an e-commerce store, and you want them accessible under separate domains.

- Solution: Use a reverse proxy (e.g., Nginx) to configure domain-based routing:

- Set up Nginx with two server blocks (virtual hosts), one for each domain: www.myblog.com and store.myecommercestore.com. Point the DNS records of the domains to your server’s IP address.

- Configure the reverse proxy to forward requests to the corresponding Node.js app running on a different port (e.g., 3000 for the blog and 4000 for the e-commerce store).

- Users accessing www.myblog.com will see the blog content, while those visiting store.myecommercestore.com will interact with the e-commerce store.

- Using a reverse proxy simplifies domain handling and enables hosting multiple apps under different domains on the same server.

By using a reverse proxy, you can take advantage of these practical benefits to optimize your Node.js app’s deployment, strengthen security, and ensure a smooth experience for your users.
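To make the load-balancing item in the list above concrete, here is a small sketch of round-robin selection, the simplest strategy a proxy can use to spread requests across several instances of the same app. The upstream URLs and the createRoundRobin name are assumptions for illustration; a real proxy such as Nginx implements this internally.

```javascript
// Return a function that cycles through the given upstreams in order.
function createRoundRobin(upstreams) {
  if (upstreams.length === 0) {
    throw new Error("at least one upstream is required");
  }
  let next = 0;
  return function pick() {
    const upstream = upstreams[next];
    next = (next + 1) % upstreams.length; // wrap around after the last entry
    return upstream;
  };
}

// Two hypothetical instances of the same app on different ports.
const pick = createRoundRobin(["http://localhost:3000", "http://localhost:3001"]);
console.log(pick(), pick(), pick());
// prints: http://localhost:3000 http://localhost:3001 http://localhost:3000
```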

Nginx Setup

server {
    listen 80;
    server_name www.myblog.com;

    location / {
        proxy_pass http://localhost:3000;  # Forward requests to the Node.js app serving the blog
        # Additional proxy settings if needed
    }
}

server {
    listen 80;
    server_name store.myecommercestore.com;

    location / {
        proxy_pass http://localhost:4000;  # Forward requests to the Node.js app serving the e-commerce store
        # Additional proxy settings if needed
    }
}
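The routing decision that the two server blocks express can be sketched in a few lines of Node.js: look at the Host header and choose an upstream. This is only an illustration of the logic, not a replacement for Nginx; upstreamFor and the routes map are hypothetical names.

```javascript
// Host name -> upstream, mirroring the two server blocks above.
const routes = new Map([
  ["www.myblog.com", "http://localhost:3000"],
  ["store.myecommercestore.com", "http://localhost:4000"],
]);

// Resolve the upstream for a request's Host header, or null if unknown.
function upstreamFor(hostHeader) {
  // Strip an optional ":port" suffix and normalise case before the lookup.
  const host = (hostHeader || "").split(":")[0].toLowerCase();
  return routes.get(host) || null;
}

console.log(upstreamFor("www.myblog.com"));                // http://localhost:3000
console.log(upstreamFor("store.myecommercestore.com:80")); // http://localhost:4000
console.log(upstreamFor("unknown.example"));               // null
```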

Seamless Deployments to EC2, ECS, and EKS: Effectively Scaling and Managing Applications on AWS

Amazon EC2 Deployment:

Deploying a Node.js application to an Amazon EC2 instance using Docker involves the following steps:

- Set Up an EC2 Instance: Launch an EC2 instance on AWS, selecting the appropriate instance type and Amazon Machine Image (AMI) based on your needs. Make sure to configure security groups to allow incoming traffic on the necessary ports (e.g., HTTP on port 80 or HTTPS on port 443).

- Install Docker on the EC2 Instance: SSH into the EC2 instance and install Docker. Follow the instructions for your Linux distribution. For example, on Amazon Linux:

sudo yum update -y
sudo yum install docker -y
sudo service docker start
sudo usermod -a -G docker ec2-user # Replace "ec2-user" with your instance's username if it is different.

- Copy Your Dockerized Node.js App: Transfer your Dockerized Node.js application to the EC2 instance. This can be done using tools like SCP or SFTP, or you can clone your Docker project directly onto the server using Git.

- Run Your Docker Container: Navigate to your app’s directory containing the Dockerfile and build the Docker image:

docker build -t your-image-name .

Then, run the Docker container from the image:

docker run -d -p 80:3000 your-image-name

This command maps port 80 on the host to port 3000 in the container. Adjust the port numbers to match your application’s setup.

Terraform Code:

This Terraform configuration assumes that you have already containerized your Node.js app, that is, the repository it clones contains a working Dockerfile.

provider "aws" {
  region = "us-west-2" # Change to your desired AWS region
}

# EC2 instance that installs Docker, then builds and runs the app on first boot.
# (The resource is declared once; Terraform rejects two resources with the same type and name.)
resource "aws_instance" "example_ec2" {
  ami             = "ami-0c55b159cbfafe1f0"      # Replace with your desired AMI
  instance_type   = "t2.micro"                   # Change instance type if needed
  key_name        = "your_key_pair_name"         # Change to your EC2 key pair name
  security_groups = ["your_security_group_name"] # Change to your security group name

  # Provision Docker on the EC2 instance and start the container
  user_data = <<-EOT
    #!/bin/bash
    sudo yum update -y
    sudo yum install -y docker
    sudo systemctl start docker
    sudo usermod -aG docker ec2-user
    sudo yum install -y git
    git clone <your_repository_url>
    cd <your_app_directory>
    docker build -t your_image_name .
    docker run -d -p 80:3000 your_image_name
  EOT

  tags = {
    Name = "example-ec2"
  }
}

- Set Up a Reverse Proxy (Optional): If you want to use a custom domain or handle HTTPS traffic, configure Nginx or another reverse proxy server to forward requests to your Docker container.

- Set Up Domain and SSL (Optional): If you have a custom domain, configure DNS settings to point to your EC2 instance’s public IP or DNS name. Additionally, set up SSL/TLS certificates for HTTPS if you need secure connections.

- Monitor and Scale: Implement monitoring solutions to keep an eye on your app’s performance and resource usage. You can scale your Docker containers horizontally by deploying multiple instances behind a load balancer to handle increased traffic.

- Backup and Security: Regularly back up your application data and implement security measures like firewall rules and regular OS updates to keep your server and data safe.

Using Docker simplifies the deployment process by packaging your Node.js app and its dependencies into a container, ensuring consistency across different environments. It also makes scaling and managing your app easier, as Docker containers are lightweight, portable, and can be easily orchestrated using container orchestration tools like Docker Compose or Kubernetes.
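One detail worth handling when a Node.js app runs in a container: docker stop, and orchestrators in general, ask the process to exit by sending SIGTERM and force-kill it after a grace period (10 seconds by default for docker stop). With an ENTRYPOINT like the one above, node runs as PID 1 and may not react to the signal unless the app installs a handler. The sketch below shows one way to do that; installGracefulShutdown and its parameters are illustrative names, and the 8-second timeout is an assumption chosen to stay under Docker's default grace period.

```javascript
// Close the server on SIGTERM so in-flight requests can finish before exit.
// `proc` is injectable for testing; it defaults to the real process object.
function installGracefulShutdown(server, proc = process, timeoutMs = 8000) {
  proc.once("SIGTERM", () => {
    // Stop accepting new connections; exit once the open ones have finished.
    server.close(() => proc.exit(0));
    // Safety net: force a non-zero exit if connections do not drain in time.
    // unref() keeps this timer from holding the process open on its own.
    setTimeout(() => proc.exit(1), timeoutMs).unref();
  });
}

// Typical use in the entry point, after creating an http.Server:
//   const server = http.createServer(handler).listen(3000);
//   installGracefulShutdown(server);
```

Exiting promptly on SIGTERM also makes deployments faster, because the runtime does not have to wait for the grace period to expire before replacing the container.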

Amazon ECS Deployment

Deploying a Node.js app using AWS ECS (Elastic Container Service) involves the following steps:

- Containerize Your Node.js App: Package your Node.js app into a Docker container. Create a Dockerfile similar to the one discussed earlier in this article. Build and test the Docker image locally.

- Create an ECR Repository (Optional): If you want to use Amazon ECR (Elastic Container Registry) to store your Docker images, create an ECR repository to push your Docker image to.

- Push Docker Image to ECR (Optional): If you are using ECR, authenticate your Docker client to the ECR registry and push your Docker image to the repository.

- Create a Task Definition: Define your app’s container configuration in an ECS task definition. Specify the Docker image, environment variables, container ports, and other necessary settings.

- Create an ECS Cluster: Create an ECS cluster, which is a logical grouping of tasks and services (backed by EC2 instances or Fargate capacity) where your containers will run. You can create a new cluster or use an existing one.

- Set Up ECS Service: Create an ECS service that uses the task definition you created earlier. The service maintains the desired number of running tasks (containers) based on the configured settings (e.g., number of instances, load balancer, etc.).

- Configure Load Balancer (Optional): If you want to distribute incoming traffic across multiple instances of your app, set up an Application Load Balancer (ALB) or Network Load Balancer (NLB) and associate it with your ECS service.

- Set Up Security Groups and IAM Roles: Configure security groups for your ECS instances and set up IAM roles with appropriate permissions for your ECS tasks to access other AWS services if needed.

- Deploy and Scale: Deploy your ECS service, and ECS will automatically start running containers based on the task definition. You can scale the service manually or configure auto-scaling rules based on metrics like CPU utilization or request count.

- Monitor and Troubleshoot: Monitor your ECS service using CloudWatch metrics and logs. Use ECS service logs and Container Insights to troubleshoot issues and optimize performance.

AWS provides several tools like AWS Fargate, AWS App Runner, and AWS Elastic Beanstalk that simplify the ECS deployment process further. Each has its strengths and use cases, so choose the one that best fits your application’s requirements and complexity.
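When a load balancer sits in front of the service, it decides which tasks receive traffic by probing a health-check endpoint on each one. The sketch below shows a minimal endpoint the app could expose for that purpose. The /health path and the handle function are assumptions for illustration; the actual path is whatever you configure on the load balancer's target group.

```javascript
const http = require("http");

// Pure routing function: returns the status code and JSON body for a request.
function handle(method, url) {
  if (method === "GET" && url === "/health") {
    return {
      status: 200,
      body: JSON.stringify({ status: "ok", uptime: process.uptime() }),
    };
  }
  return { status: 404, body: JSON.stringify({ error: "not found" }) };
}

const server = http.createServer((req, res) => {
  const { status, body } = handle(req.method, req.url);
  res.writeHead(status, { "Content-Type": "application/json" });
  res.end(body);
});

// In the real entry point, listen on the task definition's containerPort:
//   server.listen(3000);
```

Keeping the endpoint cheap (no database calls) avoids marking healthy tasks as failed when a dependency is merely slow.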

Terraform Code:

provider "aws" {
  region = "us-west-2" # Change to your desired AWS region
}

# Create an ECR repository (optional if using ECR)
resource "aws_ecr_repository" "example_ecr" {
  name = "example-ecr-repo"
}

# ECS Task Definition
resource "aws_ecs_task_definition" "example_task_definition" {
  family                   = "example-task-family"
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc"
  cpu                      = "256" # Fargate requires task-level CPU and memory
  memory                   = "512"

  # Needed when pulling the image from ECR or sending logs to CloudWatch
  # execution_role_arn = "arn:aws:iam::123456789012:role/ecsTaskExecutionRole"

  # container_definitions must be strict JSON, so the notes live out here:
  # - "image": use your ECR URL or your custom Docker image URL
  # - "containerPort": the Node.js app's listening port
  # - "environment": add other environment variables if needed
  container_definitions = <<-TASK_DEFINITION
    [
      {
        "name": "example-app",
        "image": "your_ecr_repository_url:latest",
        "memory": 512,
        "cpu": 256,
        "essential": true,
        "portMappings": [
          {
            "containerPort": 3000,
            "protocol": "tcp"
          }
        ],
        "environment": [
          {
            "name": "NODE_ENV",
            "value": "production"
          }
        ]
      }
    ]
  TASK_DEFINITION
}

# Create an ECS cluster
resource "aws_ecs_cluster" "example_cluster" {
  name = "example-cluster"
}

# ECS Service
resource "aws_ecs_service" "example_service" {
  name            = "example-service"
  cluster         = aws_ecs_cluster.example_cluster.id
  task_definition = aws_ecs_task_definition.example_task_definition.arn
  desired_count   = 1 # Number of tasks (containers) you want to run
  launch_type     = "FARGATE"

  # Tasks using the awsvpc network mode need subnets and security groups
  network_configuration {
    subnets         = ["subnet-1234567890"] # Replace with your subnet IDs
    security_groups = ["sg-1234567890"]     # Replace with your security group IDs
  }

  # Optional: load balancer settings if using ALB/NLB
  # load_balancer {
  #   target_group_arn = "arn:aws:elasticloadbalancing:us-west-2:123456789012:targetgroup/example-target-group/abcdefghij123456"
  #   container_name   = "example-app"
  #   container_port   = 3000
  # }

  # Optional: other service settings
  # enable_ecs_managed_tags = true
  # capacity_provider_strategy { # Cannot be combined with launch_type
  #   capacity_provider = "FARGATE_SPOT"
  #   weight            = 1
  # }
  # deployment_controller {
  #   type = "ECS"
  # }

  depends_on = [
    aws_ecs_cluster.example_cluster,
    aws_ecs_task_definition.example_task_definition,
  ]
}
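The container_definitions value has to be strict JSON, which does not permit comments or trailing commas, and a hand-edited heredoc is easy to get wrong. One way to sanity-check it, sketched below, is to build the same structure as plain data in Node.js and serialise it. The values mirror the placeholders in the Terraform example; this is a checking aid, not part of the deployment itself.

```javascript
// The same container definition as in the task definition, as plain data.
const containerDefinitions = [
  {
    name: "example-app",
    image: "your_ecr_repository_url:latest", // placeholder, as in the Terraform example
    memory: 512,
    cpu: 256,
    essential: true,
    portMappings: [{ containerPort: 3000, protocol: "tcp" }],
    environment: [{ name: "NODE_ENV", value: "production" }],
  },
];

// JSON.stringify always emits strict JSON: no comments, no trailing commas.
const json = JSON.stringify(containerDefinitions, null, 2);

// Round-trip through JSON.parse to confirm the text parses back unchanged.
const parsed = JSON.parse(json);
console.log(json);
console.log("containers:", parsed.length);
```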

Amazon EKS Deployment

Deploying a Node.js app to Amazon EKS (Elastic Kubernetes Service) involves the following steps:

- Containerize Your Node.js App: Package your Node.js app into a Docker container. Create a Dockerfile similar to the one discussed earlier in this article. Build and test the Docker image locally.

- Create an ECR Repository (Optional): If you want to use Amazon ECR (Elastic Container Registry) to store your Docker images, create an ECR repository to push your Docker image to.

- Push Docker Image to ECR (Optional): If you are using ECR, authenticate your Docker client to the ECR registry and push your Docker image to the repository.

- Create an Amazon EKS Cluster: Use the AWS Management Console, AWS CLI, or Terraform to create an EKS cluster. The cluster consists of a managed Kubernetes control plane and worker nodes that run your containers.

- Install and Configure kubectl: Install the kubectl command-line tool and configure it to connect to your EKS cluster.

- Deploy Your Node.js App to EKS: Create a Kubernetes Deployment YAML or Helm chart that defines your Node.js app’s deployment configuration, including the Docker image, environment variables, container ports, etc.

- Apply the Kubernetes Configuration: Use kubectl apply or helm install (if using Helm) to apply the Kubernetes configuration to your EKS cluster. This creates the necessary Kubernetes resources, such as Pods and Deployments, to run your app.

- Expose Your App with a Service: Create a Kubernetes Service to expose your app to the internet or to other services. You can use a LoadBalancer service type to get a public endpoint for your app, or use an Ingress controller to manage traffic and routing to your app.

- Set Up Security Groups and IAM Roles: Configure security groups for your EKS worker nodes and set up IAM roles with appropriate permissions for your pods to access other AWS services if needed.

- Monitor and Troubleshoot: Monitor your EKS cluster and app using Kubernetes tools like kubectl get, kubectl logs, and kubectl describe. Use AWS CloudWatch and CloudTrail for additional monitoring and logging.

- Scaling and Upgrades: EKS can scale your worker nodes with the workload (for example, through managed node groups combined with an autoscaler). Additionally, you can scale your app’s replicas or update your app to a new version by applying new Kubernetes configurations.

Remember to follow best practices for securing your EKS cluster, managing permissions, and optimizing performance. AWS provides several managed services and tools to simplify EKS deployments, such as EKS Managed Node Groups, AWS Fargate for EKS, and AWS App Mesh for service mesh capabilities. These services can help streamline the deployment process and provide additional features for your Node.js app running on EKS.
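The Deployment described in the steps above is usually written as YAML, but kubectl also accepts JSON, so the same manifest can be generated from Node.js. The sketch below builds a minimal Deployment object; the deploymentManifest function, the app name, and the image placeholder are assumptions for illustration.

```javascript
// Build a minimal apps/v1 Deployment manifest as a plain object.
function deploymentManifest({ name, image, replicas = 2, port = 3000 }) {
  // The selector and the pod template must carry the same labels,
  // otherwise the Deployment cannot find the pods it creates.
  const labels = { app: name };
  return {
    apiVersion: "apps/v1",
    kind: "Deployment",
    metadata: { name, labels },
    spec: {
      replicas,
      selector: { matchLabels: labels },
      template: {
        metadata: { labels },
        spec: {
          containers: [{ name, image, ports: [{ containerPort: port }] }],
        },
      },
    },
  };
}

const manifest = deploymentManifest({
  name: "example-app",
  image: "your_ecr_repository_url:latest", // placeholder image reference
});
console.log(JSON.stringify(manifest, null, 2));
```

Saved as a script, the output could be written to a file and applied with kubectl apply -f, for example node deployment.js > deployment.json followed by kubectl apply -f deployment.json (both file names are hypothetical).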

Deploying an EKS cluster using Terraform involves several steps. Below is example Terraform code that creates an EKS cluster and a node group with worker nodes, and deploys a sample Kubernetes Deployment and Service for a Node.js app:

provider "aws" {
  region = "us-west-2" # Change to your desired AWS region
}

# Create an EKS cluster
resource "aws_eks_cluster" "example_cluster" {
  name     = "example-cluster"
  role_arn = aws_iam_role.example_cluster.arn

  vpc_config {
    subnet_ids = ["subnet-1234567890", "subnet-0987654321"] # Replace with your desired subnet IDs
  }

  depends_on = [
    aws_iam_role_policy_attachment.eks_cluster,
  ]
}

# Create an IAM role and policy for the EKS cluster
resource "aws_iam_role" "example_cluster" {
  name = "example-eks-cluster"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = "sts:AssumeRole"
        Principal = {
          Service = "eks.amazonaws.com"
        }
      }
    ]
  })
}

resource "aws_iam_role_policy_attachment" "eks_cluster" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.example_cluster.name
}

# Create an IAM role and policies for the EKS node group
resource "aws_iam_role" "example_node_group" {
  name = "example-eks-node-group"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = "sts:AssumeRole"
        Principal = {
          Service = "ec2.amazonaws.com"
        }
      }
    ]
  })
}

resource "aws_iam_role_policy_attachment" "eks_node_group" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
  role       = aws_iam_role.example_node_group.name
}

resource "aws_iam_role_policy_attachment" "eks_cni" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
  role       = aws_iam_role.example_node_group.name
}

# Lets worker nodes pull images from ECR
resource "aws_iam_role_policy_attachment" "ecr_read_only" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
  role       = aws_iam_role.example_node_group.name
}

resource "aws_iam_role_policy_attachment" "ssm" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
  role       = aws_iam_role.example_node_group.name
}

# Create the EKS node group
resource "aws_eks_node_group" "example_node_group" {
  cluster_name    = aws_eks_cluster.example_cluster.name
  node_group_name = "example-node-group"
  node_role_arn   = aws_iam_role.example_node_group.arn
  subnet_ids      = ["subnet-1234567890", "subnet-0987654321"] # Replace with your desired subnet IDs

  scaling_config {
    desired_size = 2
    max_size     = 3
    min_size     = 1
  }

  # The node role needs its policies attached before nodes can join the cluster
  depends_on = [
    aws_eks_cluster.example_cluster,
    aws_iam_role_policy_attachment.eks_node_group,
    aws_iam_role_policy_attachment.eks_cni,
  ]
}

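# Kubernetes Configuration
# The kubernetes_* resources below need a provider that can reach the new cluster.
data "aws_eks_cluster_auth" "example" {
  name = aws_eks_cluster.example_cluster.name
}

provider "kubernetes" {
  host                   = aws_eks_cluster.example_cluster.endpoint
  cluster_ca_certificate = base64decode(aws_eks_cluster.example_cluster.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.example.token
}

# Optional: only needed if you render manifests from files; the resources below do not use them,
# and Terraform fails if the referenced files do not exist.
data "template_file" "nodejs_deployment" {
  template = file("nodejs_deployment.yaml") # Replace with your Node.js app's Kubernetes Deployment YAML
}

data "template_file" "nodejs_service" {
  template = file("nodejs_service.yaml") # Replace with your Node.js app's Kubernetes Service YAML
}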

# Deploy the Kubernetes Deployment and Service
resource "kubernetes_deployment" "example_deployment" {
  metadata {
    name = "example-deployment"
    labels = {
      app = "example-app"
    }
  }

  spec {
    replicas = 2 # Number of replicas (pods) you want to run

    selector {
      match_labels = {
        app = "example-app"
      }
    }

    template {
      metadata {
        labels = {
          app = "example-app"
        }
      }

      spec {
        container {
          image = "your_ecr_repository_url:latest" # Use ECR URL or your custom Docker image URL
          name  = "example-app"

          port {
            container_port = 3000 # Node.js app's listening port
          }
          # Add other container configuration if needed
        }
      }
    }
  }
}

resource "kubernetes_service" "example_service" {
  metadata {
    name = "example-service"
  }

  spec {
    selector = {
      app = kubernetes_deployment.example_deployment.spec[0].template[0].metadata[0].labels.app
    }

    port {
      port        = 80
      target_port = 3000 # Node.js app's container port
    }

    type = "LoadBalancer" # Use "LoadBalancer" for public access or "ClusterIP" for internal access
  }
}
