
In today's dynamic world of web development, the foundation upon which we build our applications is crucial. At the heart of many modern web applications lies the unsung hero: the database. But how we interact with this foundation (how we query, shape, and manipulate our data) can mean the difference between an efficient, scalable app and one that buckles under pressure.

Enter the formidable trio of Node.js, Knex.js, and PostgreSQL. Node.js, with its event-driven architecture, promises speed and efficiency. Knex.js, a shining gem in the Node ecosystem, simplifies database interactions, making them more intuitive and less error-prone. And then there's PostgreSQL, a relational database that has stood the test of time, renowned for its robustness and versatility.

So why this particular mix of technologies? And how can they be harnessed to craft resilient and reliable database models? Join us as we unpack the synergy of Node.js, Knex.js, and PostgreSQL, exploring the many ways they can be leveraged to elevate your web development endeavors.

Preliminary Setup

In a previous article, I covered the foundational setup and initialization of services using Knex.js and Postgres. This article instead focuses on the intricacies of the model layer in service development. I won't go into Node.js setup or explain Knex migrations and seeds in detail here, as all of that is covered in the previous article.

Postgres Connection

Anyway, let's quickly create a database using docker-compose:

```yaml
version: '3.6'

volumes:
  data:

services:
  database:
    build:
      context: .
      dockerfile: postgres.dockerfile
    image: postgres:latest
    container_name: postgres
    environment:
      TZ: Europe/Paris
      POSTGRES_DB: ${DB_NAME}
      POSTGRES_USER: ${DB_USER}
      POSTGRES_PASSWORD: ${DB_PASSWORD}
    networks:
      - default
    volumes:
      - data:/var/lib/postgresql/data
    ports:
      - "5432:5432"
    restart: unless-stopped
```

Docker Compose Database Setup

And in your .env file, the connection values:

```env
DB_HOST="localhost"
DB_PORT=5432
DB_NAME="modeldb"
DB_USER="testuser"
DB_PASSWORD="DBPassword"
```

These environment variables are used by the docker-compose file to launch your Postgres database. Once all values are in place, start it with docker-compose up.
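The knexfile below reads these variables with `as string` casts, which satisfy the compiler but not a missing value: an absent `DB_HOST` simply becomes `undefined`. It also never reads `DB_PORT`, so the connection relies on the pg default port. A minimal sketch of a stricter alternative follows; `requireEnv` and `connectionFromEnv` are hypothetical helpers (not part of dotenv or Knex), and they take the environment as a parameter so they can be exercised without touching `process.env`.

```typescript
type Env = Record<string, string | undefined>;

// Hypothetical helper: return a required variable or fail fast with a clear message.
function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Builds the same connection fields the knexfile uses, plus the port from DB_PORT
// (falling back to the Postgres default of 5432 when it is not set).
function connectionFromEnv(env: Env) {
  return {
    host: requireEnv(env, 'DB_HOST'),
    port: env.DB_PORT ? Number(env.DB_PORT) : 5432,
    database: requireEnv(env, 'DB_NAME'),
    user: requireEnv(env, 'DB_USER'),
    password: requireEnv(env, 'DB_PASSWORD'),
  };
}
```

In a knexfile this could be called as `connectionFromEnv(process.env)` once dotenv has loaded the file, so a misconfigured environment fails at startup rather than at the first query.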

Knex Setup

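Before diving into the Knex.js setup, note that we'll be using Node.js version 18. To start crafting models, we only need the following dependencies:

```json
"dependencies": {
  "dotenv": "^16.3.1",
  "express": "^4.18.2",
  "knex": "^2.5.1",
  "pg": "^8.11.3"
}
```

The TypeScript files and tests that follow also rely on development dependencies such as typescript, ts-node, jest, ts-jest, and @faker-js/faker.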

Create knexfile.ts and add the following content:

```typescript
require('dotenv').config();
require('ts-node/register');

import type { Knex } from 'knex';

const environments: string[] = ['development', 'test', 'production'];

const connection: Knex.ConnectionConfig = {
  host: process.env.DB_HOST as string,
  database: process.env.DB_NAME as string,
  user: process.env.DB_USER as string,
  password: process.env.DB_PASSWORD as string,
};

const commonConfig: Knex.Config = {
  client: 'pg',
  connection,
  migrations: {
    directory: './database/migrations',
  },
  seeds: {
    directory: './database/seeds',
  },
};

// Every environment gets the same configuration object.
export default Object.fromEntries(environments.map((env: string) => [env, commonConfig]));
```

Knex File Configuration
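The last line of the knexfile maps every environment name to the very same `commonConfig` object. The sketch below reproduces that expression with a simplified stand-in type (`SketchConfig` is an assumption, not Knex's `Knex.Config`) and an explicit tuple annotation, to make the consequence visible: all three environments share one object, and therefore one database.

```typescript
// Simplified stand-in for Knex.Config, for illustration only.
type SketchConfig = { client: string; connection: { database: string } };

const environments: string[] = ['development', 'test', 'production'];
const commonConfig: SketchConfig = { client: 'pg', connection: { database: 'modeldb' } };

// Same idea as the knexfile: [['development', cfg], ['test', cfg], ...] -> object.
const configs: Record<string, SketchConfig> = Object.fromEntries(
  environments.map((env): [string, SketchConfig] => [env, commonConfig]),
);

// Every key points at the identical object, so every environment uses 'modeldb'.
const sharesOneObject = environments.every((env) => configs[env] === commonConfig);
```

That sharing is convenient at first, but it also means tests would run against the development database until the test entry gets its own connection settings.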

Next, in the root directory of your project, create a new folder named database. Inside this folder, add an index.ts file. This file will serve as our main database connection handler, using the configurations from the knexfile. Here is what index.ts should look like:

```typescript
import Knex from 'knex';
import configs from '../knexfile';

// Pick the configuration for the current environment, defaulting to development.
export const database = Knex(configs[process.env.NODE_ENV || 'development']);
```

Export database with applied configs

This setup selects the database connection based on the current Node environment (NODE_ENV, falling back to development), ensuring that the right configuration is used whether you're in development, test, or production.
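One caveat: if `NODE_ENV` holds a value that is not a key of the knexfile export (say, `staging`), `configs[...]` is `undefined` and Knex receives no configuration at all, failing with a less direct error. A minimal sketch of a lookup that fails early with a clear message; `selectConfig` is a hypothetical helper, and the config type is left generic so the example does not depend on Knex.

```typescript
// Hypothetical helper: pick the config for the current environment or fail early.
function selectConfig<C>(configs: Record<string, C>, env: string | undefined): C {
  const name = env || 'development'; // same fallback as database/index.ts
  const config = configs[name];
  if (config === undefined) {
    const known = Object.keys(configs).join(', ');
    throw new Error(`No database config for NODE_ENV="${name}" (known: ${known})`);
  }
  return config;
}
```

With it, the export in database/index.ts could read `Knex(selectConfig(configs, process.env.NODE_ENV))`.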

In your project directory, open (or create) src/@types/index.ts. Here we'll define a few essential types to represent our data structures, which helps keep data handling consistent throughout the application. The following code defines an enumeration of user roles and type definitions for both a user and a post:

```typescript
export enum Role {
  Admin = 'admin',
  User = 'user',
}

export type User = {
  email: string;
  first_name: string;
  last_name: string;
  role: Role;
};

export type Post = {
  title: string;
  content: string;
  user_id: number;
};
```

Essential Types

These types act as a blueprint, letting you define the structure and relationships of your data, which makes your database interactions more predictable and less prone to errors.
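These types exist only at compile time, so values that arrive from outside the program (a request body, a raw query result) are not checked against them. A small runtime guard for the `Role` enum is sketched below; `isRole` is a hypothetical helper, and the enum is repeated so the snippet stands alone.

```typescript
// Repeated from src/@types so this snippet is self-contained.
enum Role {
  Admin = 'admin',
  User = 'user',
}

// Hypothetical runtime guard: narrows an unknown value to Role.
function isRole(value: unknown): value is Role {
  // For a string enum, Object.values returns the string values ('admin', 'user').
  return Object.values(Role).includes(value as Role);
}
```

Inside an `if (isRole(input))` branch, TypeScript treats `input` as a `Role`, so it can be assigned to the `role` field of a `User` without a cast.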

With that in place, you can create migrations and seeds. Run npx knex migrate:make create_users_table and fill in the generated file:


```typescript
import { Knex } from 'knex';
import { Role } from '../../src/@types';

const tableName = 'users';

export async function up(knex: Knex): Promise<void> {
  return knex.schema.createTable(tableName, (table: Knex.TableBuilder) => {
    table.increments('id');
    table.string('email').unique().notNullable();
    table.string('password').notNullable();
    table.string('first_name').notNullable();
    table.string('last_name').notNullable();
    table.enu('role', [Role.User, Role.Admin]).notNullable();
    // Adds created_at and updated_at, both defaulting to the current time.
    table.timestamps(true, true);
  });
}

export async function down(knex: Knex): Promise<void> {
  return knex.schema.dropTable(tableName);
}
```

Knex Migration File for Users

And run npx knex migrate:make create_posts_table:


```typescript
import { Knex } from 'knex';

const tableName = 'posts';

export async function up(knex: Knex): Promise<void> {
  return knex.schema.createTable(tableName, (table: Knex.TableBuilder) => {
    table.increments('id');
    table.string('title').notNullable();
    // text rather than string: string() maps to varchar(255), which a post body can exceed.
    table.text('content').notNullable();
    table.integer('user_id').unsigned().notNullable();
    // Deleting a user also deletes that user's posts.
    table.foreign('user_id').references('id').inTable('users').onDelete('CASCADE');
    table.timestamps(true, true);
  });
}

export async function down(knex: Knex): Promise<void> {
  return knex.schema.dropTable(tableName);
}
```

Knex Migration File for Posts

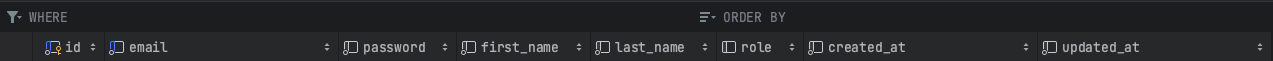

After environment issues up, continue by way of operating npx knex migrate:newest to use the most recent migrations. As soon as this step is entire, you are all set to check up on the database desk the use of your favourite GUI instrument:

Created Desk by way of Knex Migration

We're ready to seed our tables. Run npx knex seed:make 01-users and add the following content:


```typescript
import { Knex } from 'knex';
import { faker } from '@faker-js/faker';
import { User, Role } from '../../src/@types';

const tableName = 'users';

export async function seed(knex: Knex): Promise<void> {
  // Start from an empty table (posts are removed too, via ON DELETE CASCADE).
  await knex(tableName).del();

  // Ten random users.
  const users: User[] = [...Array(10).keys()].map(() => ({
    email: faker.internet.email().toLowerCase(),
    first_name: faker.person.firstName(),
    last_name: faker.person.lastName(),
    role: Role.User,
  }));

  await knex(tableName).insert(users.map(user => ({ ...user, password: 'test_password' })));
}
```

Knex Seed Users

And for posts, run npx knex seed:make 02-posts with the following content:


```typescript
import { Knex } from 'knex';
import { faker } from '@faker-js/faker';
import type { Post } from '../../src/@types';

const tableName = 'posts';

export async function seed(knex: Knex): Promise<void> {
  await knex(tableName).del();

  const usersIds: Array<{ id: number }> = await knex('users').select('id');
  const posts: Post[] = [];

  // Give every user between 1 and 10 posts.
  usersIds.forEach(({ id: user_id }) => {
    const randomAmount = Math.floor(Math.random() * 10) + 1;
    for (let i = 0; i < randomAmount; i++) {
      posts.push({
        title: faker.lorem.words(3),
        content: faker.lorem.paragraph(),
        user_id,
      });
    }
  });

  await knex(tableName).insert(posts);
}
```

Knex Seed Posts
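The seed gives each user `Math.floor(Math.random() * 10) + 1` posts. Because `Math.random()` returns a value in [0, 1), that expression always lands between 1 and 10 inclusive. Here is a sketch of the same loop with the random source injected so the bounds can be checked deterministically; `postsPerUser`, `buildPostRows`, and `PostRow` are hypothetical names, and placeholder strings stand in for faker.

```typescript
type PostRow = { title: string; content: string; user_id: number };

// Same formula as the seed: maps a number in [0, 1) to an integer in 1..10.
function postsPerUser(random: () => number): number {
  return Math.floor(random() * 10) + 1;
}

// Hypothetical helper mirroring the seed's loop, with the random source injected.
function buildPostRows(userIds: number[], random: () => number): PostRow[] {
  const rows: PostRow[] = [];
  for (const user_id of userIds) {
    const amount = postsPerUser(random);
    for (let i = 0; i < amount; i++) {
      // Placeholder text instead of faker.lorem.* keeps the sketch dependency-free.
      rows.push({ title: `Post ${i + 1}`, content: `Content for user ${user_id}`, user_id });
    }
  }
  return rows;
}
```

Passing `Math.random` reproduces the seed's behavior, while a fixed function makes the row count predictable in a test.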

The naming convention we've adopted for our seed files, 01-users and 02-posts, is intentional. Knex runs seed files in alphabetical order, so this sequential naming guarantees the correct order of seeding operations. In particular, it prevents posts from being seeded before users, which is essential for relational integrity: every post references an existing user through its user_id foreign key.
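The sketch below imitates that alphabetical ordering with a plain string sort (an approximation for illustration, not Knex's own code) and shows why the numeric prefixes are zero-padded. `10-comments.ts` is a hypothetical file name used only to expose the pitfall: without padding, it sorts before `2-posts.ts`.

```typescript
// Approximates alphabetical seed ordering with the default string sort.
function seedOrder(files: string[]): string[] {
  return [...files].sort();
}

// Zero-padded prefixes sort in numeric order.
const padded = seedOrder(['02-posts.ts', '01-users.ts']);

// Unpadded prefixes compare character by character, so '10' comes before '2'.
const unpadded = seedOrder(['2-posts.ts', '10-comments.ts', '1-users.ts']);
```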

Models and Tests

With the foundation of our database now firmly established through migrations and seeds, it's time to shift our focus to another critical component of database-driven applications: models. Models act as the backbone of our application, representing the data structures and relationships within our database. They provide an abstraction layer that lets us interact with our data in an object-oriented way. In this section, we'll dig into creating models and the details behind them, building a seamless bridge between our application logic and stored data.

In src/models/Model/index.ts, we'll establish the foundational setup:

```typescript
import { database } from 'root/database';

export abstract class Model {
  // Each subclass sets the table it maps to.
  protected static tableName?: string;

  // Query builder for the subclass's table.
  private static get table() {
    if (!this.tableName) {
      throw new Error('The table name must be defined for the model.');
    }
    return database(this.tableName);
  }
}
```

Initial Setup for Model

To illustrate how to use our Model class, consider the following TestModel:

```typescript
class TestModel extends Model {
  protected static tableName = 'test_table';
}
```

Usage of Extended Model

This subclass, TestModel, extends our base Model and specifies 'test_table' as the database table it corresponds to.
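This works because inside a static method, `this` refers to the class the method was called on. When `TestModel` uses an inherited static member, `this.tableName` resolves to `TestModel.tableName`. The sketch below shows that lookup without a database; `BaseSketch`, `UsersSketch`, `MissingNameSketch`, and `resolveTable` are hypothetical names, and `resolveTable` returns the name where the real getter returns a query builder.

```typescript
abstract class BaseSketch {
  protected static tableName?: string;

  // Mirrors Model's table getter, but returns the name instead of database(name).
  static resolveTable(): string {
    if (!this.tableName) {
      throw new Error('The table name must be defined for the model.');
    }
    return this.tableName;
  }
}

class UsersSketch extends BaseSketch {
  protected static tableName = 'users';
}

// Forgets to set tableName, so the guard in the base class fires.
class MissingNameSketch extends BaseSketch {}
```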

To truly harness the potential of our Model class, we need to equip it with methods that interact with the database. These methods encapsulate common database operations, making our interactions not only more intuitive but also more efficient. Let's extend our Model class with a few essential methods:

```typescript
import { database } from 'root/database';

export abstract class Model {
  protected static tableName?: string;

  private static get table() {
    if (!this.tableName) {
      throw new Error('The table name must be defined for the model.');
    }
    return database(this.tableName);
  }

  // Public so they can be called on subclasses from outside the class (e.g. in tests).
  public static async insert<Payload>(data: Payload): Promise<{ id: number }> {
    // With the pg client, returning('id') resolves to an array such as [{ id: 1 }].
    const [result] = await this.table.insert(data).returning('id');
    return result;
  }

  public static async findOneById<Result>(id: number): Promise<Result> {
    return this.table.where('id', id).select('*').first();
  }

  public static async findAll<Item>(): Promise<Item[]> {
    return this.table.select('*');
  }
}
```

Essential Methods of Model

In this class, we've added methods to insert data (insert), fetch a single entry by its ID (findOneById), and retrieve all rows (findAll). These foundational methods streamline our database interactions and pave the way for more complex operations as the application grows.
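For unit tests that should not touch Postgres, the same three signatures can be backed by an array. The sketch below is a hypothetical in-memory stand-in (`InMemoryTable` is not part of the article's code or of Knex) that mimics the contract described above: `insert` resolves to `{ id }`, and `findOneById` resolves to `undefined` when nothing matches, as Knex's `.first()` does.

```typescript
// Hypothetical in-memory stand-in mirroring Model's insert/findOneById/findAll contract.
class InMemoryTable {
  private rows: Array<{ id: number }> = [];
  private nextId = 1;

  async insert<Payload extends object>(data: Payload): Promise<{ id: number }> {
    const id = this.nextId++; // imitates an auto-incrementing primary key
    this.rows.push({ ...data, id });
    return { id };
  }

  async findOneById<Result>(id: number): Promise<Result | undefined> {
    const row = this.rows.find((r) => r.id === id);
    return row as unknown as Result | undefined;
  }

  async findAll<Item>(): Promise<Item[]> {
    return [...this.rows] as unknown as Item[];
  }
}
```

A stand-in like this only checks code that consumes the model. It cannot catch SQL or schema mistakes, which is what the integration tests against a real database are for.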

How should we test its functionality? By writing an integration test for our Model. Let's dive in.

Yes, I'll use Jest for integration tests, since it's the same tool I use for unit tests. Jest is primarily known as a unit testing framework, but it's versatile enough to handle integration tests as well.

Make sure your Jest configuration matches the following:

```typescript
import type { Config } from '@jest/types';

const config: Config.InitialOptions = {
  clearMocks: true,
  preset: 'ts-jest',
  testEnvironment: 'node',
  coverageDirectory: 'coverage',
  verbose: true,
  modulePaths: ['./'],
  transform: {
    '^.+\\.ts?$': 'ts-jest',
  },
  // Picks up both *.spec.ts and *.integration.spec.ts files.
  testRegex: '.*\\.(spec|integration\\.spec)\\.ts$',
  testPathIgnorePatterns: ['/node_modules/'],
  // Resolve the 'root/...' and 'src/...' import aliases used throughout the project.
  moduleNameMapper: {
    '^root/(.*)$': '<rootDir>/$1',
    '^src/(.*)$': '<rootDir>/src/$1',
  },
};

export default config;
```

Jest Configurations
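Two of these options are regular expressions, so their effect can be checked directly. The sketch below tests the `testRegex` pattern against a few file names and applies the `^root/(.*)$` mapping the way a `$1` replacement works; `/project` stands in for `<rootDir>` and is an assumed value for illustration.

```typescript
// Same pattern as testRegex, written as a RegExp literal (single backslashes).
const testRegex = /.*\.(spec|integration\.spec)\.ts$/;

const candidates = ['Model.integration.spec.ts', 'UserModel.spec.ts', 'index.ts', 'knexfile.ts'];
const picked = candidates.filter((file) => testRegex.test(file));

// Same idea as moduleNameMapper: '^root/(.*)$' -> '<rootDir>/$1'.
const rootDir = '/project'; // assumed value for <rootDir>
const mapRoot = (specifier: string) => specifier.replace(/^root\/(.*)$/, `${rootDir}/$1`);
```

Note that `Model.integration.spec.ts` would already match the plain `spec` branch, so the `integration.spec` alternative mainly documents intent.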

In the Model directory, create a file named Model.integration.spec.ts:


```typescript
import { Model } from '.';
import { database } from 'root/database';

const testTableName = 'test_table';

class TestModel extends Model {
  protected static tableName = testTableName;
}

type TestType = {
  id: number;
  name: string;
};

describe('Model', () => {
  beforeAll(async () => {
    process.env.NODE_ENV = 'test';
    await database.schema.createTable(testTableName, table => {
      // increments() already creates the primary key.
      table.increments('id');
      table.string('name');
    });
  });

  // Keep tests independent: empty the table after each one.
  afterEach(async () => {
    await database(testTableName).del();
  });

  afterAll(async () => {
    await database.schema.dropTable(testTableName);
    await database.destroy();
  });

  it('should insert a row and fetch it', async () => {
    await TestModel.insert<Omit<TestType, 'id'>>({ name: 'TestName' });
    const allResults = await TestModel.findAll<TestType>();
    expect(allResults.length).toEqual(1);
    expect(allResults[0].name).toEqual('TestName');
  });

  it('should insert a row and fetch it by id', async () => {
    const { id } = await TestModel.insert<Omit<TestType, 'id'>>({ name: 'TestName' });
    const result = await TestModel.findOneById<TestType>(id);
    expect(result.name).toEqual('TestName');
  });
});
```

Model Integration Test

The test shows the model interacting with a real database. I've defined a dedicated TestModel class that inherits from our foundational Model and uses test_table as its test table. The tests exercise the model's core functions: inserting data and then retrieving it, either in full or by a specific ID. To keep the testing environment clean, the suite creates the table before the tests run, empties it after each test, and drops it once all tests have finished.

Here I've leveraged the Template Method design pattern. This pattern is characterized by a base class (often abstract) whose methods act as a template that derived classes can then override or extend. In our case, the base Model supplies the shared query logic, while each subclass supplies its own tableName.
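In its textbook form, Template Method fixes the sequence of steps in a base-class method and lets subclasses fill in individual steps. A minimal, database-free sketch of that shape follows; every name in it (`Exporter`, `render`, `formatRow`, `CsvUserExporter`) is hypothetical and serves only to illustrate the pattern named above.

```typescript
abstract class Exporter<Row> {
  // Template method: fixes the order of the steps for every subclass.
  render(rows: Row[]): string {
    const lines = rows.map((row) => this.formatRow(row));
    return [this.header(), ...lines].join('\n');
  }

  // Hook with a default that subclasses may override.
  protected header(): string {
    return '';
  }

  // Step every subclass must supply.
  protected abstract formatRow(row: Row): string;
}

type UserRow = { first_name: string; last_name: string };

class CsvUserExporter extends Exporter<UserRow> {
  protected header(): string {
    return 'first_name,last_name';
  }

  protected formatRow(row: UserRow): string {
    return `${row.first_name},${row.last_name}`;
  }
}
```

The Model hierarchy follows a looser version of this idea: the base class owns the query flow, and a subclass only plugs in the part that varies.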

Following the pattern established with the Model class, we can create a UserModel class that extends it with user-specific behavior.

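In the base Model, change the table getter from private to protected so subclasses can reuse it:

```typescript
protected static get table() {
  if (!this.tableName) {
    throw new Error('The table name must be defined for the model.');
  }
  return database(this.tableName);
}
```

Then create UserModel in src/models/UserModel/index.ts, following the same layout as the base Model, with the following content: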


```typescript
import { Model } from 'src/models/Model';
import { Role } from 'src/@types';

export type UserType = {
  id: number;
  email: string;
  first_name: string;
  last_name: string;
  password: string;
  role: Role;
};

export class UserModel extends Model {
  // Public so tests can reference UserModel.tableName directly.
  public static tableName = 'users';

  public static async findByEmail(email: string): Promise<UserType | null> {
    const user = await this.table.where('email', email).select('*').first();
    // .first() resolves to undefined when no row matches; normalize to null.
    return user ?? null;
  }
}
```

UserModel class

For rigorous testing, we need a dedicated test database where migrations can run and tables can be emptied without touching development data. Recall the configuration in our knexfile, where we used the same database name across all environments with this line:

```typescript
export default Object.fromEntries(environments.map((env: string) => [env, commonConfig]));
```

To support both a development and a test database, we need to adjust the docker-compose configuration that creates the database and make sure the connection settings are correct. The corresponding connection changes must also be made in the knexfile:

```typescript
// ... configs of knexfile.ts

export default {
  development: {
    ...commonConfig,
  },
  test: {
    ...commonConfig,
    // Same connection settings, but pointing at the dedicated test database.
    connection: {
      ...connection,
      database: process.env.DB_NAME_TEST as string,
    },
  },
};
```

knexfile.ts
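The spreads matter in that knexfile: `...commonConfig` copies the shared settings, and the nested `...connection` copies the connection fields before `database` is overridden, so only the test entry changes. The sketch below checks that with plain objects; the types are simplified stand-ins for the Knex ones, and `modeldb_test` is an assumed value for `DB_NAME_TEST`, a variable that would also need to be added to .env.

```typescript
// Simplified stand-ins for Knex.ConnectionConfig and Knex.Config.
type Conn = { host: string; database: string; user: string };
type Cfg = { client: string; connection: Conn };

const connection: Conn = { host: 'localhost', database: 'modeldb', user: 'testuser' };
const commonConfig: Cfg = { client: 'pg', connection };

const configs = {
  development: { ...commonConfig },
  test: {
    ...commonConfig,
    // Nested spread: keep host/user, replace only the database name.
    connection: { ...connection, database: 'modeldb_test' }, // assumed DB_NAME_TEST value
  },
};
```

Spreading creates new objects, so the shared `connection` object itself is never mutated.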

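With the connection in place, running the tests with NODE_ENV set to "test" makes Knex connect to the test database. Jest sets NODE_ENV to "test" by default when it isn't already defined, which matters because database/index.ts reads the variable once, when the module is first imported. Next, let's write a test for the UserModel.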

```typescript
import { UserModel, UserType } from '.';
import { database } from 'root/database';
import { faker } from '@faker-js/faker';
import { Role } from 'src/@types';

const test_user: Omit<UserType, 'id'> = {
  email: faker.internet.email().toLowerCase(),
  first_name: faker.person.firstName(),
  last_name: faker.person.lastName(),
  password: 'test_password',
  role: Role.User,
};

describe('UserModel', () => {
  beforeAll(async () => {
    process.env.NODE_ENV = 'test';
    // Bring the test database schema up to date.
    await database.migrate.latest();
  });

  afterEach(async () => {
    await database(UserModel.tableName).del();
  });

  afterAll(async () => {
    await database.migrate.rollback();
    await database.destroy();
  });

  it('should insert and retrieve user', async () => {
    await UserModel.insert<typeof test_user>(test_user);
    const allResults = await UserModel.findAll<UserType>();
    expect(allResults.length).toEqual(1);
    expect(allResults[0].first_name).toEqual(test_user.first_name);
  });

  it('should insert user and retrieve by id', async () => {
    const { id } = await UserModel.insert<typeof test_user>(test_user);
    const result = await UserModel.findOneById<UserType>(id);
    expect(result.first_name).toEqual(test_user.first_name);
  });
});
```

UserModel Integration Test

First, a mock user is inserted into the database, and a retrieval confirms that the user was stored successfully by matching their first name. In the second test, once the mock user is in the database, we retrieve it by the user's ID, further confirming that insertion works. Throughout the testing process, it's crucial to keep the environment isolated. To that end, the database is migrated to the latest schema before the tests run, and the user rows are cleared after each test so no data carries over. Finally, when the tests wrap up, a migration rollback undoes the latest migration batch and the database connection is closed.
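Because database/index.ts selects its config when the module is first imported, changing `NODE_ENV` afterwards (as the `beforeAll` hooks do) has no effect on the existing connection. If you would rather defer that choice until the connection is first needed, a memoized factory is one option. `lazy` and `getDatabase` are hypothetical names, `currentEnv` stands in for `process.env.NODE_ENV`, and the object returned by the factory stands in for `Knex(configs[...])`.

```typescript
// Hypothetical helper: create a value on first call, then return the same instance.
function lazy<T>(factory: () => T): () => T {
  let created = false;
  let instance: T | undefined;
  return () => {
    if (!created) {
      instance = factory();
      created = true;
    }
    return instance as T;
  };
}

// Stand-in for process.env.NODE_ENV so the sketch runs without Node typings.
let currentEnv: string | undefined;

// A real version would return Knex(configs[currentEnv || 'development']).
const getDatabase = lazy(() => ({ environment: currentEnv || 'development' }));
```

The trade-off is that callers write `getDatabase()` instead of importing a ready-made `database` instance.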

Using this approach, we can extend each of our models to handle its own specific database interactions, as in the following PostModel:


```typescript
import { Model } from 'src/models/Model';

export type PostType = {
  id: number;
  title: string;
  content: string;
  user_id: number;
};

export class PostModel extends Model {
  public static tableName = 'posts';

  // Public so controllers can call PostModel.findAllByUserId directly.
  public static async findAllByUserId(user_id: number): Promise<PostType[]> {
    // No valid user id (e.g. 0): skip the query and return no posts.
    if (!user_id) return [];
    return this.table.where('user_id', user_id).select('*');
  }
}
```

PostModel.ts

The PostModel targets the 'posts' table, as indicated by its static tableName property. The class also introduces its own method, findAllByUserId, which fetches all posts belonging to a specific user. The method first checks the user_id argument: if it is falsy (for example, 0), it returns an empty array without querying the database; otherwise it returns every post with that user_id.

If we need a generic update method, we can add one more method to the base Model:

```typescript
public static async updateOneById<Payload>(
  id: number,
  data: Payload
): Promise<{ id: number } | null> {
  const [result] = await this.table.where({ id }).update(data).returning('id');
  // No matching row: the returned array is empty, so report null.
  return result ?? null;
}
```

Update by id in base Model

This updateOneById method is then available to every model subclass.

Conclusion

In wrapping up, it's evident that a modular approach not only simplifies our development process but also improves the maintainability and scalability of our applications. By separating logic into distinct models, we set a clear path for future growth, ensuring that each module can be refined or expanded without causing disruptions elsewhere.

These models aren't mere theoretical constructs; they're practical tools that plug easily into controllers, keeping code streamlined and reusable. So, as we move forward, let's embrace the power of modularity and see firsthand its role in shaping forward-thinking applications.

I welcome your feedback and am happy to discuss any aspect of this approach.
