
As a computer graphics and programming languages geek, I’m extremely happy to have found myself working on several GPU compilers over the past two years. This began in 2021, when I started contributing to taichi, a Python library that compiles Python functions into GPU kernels in CUDA, Metal, or Vulkan. Later on, I joined Meta and started working on SparkSL, the shader language that powers cross-platform GPU programming for AR effects on Instagram and Facebook. Apart from personal enjoyment, I have always believed, or at least hoped, that these frameworks are genuinely useful: they make GPU programming more accessible to non-experts, empowering people to create fascinating graphics content without having to master complex GPU concepts.

In my latest installment of compilers, I turned my eyes to WebGPU, the next-generation graphics API for the web. WebGPU promises to bring high-performance graphics via low CPU overhead and explicit GPU control, aligning with the trend started by Vulkan and D3D12 some seven years ago. Just like Vulkan, the performance benefits of WebGPU come at the cost of a steep learning curve. Although I’m confident this won’t stop talented programmers around the world from building amazing content with WebGPU, I wanted to give people a way to play with WebGPU without having to confront its complexity. That is how taichi.js came to be.

Under the taichi.js programming model, programmers don’t have to reason about WebGPU concepts such as devices, command queues, bind groups, and so on. Instead, they write plain JavaScript functions, and the compiler translates those functions into WebGPU compute or render pipelines. This means anyone can write WebGPU code via taichi.js, as long as they are familiar with basic JavaScript syntax.

The remainder of this article demonstrates the programming model of taichi.js via a “Game of Life” program. As you’ll see, with fewer than 100 lines of code, we can create a fully parallel WebGPU program containing three GPU compute pipelines plus a render pipeline. The full source code of the demo can be found here, and if you want to play with the code without having to set up any local environment, go to this page.

The Game

The Game of Life is a classic example of a cellular automaton, a system of cells that evolve over time according to simple rules. It was invented by the mathematician John Conway in 1970 and has since become a favorite of computer scientists and mathematicians alike. The game is played on a two-dimensional grid, where each cell can be either alive or dead. The rules of the game are simple:

- If a living cell has fewer than two or more than three living neighbors, it dies.

- If a dead cell has exactly three living neighbors, it becomes alive.
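The rules leave one case implicit: any cell they don’t mention simply keeps its state. A minimal sketch in plain JavaScript makes all the cases explicit; nextState is a hypothetical helper for illustration, not part of taichi.js.

```javascript
// Illustrative only: the Game of Life rules as a plain function.
// `alive` is 1 (alive) or 0 (dead); `neighbors` counts live cells among the 8 surrounding ones.
function nextState(alive, neighbors) {
  if (alive === 1) {
    // A live cell dies with fewer than two or more than three live neighbors;
    // with two or three it survives.
    return neighbors < 2 || neighbors > 3 ? 0 : 1;
  }
  // A dead cell becomes alive with exactly three live neighbors; otherwise it stays dead.
  return neighbors === 3 ? 1 : 0;
}

console.log(nextState(1, 1)); // 0: too few neighbors
console.log(nextState(1, 2)); // 1: survives
console.log(nextState(1, 4)); // 0: too many neighbors
console.log(nextState(0, 3)); // 1: born
```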

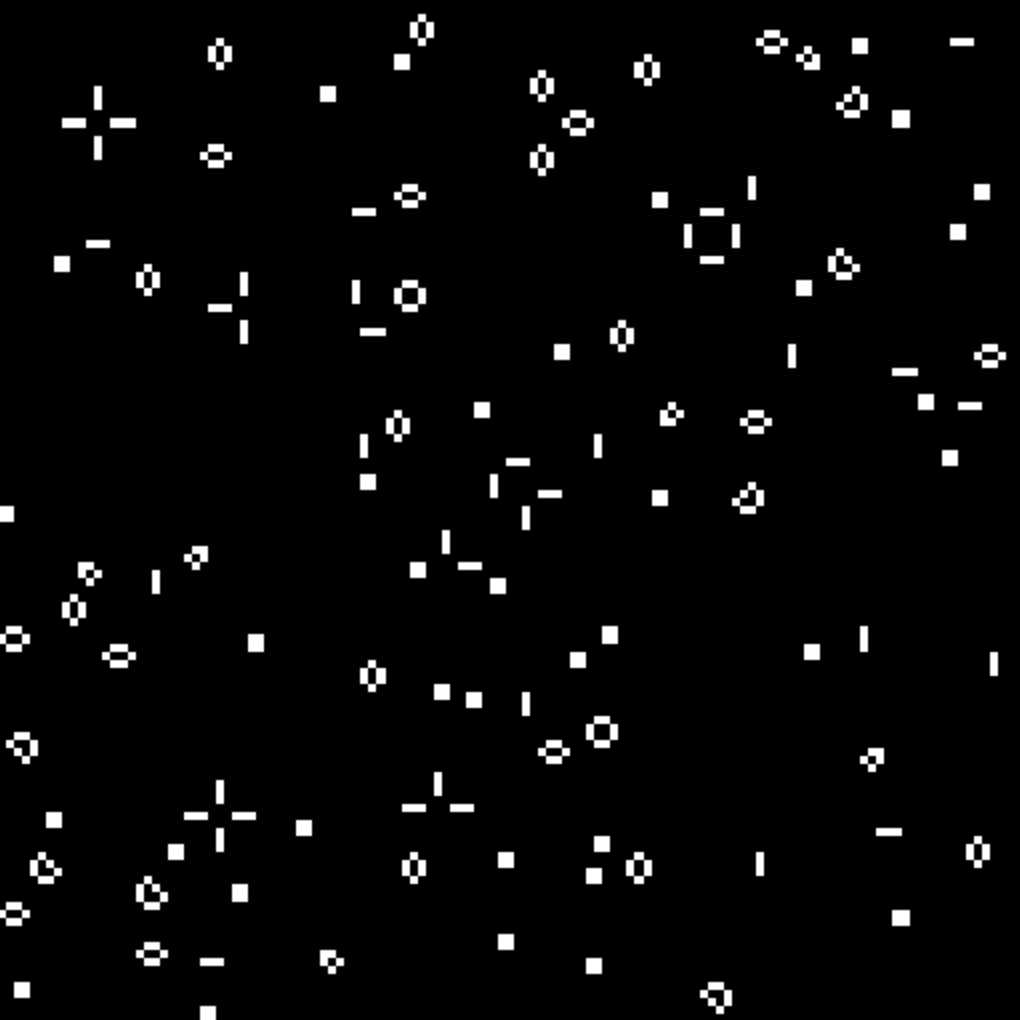

Despite its simplicity, the Game of Life can exhibit surprising behavior. Starting from any random initial state, the game often converges to a state where a few patterns dominate, as if they were “species” that survived through evolution.

Simulation

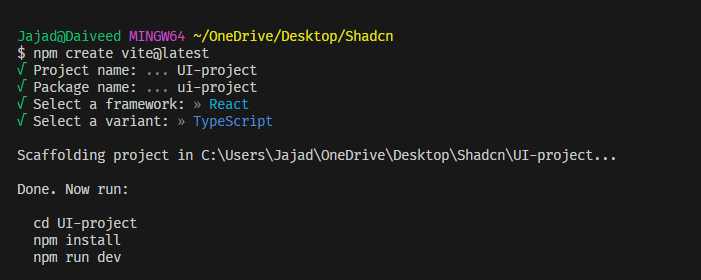

Let’s dive into the Game of Life implementation using taichi.js. First, we import the taichi.js library under the shorthand ti and define an async main() function, which will contain all of our logic. Inside main(), we begin by calling ti.init(), which initializes the library and its WebGPU contexts.

```javascript
import * as ti from "path/to/taichi.js"

let main = async () => {
    await ti.init();
    ...
};

main()
```

Following ti.init(), let’s define the data structures needed by the “Game of Life” simulation:

```javascript
let N = 128;

let liveness = ti.field(ti.i32, [N, N])
let numNeighbors = ti.field(ti.i32, [N, N])

ti.addToKernelScope({ N, liveness, numNeighbors });
```

Here, we defined two variables, liveness and numNeighbors, both of which are ti.fields. In taichi.js, a “field” is essentially an n-dimensional array, whose shape is given by the second argument to ti.field(). The element type of the array is defined by the first argument. In this case, we have ti.i32, indicating 32-bit integers. However, field elements can also be of other, more complex types, including vectors, matrices, and even structs.
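To make the “n-dimensional array” idea concrete, here is a rough plain-JavaScript analog of a 2D field of i32 values: a flat, row-major Int32Array with an (i, j) accessor. The class name Grid2D and its row-major layout are illustrative assumptions, not a description of how taichi.js stores fields on the GPU.

```javascript
// Illustrative analog only: a 2D field of 32-bit integers modeled as a
// flat, row-major Int32Array. This is NOT how taichi.js stores fields on the GPU.
class Grid2D {
  constructor(rows, cols) {
    this.rows = rows;
    this.cols = cols;
    this.data = new Int32Array(rows * cols); // every element starts at 0
  }
  // Row-major layout: element (i, j) lives at offset i * cols + j.
  index(i, j) {
    return i * this.cols + j;
  }
  get(i, j) {
    return this.data[this.index(i, j)];
  }
  set(i, j, value) {
    this.data[this.index(i, j)] = value; // Int32Array coerces value to a 32-bit integer
  }
}

const N = 128;
const liveness = new Grid2D(N, N);
liveness.set(3, 5, 1);
console.log(liveness.get(3, 5)); // 1
console.log(liveness.data.length); // 16384
```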

The call ti.addToKernelScope({...}) ensures that the variables N, liveness, and numNeighbors are visible in taichi.js “kernels”, which are GPU compute and/or render pipelines defined in the form of JavaScript functions. For example, the following init kernel is used to populate our grid cells with initial liveness values, where each cell has a 20% chance of being alive initially:

```javascript
let init = ti.kernel(() => {
    for (let I of ti.ndrange(N, N)) {
        liveness[I] = 0
        let f = ti.random()
        if (f < 0.2) {
            liveness[I] = 1
        }
    }
})

init()
```

The init() kernel is created by calling ti.kernel() with a JavaScript lambda as the argument. Under the hood, taichi.js looks at the JavaScript string representation of this lambda and compiles its logic into WebGPU code. Here, the lambda contains a for-loop whose loop index I iterates through ti.ndrange(N, N). This means that I takes N×N different values, ranging from [0, 0] to [N-1, N-1].

Here comes the magical part: in taichi.js, all the top-level for-loops in a kernel are parallelized. More specifically, for each possible value of the loop index, taichi.js allocates one WebGPU compute shader thread to execute it. In this case, we dedicate one GPU thread to each cell in our “Game of Life” simulation, initializing it to a random liveness state. The randomness comes from the ti.random() function, which is one of the many functions provided by the taichi.js library for use in kernels. A full list of these built-in utilities is available here in the taichi.js documentation.
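As a rough CPU counterpart of the init kernel, the sketch below walks the same N×N index set sequentially. ndrange here is a hypothetical generator that only imitates the set of indices produced by ti.ndrange(N, N); on the GPU each index would get its own thread, so no particular order is implied. The random parameter is an addition for testability and defaults to Math.random.

```javascript
// Hypothetical generator imitating the index set of ti.ndrange(a, b):
// every pair [i, j] with 0 <= i < a and 0 <= j < b.
function* ndrange(a, b) {
  for (let i = 0; i < a; i++) {
    for (let j = 0; j < b; j++) {
      yield [i, j];
    }
  }
}

// Sequential CPU analog of the init kernel: each cell is alive with probability p.
// On the GPU, every iteration of this loop would run on its own thread.
function initLiveness(N, p = 0.2, random = Math.random) {
  const liveness = new Int32Array(N * N); // row-major N x N grid
  for (const [i, j] of ndrange(N, N)) {
    liveness[i * N + j] = random() < p ? 1 : 0;
  }
  return liveness;
}

const N = 128;
const liveness = initLiveness(N);
const aliveFraction = liveness.reduce((sum, x) => sum + x, 0) / (N * N);
console.log(aliveFraction); // a value near 0.2; varies from run to run
```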

Having created the initial state of the game, let’s move on to defining how the game evolves. These are the two taichi.js kernels that define this evolution:

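```javascript
let countNeighbors = ti.kernel(() => {
    for (let I of ti.ndrange(N, N)) {
        let neighbors = 0
        for (let delta of ti.ndrange(3, 3)) {
            // delta - 1 ranges from [-1, -1] to [1, 1]; wrap the offset index around the grid.
            // Adding N before % keeps the index non-negative even if % truncates toward zero.
            let J = (I + delta - 1 + N) % N
            // Skip the cell itself (delta == [1, 1]) and count live neighbors.
            if ((J.x != I.x || J.y != I.y) && liveness[J] == 1) {
                neighbors = neighbors + 1;
            }
        }
        numNeighbors[I] = neighbors
    }
});
```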

```javascript
let updateLiveness = ti.kernel(() => {
    for (let I of ti.ndrange(N, N)) {
        let neighbors = numNeighbors[I]
        if (liveness[I] == 1) {
            if (neighbors < 2 || neighbors > 3) {
                liveness[I] = 0;
            }
        }
        else {
            if (neighbors == 3) {
                liveness[I] = 1;
            }
        }
    }
})
```

Just like the init() kernel we saw before, these two kernels have top-level for-loops iterating over every grid cell, which are parallelized by the compiler. In countNeighbors(), for each cell, we look at the eight neighboring cells and count how many of them are “alive.” The number of live neighbors is stored into the numNeighbors field. Notice that when iterating through neighbors, the loop for (let delta of ti.ndrange(3, 3)) {...} is not parallelized, because it is not a top-level loop. The loop index delta ranges from [0, 0] to [2, 2] and is used to offset the original cell index I. We avoid out-of-bounds accesses by taking the result modulo N. (For the topologically inclined reader, this essentially means the game has toroidal boundary conditions.)
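For readers who want to check the logic without a GPU, here is a sequential CPU sketch in plain JavaScript of what the two kernels compute, using a flat row-major Int32Array in place of the fields. The function names mirror the kernels but are otherwise hypothetical; the toroidal wrap is written as (k + N) % N because JavaScript’s % keeps the sign of the dividend. A “blinker” (three cells in a row) serves as a quick sanity check, since it should flip between horizontal and vertical every generation.

```javascript
// Sequential CPU analog of countNeighbors() and updateLiveness() (not taichi.js code).
// The grid is a flat, row-major Int32Array of size N * N.

// Wrap an index onto [0, N). Adding N first keeps it non-negative (in JS, -1 % 5 === -1).
const wrap = (k, N) => (k + N) % N;

function countNeighbors(liveness, N) {
  const numNeighbors = new Int32Array(N * N);
  for (let i = 0; i < N; i++) {
    for (let j = 0; j < N; j++) {
      let neighbors = 0;
      for (let di = -1; di <= 1; di++) {
        for (let dj = -1; dj <= 1; dj++) {
          if (di === 0 && dj === 0) continue; // skip the cell itself
          neighbors += liveness[wrap(i + di, N) * N + wrap(j + dj, N)];
        }
      }
      numNeighbors[i * N + j] = neighbors;
    }
  }
  return numNeighbors;
}

function updateLiveness(liveness, numNeighbors) {
  for (let k = 0; k < liveness.length; k++) {
    const n = numNeighbors[k];
    if (liveness[k] === 1) {
      if (n < 2 || n > 3) liveness[k] = 0;
    } else if (n === 3) {
      liveness[k] = 1;
    }
  }
}

// A horizontal blinker on a 5x5 torus: row 2, columns 1..3.
const N = 5;
const grid = new Int32Array(N * N);
grid[2 * N + 1] = grid[2 * N + 2] = grid[2 * N + 3] = 1;
updateLiveness(grid, countNeighbors(grid, N));

// Collect live cells as [row, col] pairs.
const alive = [];
grid.forEach((v, k) => { if (v === 1) alive.push([Math.floor(k / N), k % N]); });
console.log(JSON.stringify(alive)); // [[1,2],[2,2],[3,2]]
```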

Having counted the number of neighbors for each cell, we move on to updating their liveness states in the updateLiveness() kernel. This is a simple matter of reading the liveness state of each cell and its current number of live neighbors, then writing back a new liveness value according to the rules of the game. As usual, this process applies to all cells in parallel.

This essentially concludes the implementation of the game’s simulation logic. Next, we will see how to define a WebGPU render pipeline to draw the game’s evolution onto a webpage.

Rendering

Writing rendering code in taichi.js is slightly more involved than writing general-purpose compute kernels, and it does require some understanding of vertex shaders, fragment shaders, and rasterization pipelines in general. However, you’ll find that the simple programming model of taichi.js makes these concepts extremely easy to work with and reason about.

Before drawing anything, we need access to a canvas to draw onto. Assuming that a canvas named result_canvas exists in the HTML, the following lines of code create a ti.CanvasTexture object, which represents a texture that can be rendered onto by a taichi.js render pipeline.

```javascript
let htmlCanvas = document.getElementById('result_canvas');
htmlCanvas.width = 512;
htmlCanvas.height = 512;
let renderTarget = ti.canvasTexture(htmlCanvas);
```

On our canvas, we will render a square, and we will draw the Game’s 2D grid onto this square. On GPUs, geometries to be rendered are represented in the form of triangles. In this case, the square that we are trying to render will be represented as two triangles. These two triangles are defined in a ti.field, which stores the coordinates of each of the six vertices of the two triangles:

```javascript
let vertices = ti.field(ti.types.vector(ti.f32, 2), [6]);
await vertices.fromArray([
    [-1, -1],
    [1, -1],
    [-1, 1],
    [1, -1],
    [1, 1],
    [-1, 1],
]);
```

As we did with the liveness and numNeighbors fields, we need to explicitly declare the renderTarget and vertices variables to be visible in GPU kernels in taichi.js:

```javascript
ti.addToKernelScope({ vertices, renderTarget });
```

Now we have all the data we need to implement our render pipeline. Here is the implementation of the pipeline itself:

```javascript
let render = ti.kernel(() => {
    ti.clearColor(renderTarget, [0.0, 0.0, 0.0, 1.0]);
    for (let v of ti.inputVertices(vertices)) {
        ti.outputPosition([v.x, v.y, 0.0, 1.0]);
        ti.outputVertex(v);
    }
    for (let f of ti.inputFragments()) {
        let coord = (f + 1) / 2.0;
        let cellIndex = ti.i32(coord * (liveness.dimensions - 1));
        let live = ti.f32(liveness[cellIndex]);
        ti.outputColor(renderTarget, [live, live, live, 1.0]);
    }
});
```

Inside the render() kernel, we begin by clearing the renderTarget with an all-black color, represented in RGBA as [0.0, 0.0, 0.0, 1.0].

Next, we define two top-level for-loops, which, as you already know, are parallelized in WebGPU. However, unlike the previous loops, which iterated over ti.ndrange objects, these loops iterate over ti.inputVertices(vertices) and ti.inputFragments(), respectively. This indicates that these loops will be compiled into WebGPU “vertex shaders” and “fragment shaders,” which work together as a render pipeline.

The vertex shader has two tasks:

- For each triangle vertex, compute its final location on the screen (or, more accurately, its “Clip Space” coordinates). In a 3D rendering pipeline, this usually involves a series of matrix multiplications that transform the vertex’s model coordinates into world space, then into camera space, and finally into “Clip Space.” For our simple 2D square, however, the input coordinates of the vertices are already at their correct values in clip space, so we can skip all of that. All we have to do is append a fixed z value of 0.0 and a fixed w value of 1.0 (don’t worry if you don’t know what those are; they are not important here!).

  ```javascript
  ti.outputPosition([v.x, v.y, 0.0, 1.0]);
  ```

- For each vertex, generate data to be interpolated and then passed into the fragment shader. In a render pipeline, after the vertex shader is executed, a built-in process called “rasterization” is executed on all the triangles. This is a hardware-accelerated process that computes, for each triangle, which pixels are covered by that triangle. These pixels are also known as “fragments.” For each triangle, the programmer is allowed to generate additional data at each of the three vertices, which will be interpolated during the rasterization stage. For each fragment in the triangle, its corresponding fragment shader thread receives the interpolated values according to its location within the triangle.

  In our case, the fragment shader only needs to know the location of the fragment within the 2D square, so that it can fetch the corresponding liveness value of the game. For this purpose, it suffices to pass the 2D vertex coordinate into the rasterizer, which means that the fragment shader will receive the interpolated 2D location of the pixel itself:

  ```javascript
  ti.outputVertex(v);
  ```
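To build intuition for what the rasterizer does with the value passed to ti.outputVertex(v), here is a simplified CPU model: it tests whether a point lies inside a triangle using edge functions and, if so, blends the three per-vertex values with barycentric weights. This is an illustrative sketch only; real GPU rasterizers also handle pixel-center sampling, tie-breaking fill rules, and perspective-correct interpolation, none of which are modeled here. Because the attribute in our pipeline is the vertex position itself, the interpolated value at a point is simply that point’s own position.

```javascript
// Illustrative model of rasterization (not taichi.js or WebGPU code).

// Edge function: twice the signed area of triangle (a, b, p).
// Its sign says which side of the edge a -> b the point p lies on.
const edge = (a, b, p) =>
  (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]);

// Barycentric weights [w0, w1, w2] of p in triangle t, or null if p is outside.
// Points on an edge count as inside here (real GPUs use fill rules instead).
function barycentric(t, p) {
  const area = edge(t[0], t[1], t[2]);
  const w0 = edge(t[1], t[2], p) / area;
  const w1 = edge(t[2], t[0], p) / area;
  const w2 = edge(t[0], t[1], p) / area;
  return w0 >= 0 && w1 >= 0 && w2 >= 0 ? [w0, w1, w2] : null;
}

// Blend a 2D per-vertex attribute at point p using the barycentric weights.
function interpolate(t, attrs, p) {
  const w = barycentric(t, p);
  if (w === null) return null;
  return [0, 1].map((c) => w[0] * attrs[0][c] + w[1] * attrs[1][c] + w[2] * attrs[2][c]);
}

// The six vertices from the article, grouped into two triangles.
const vertices = [[-1, -1], [1, -1], [-1, 1], [1, -1], [1, 1], [-1, 1]];
const triangles = [vertices.slice(0, 3), vertices.slice(3, 6)];

// The attribute is the position itself, so a covered point gets its own position back.
const p = [0.25, -0.5];
for (const t of triangles) {
  const value = interpolate(t, t, p);
  if (value !== null) console.log(value); // [ 0.25, -0.5 ] (only the first triangle covers p)
}
```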

Moving on to the fragment shader:

```javascript
for (let f of ti.inputFragments()) {
    let coord = (f + 1) / 2.0;
    let cellIndex = ti.i32(coord * (liveness.dimensions - 1));
    let live = ti.f32(liveness[cellIndex]);
    ti.outputColor(renderTarget, [live, live, live, 1.0]);
}
```

The value f is the interpolated pixel location passed on from the vertex shader. Using this value, the fragment shader looks up the liveness state of the game cell that covers this pixel. This is done by first converting the pixel coordinates f into the [0, 0] ~ [1, 1] range and storing the result in the coord variable. This is then multiplied by the dimensions of the liveness field minus one, which produces the index of the covering cell. Next, we fetch the live value of this cell, which is 0 if it is dead and 1 if it is alive. Finally, we output the RGBA value of this pixel onto the renderTarget, where the R, G, and B components are all equal to live, and the A component is equal to 1, for full opacity.
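The index arithmetic in the fragment shader is easy to check on the CPU. The sketch below uses a hypothetical helper, cellIndexOf (not a taichi.js function), and assumes ti.i32 converts by truncation, as WGSL’s i32() conversion does; since coord is never negative here, truncating is the same as flooring.

```javascript
// CPU version of the fragment shader's index math (illustrative, not taichi.js code).
// f is a clip-space position in [-1, 1] x [-1, 1]; N is the grid size.
function cellIndexOf(f, N) {
  // Map each component from [-1, 1] to [0, 1], as in `coord = (f + 1) / 2.0`.
  const coord = f.map((c) => (c + 1) / 2);
  // Scale by (N - 1) and truncate, mirroring ti.i32(coord * (liveness.dimensions - 1)).
  return coord.map((c) => Math.trunc(c * (N - 1)));
}

const N = 128;
console.log(cellIndexOf([-1, -1], N)); // [ 0, 0 ]
console.log(cellIndexOf([0, 0], N)); // [ 63, 63 ]
console.log(cellIndexOf([0.999, 0.999], N)); // [ 126, 126 ]
console.log(cellIndexOf([1, 1], N)); // [ 127, 127 ]
```

One consequence of scaling by N - 1 rather than N: only coord values of exactly 1.0 reach index N - 1, so with this mapping the last row and column of cells get essentially no screen area. Scaling by N and clamping the result to N - 1 would instead give every cell an equal share, if that matters for your use.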

With the render pipeline defined, all that’s left is to put everything together by calling the simulation kernels and the render pipeline every frame:

```javascript
async function frame() {
    countNeighbors()
    updateLiveness()
    await render();
    requestAnimationFrame(frame);
}

await frame();
```

And that’s it! We’ve completed a WebGPU-based “Game of Life” implementation in taichi.js. If you run the program, you should see an animation where 128×128 cells evolve for around 1400 generations before converging to a few species of stabilized organisms.

Exercises

I hope you found this demo interesting! If you did, then I have a few extra exercises and questions that I invite you to experiment with and think about. (By the way, for quickly experimenting with the code, go to this page.)

- [Easy] Add an FPS counter to the demo! What FPS can you obtain with the current setting of N = 128? Try increasing the value of N and see how the framerate changes. Would you be able to write a vanilla JavaScript program that reaches this framerate without taichi.js or WebGPU?

- [Medium] What would happen if we merged countNeighbors() and updateLiveness() into a single kernel and kept the neighbors counter as a local variable? Would the program still work correctly all the time?

- [Hard] In taichi.js, a ti.kernel(..) always produces an async function, regardless of whether it contains compute pipelines or render pipelines. If you had to guess, what is the meaning of this async-ness? And what is the meaning of calling await on these async calls? Finally, in the frame function defined above, why did we put await only on the render() call, but not on the other two?

The last two questions are especially interesting, as they touch on the inner workings of the compiler and runtime of the taichi.js framework, as well as the principles of GPU programming. Let me know your answers!

Resources

Of course, this Game of Life example only scratches the surface of what you can do with taichi.js. From real-time fluid simulations to physically based renderers, there are many other taichi.js programs for you to play with, and even more for you to write yourself. For more examples and learning resources, check out:

Happy coding!
