Welcome back to this series on uploading files to the web. You don’t need to read the previous posts to follow along with this one, but the whole series looks like this:

- Upload files with HTML

- Upload files with JavaScript

- Receive uploads in Node.js (Nuxt.js)

- Optimize storage costs with Object Storage

- Optimize performance with a CDN

- Upload security & malware protection

The previous posts covered uploading files using HTML and JavaScript, along with the steps required to send them as multipart/form-data requests.

Today, we’re going to the backend to receive those multipart/form-data requests and access the binary data from those files.

Some Background

Most of the concepts in this tutorial should broadly apply across frameworks, runtimes, and languages, but the code examples will be more specific.

I’ll be working within a Nuxt.js project that runs in a Node.js environment. Nuxt has some particular ways of defining API routes, which require calling a global function called defineEventHandler.

/**
 * @see https://nuxt.com/docs/guide/directory-structure/server
 * @see https://nuxt.com/docs/guide/concepts/server-engine
 * @see https://github.com/unjs/h3
 */
export default defineEventHandler((event) => {
  return { ok: true };
});

The event argument provides access to work directly with the underlying Node.js request object (a.k.a. IncomingMessage) through event.node.req. So we can write our Node-specific code in an abstraction, like a function called doSomethingWithNodeRequest that receives this Node request object and does something with it.

export default defineEventHandler((event) => {
  const nodeRequestObject = event.node.req;
  doSomethingWithNodeRequest(nodeRequestObject);
  return { ok: true };
});

/**
 * @param {import('http').IncomingMessage} req
 */
function doSomethingWithNodeRequest(req) {
  // Do Node-specific stuff here
}

Working directly with Node in this way means the code and concepts should apply regardless of whatever higher-level framework you’re working with. Ultimately, we’ll finish things up working in Nuxt.js.

Dealing with multipart/form-data in Node.js

In this section, we’ll dive into some low-level concepts that are good to understand, but not strictly necessary. Feel free to skip this section if you’re already familiar with chunks, streams, and buffers in Node.js.

Uploading a file requires sending a multipart/form-data request. In these requests, the browser will split the data into little “chunks” and send them through the connection, one chunk at a time. This is necessary because files can be too large to send as one massive payload.

Chunks of data being sent over time make up what’s called a “stream”. Streams are kind of hard to understand the first time around, at least for me. They deserve a full article (or many) on their own, so I’ll share web.dev’s excellent guide in case you want to learn more.

Basically, a stream is sort of like a conveyor belt of data, where each chunk can be processed as it comes in. In terms of an HTTP request, the backend will receive parts of the request, one chunk at a time.

Node.js provides us with an event handler API through the request object’s on method, which allows us to listen to “data” events as they are streamed into the backend.

/**

* @param {import('http').IncomingMessage} req

*/

serve as doSomethingWithNodeRequest(req) {

req.on("information", (information) => {

console.log(information);

}

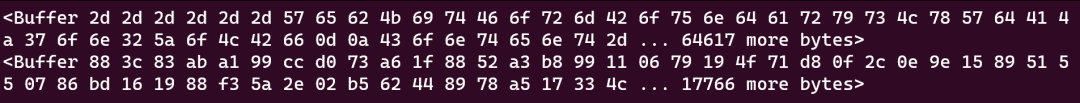

}For instance, after I add a photograph of Nugget creating a adorable yawny face, then have a look at the server’s console, I’ll see some bizarre issues that appear to be this:

Those two pieces of garbled nonsense are called “buffers”, and they represent the two chunks of data that made up the request stream containing the cute photo of Nugget.

A buffer is a region of physical memory used to temporarily store data while it’s being transferred from one place to another.

Buffers are another weird, low-level concept I have to explain when talking about working with files in JavaScript. JavaScript doesn’t work directly on binary data, so we get to learn about buffers. It’s also OK if these concepts still feel a little vague. Understanding everything fully isn’t the important part right now, and as you continue to learn about file transfers, you’ll gain a better understanding of how it all works together.
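To make chunks and buffers a little more concrete, here is a minimal sketch that runs without a server. It uses Node’s built-in Readable.from as a stand-in for a request stream; the two-chunk split and the variable names are made up for illustration.

```javascript
const { Readable } = require('node:stream');

// A Buffer is a fixed-length sequence of raw bytes.
const buffer = Buffer.from('I love you!', 'utf8');
console.log(buffer);            // <Buffer 49 20 6c 6f 76 65 20 79 6f 75 21>
console.log(buffer.length);     // number of bytes, not characters
console.log(buffer.toString()); // decodes the bytes back into text

// Stand-in for a request stream that arrives as two chunks (hypothetical split).
const fakeRequest = Readable.from([
  Buffer.from('I love '),
  Buffer.from('you!'),
]);

const received = [];

fakeRequest.on('data', (chunk) => {
  // Each chunk shows up as its own Buffer, just like with a real request.
  received.push(chunk);
  console.log('chunk:', chunk);
});

fakeRequest.on('end', () => {
  // Only after the stream ends do we have every byte of the payload.
  console.log(Buffer.concat(received).toString());
});
```

Each “data” event hands over one Buffer, and the text only becomes readable once the bytes are joined and decoded.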

Working with one partial chunk of data isn’t super useful. What we can do instead is rewrite our function into something we can work with:

- Return a Promise to make the async syntax easy to work with.

- Provide an Array to store the chunks of data to use later on.

- Listen for the “data” event and add the chunks to our collection as they arrive.

- Listen to the “end” event and convert the chunks into something we can work with.

- Resolve the Promise with the final request payload.

- We should also remember to handle “error” events.

/**
 * @param {import('http').IncomingMessage} req
 */
function doSomethingWithNodeRequest(req) {
  return new Promise((resolve, reject) => {
    /** @type {any[]} */
    const chunks = [];
    req.on('data', (data) => {
      chunks.push(data);
    });
    req.on('end', () => {
      const payload = Buffer.concat(chunks).toString()
      resolve(payload);
    });
    req.on('error', reject);
  });
}

Each time the request receives some data, it pushes that data into the array of chunks.

So with that function set up, we can await the returned Promise until the request has finished receiving all the data from the request stream, and log the resolved value to the console.

export default defineEventHandler((match) => {

const nodeRequestObject = match.node.req;

const frame = look forward to doSomethingWithNodeRequest(match.node.req);

console.log(frame)

go back { good enough: true };

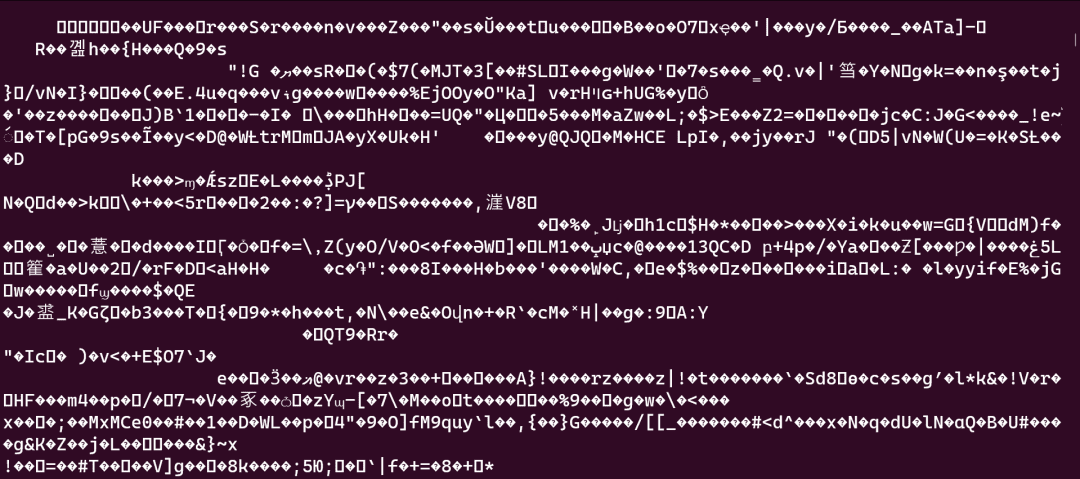

});That is the request frame. Isn’t it stunning?

Should you add a picture dossier, it’ll almost certainly appear to be an alien has hacked your laptop. Don’t fear, it hasn’t. That’s actually what the textual content contents of that dossier appear to be. You’ll even check out opening up a picture dossier in a elementary textual content editor and notice the similar factor.

If I add a extra elementary instance, like a .txt dossier with some undeniable textual content in it, the frame would possibly appear to be this:

------WebKitFormBoundary4Ay52hDeKB5x2vXP
Content-Disposition: form-data; name="file"; filename="dear-nugget.txt"
Content-Type: text/plain

I love you!
------WebKitFormBoundary4Ay52hDeKB5x2vXP--

Notice that the request is broken up into different sections for each form field. The sections are separated by the “form boundary”, which the browser will inject by default. I’ll skip going into more details, so if you want to read more, check out Content-Disposition on MDN. The important thing to know is that multipart/form-data requests are much more complex than just key/value pairs.
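To see that structure in code, here is a deliberately naive sketch that splits a text body like the one above into its sections. The splitMultipart helper is hypothetical and only works for small, text-only bodies; it is meant to illustrate the format, not to stand in for a real parser.

```javascript
// A hand-written body modeled on the example above (HTTP uses CRLF line endings).
const boundary = '----WebKitFormBoundary4Ay52hDeKB5x2vXP';
const body = [
  `--${boundary}`,
  'Content-Disposition: form-data; name="file"; filename="dear-nugget.txt"',
  'Content-Type: text/plain',
  '',
  'I love you!',
  `--${boundary}--`,
  '',
].join('\r\n');

/**
 * Naive illustration only: splits a text body into its parts.
 * @param {string} text
 * @param {string} boundary
 */
function splitMultipart(text, boundary) {
  return text
    .split(`--${boundary}`)
    .slice(1, -1) // drop the empty preamble and the closing "--" remainder
    .map((part) => {
      // Headers and content are separated by an empty line.
      const [rawHeaders, ...rest] = part.trim().split('\r\n\r\n');
      const name = /\bname="([^"]*)"/.exec(rawHeaders)?.[1];
      const filename = /\bfilename="([^"]*)"/.exec(rawHeaders)?.[1];
      return { name, filename, content: rest.join('\r\n\r\n') };
    });
}

const parts = splitMultipart(body, boundary);
console.log(parts);
```

Even this toy version has to deal with delimiters, per-part headers, and line endings, and it would mangle binary files, which hints at why multipart parsing is better left to a library.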

Most server frameworks provide built-in tools to access the body of a request. So we’ve actually reinvented the wheel. For example, Nuxt provides a global readBody function. So we could have accomplished the same thing without writing our own code:

export default defineEventHandler(async (event) => {
  // readBody takes the event itself, not the Node request object
  const body = await readBody(event);
  console.log(body)
  return { ok: true };
});

This works fine for other content types, but for multipart/form-data, it has issues. The entire body of the request is being read into memory as one giant string of text. This includes the Content-Disposition information, the form boundaries, and the form fields and values. Never mind the fact that the files aren’t even being written to disk. The big issue here is that if a very large file is uploaded, it could consume all the memory of the application and cause it to crash.

The solution is, once again, working with streams.

When our server receives a chunk of data from the request stream, instead of storing it in memory, we can pipe it to a different stream. Specifically, we can send it to a stream that writes data to the file system using createWriteStream. As the chunks come in from the request, that data gets written to the file system, then released from memory.
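As a rough sketch of that idea, the snippet below pipes a readable stream into a file using Node’s built-in pipeline helper. The saveStreamToDisk name, the temporary file path, and the fake request made with Readable.from are all assumptions for illustration; in a real handler the source would be the Node request object.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');
const { Readable } = require('node:stream');
const { pipeline } = require('node:stream/promises');

/**
 * Streams a readable source straight to a file on disk.
 * @param {import('stream').Readable} source e.g. the Node request object
 * @param {string} destination path of the file to write
 */
async function saveStreamToDisk(source, destination) {
  // pipeline() connects the streams, waits until writing is finished,
  // and destroys both streams if either one errors.
  await pipeline(source, fs.createWriteStream(destination));
  return destination;
}

// Stand-in for a request stream (hypothetical data and file name).
const fakeRequest = Readable.from([Buffer.from('I love '), Buffer.from('you!')]);
const destination = path.join(os.tmpdir(), `upload-sketch-${process.pid}.txt`);

const saved = saveStreamToDisk(fakeRequest, destination).then((filePath) => {
  console.log('written to', filePath);
  return filePath;
});
```

Only one chunk at a time needs to sit in memory here. Keep in mind that piping a raw multipart/form-data request this way would also write the boundaries and part headers into the file, so it is not a complete upload handler on its own.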

That’s about as far down as I want to go into the low-level concepts. Let’s go back up to solving the problem without reinventing the wheel.

Use a library to stream data onto disk

Probably my best advice for handling file uploads is to reach for a library that does all this work for you:

- Parse multipart/form-data requests

- Separate the files from the other form fields

- Stream the file data into the file system

- Provide you with the form field data as well as useful data about the files

Today, I’m going to be using a library called formidable. You can install it with npm install formidable, then import it into your project.

import formidable from 'formidable';

Formidable works directly with the Node request object, which we conveniently already grabbed from the Nuxt event (“Wow, what amazing foresight!!!” 🤩).

So we can modify our doSomethingWithNodeRequest function to use formidable instead. It should still return a promise because formidable uses callbacks, but promises are nicer to work with. Otherwise, we can mostly replace the contents of the function with formidable. We’ll need to create a formidable instance, use it to parse the request object, and as long as there isn’t an error, we can resolve the promise with a single object that contains both the form fields and the files.

/**
 * @param {import('http').IncomingMessage} req
 */
function doSomethingWithNodeRequest(req) {
  return new Promise((resolve, reject) => {
    /** @see https://github.com/node-formidable/formidable/ */
    const form = formidable({ multiples: true })
    form.parse(req, (error, fields, files) => {
      if (error) {
        reject(error);
        return;
      }
      resolve({ ...fields, ...files });
    });
  });
}

This provides us with a handy function to parse multipart/form-data using promises and access the request’s regular form fields, as well as information about the files that were written to disk using streams.
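The callback-to-promise wrapping used above is a general pattern, and it can be tried without installing anything. In this sketch, fakeParse is a made-up stand-in for a callback-style API with an error-first callback; it is not part of formidable.

```javascript
/**
 * Hypothetical stand-in for a callback-style API such as form.parse().
 * It calls back with (error, fields, files), error first.
 */
function fakeParse(input, callback) {
  setImmediate(() => {
    if (!input) {
      callback(new Error('nothing to parse'));
      return;
    }
    callback(null, { message: 'hi' }, { file: { originalFilename: 'dear-nugget.txt' } });
  });
}

/** Wraps the callback API in a Promise so callers can use await. */
function parseAsPromise(input) {
  return new Promise((resolve, reject) => {
    fakeParse(input, (error, fields, files) => {
      if (error) {
        reject(error);
        return;
      }
      // Merge fields and files into one object, as in the function above.
      resolve({ ...fields, ...files });
    });
  });
}

const success = parseAsPromise('some request');
const failure = parseAsPromise(null).catch((error) => error.message);

success.then((body) => console.log(body));
failure.then((message) => console.log(message));
```

One thing to be aware of with the spread approach: if a regular field and a file input share the same name, the file entry overwrites the field in the merged object.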

Now, we can examine the request body:

export default defineEventHandler(async (event) => {
  const nodeRequestObject = event.node.req;
  const body = await doSomethingWithNodeRequest(nodeRequestObject);
  console.log(body)
  return { ok: true };
});

We should see an object containing all the form fields and their values, but for each file input, we’ll see an object that represents the uploaded file, and not the file itself. This object contains all kinds of useful information, including its path on disk, name, mimetype, and more.

{
  file-input-name: PersistentFile {
    _events: [Object: null prototype] { error: [Function (anonymous)] },
    _eventsCount: 1,
    _maxListeners: undefined,
    lastModifiedDate: 2023-03-21T22:57:42.332Z,
    filepath: '/tmp/d53a9fd346fcc1122e6746600',
    newFilename: 'd53a9fd346fcc1122e6746600',
    originalFilename: 'file.txt',
    mimetype: 'text/plain',
    hashAlgorithm: false,
    size: 13,
    _writeStream: WriteStream {
      fd: null,
      path: '/tmp/d53a9fd346fcc1122e6746600',
      flags: 'w',
      mode: 438,
      start: undefined,
      pos: undefined,
      bytesWritten: 13,
      _writableState: [WritableState],
      _events: [Object: null prototype],
      _eventsCount: 1,
      _maxListeners: undefined,
      [Symbol(kFs)]: [Object],
      [Symbol(kIsPerformingIO)]: false,
      [Symbol(kCapture)]: false
    },
    hash: null,
    [Symbol(kCapture)]: false
  }
}

You’ll also notice that the newFilename is a hashed value. This is to ensure that if two files are uploaded with the same name, you will not lose data. You can, of course, modify how files are written to disk.
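As a small, hedged example of that kind of customization, the options object below uses option names from my reading of formidable’s README (uploadDir, keepExtensions, maxFileSize). The folder name and the size limit are arbitrary assumptions, and it is worth checking the docs for the version you have installed.

```javascript
const os = require('node:os');
const path = require('node:path');

// Options that could be passed along as formidable(uploadOptions).
const uploadOptions = {
  // Where uploaded files are written; the folder has to exist already.
  uploadDir: path.join(os.tmpdir(), 'uploads'), // hypothetical folder
  // Keep the original file extension on the generated file name.
  keepExtensions: true,
  // Reject any single file larger than 10 MB (arbitrary example limit).
  maxFileSize: 10 * 1024 * 1024,
};

console.log(uploadOptions);
```

A size limit is also a cheap extra safeguard against very large uploads filling up the disk.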

Note that in a standard application, it’s a good idea to store some of this information in a persistent place, like a database, so you can easily find all the files that have been uploaded. But that’s not the point of this post.

Now there’s one more thing I want to fix. I only want to process multipart/form-data requests with formidable. Everything else can be handled by a built-in body parser like the one we saw above.

So I’ll create a “body” variable first, then check the request headers, and assign the value of the body based on the “Content-Type”. I’ll also rename my function to parseMultipartNodeRequest to be more explicit about what it does.

Here’s what the whole thing looks like (note that getRequestHeaders is another built-in Nuxt function):

import formidable from 'formidable';

/**
 * @see https://nuxt.com/docs/guide/concepts/server-engine
 * @see https://github.com/unjs/h3
 */
export default defineEventHandler(async (event) => {
  let body;
  const headers = getRequestHeaders(event);

  if (headers['content-type']?.includes('multipart/form-data')) {
    body = await parseMultipartNodeRequest(event.node.req);
  } else {
    body = await readBody(event);
  }
  console.log(body);

  return { ok: true };
});

/**
 * @param {import('http').IncomingMessage} req
 */
function parseMultipartNodeRequest(req) {
  return new Promise((resolve, reject) => {
    /** @see https://github.com/node-formidable/formidable/ */
    const form = formidable({ multiples: true })
    form.parse(req, (error, fields, files) => {
      if (error) {
        reject(error);
        return;
      }
      resolve({ ...fields, ...files });
    });
  });
}

This way, we have an API that is robust enough to accept multipart/form-data, plain text, or URL-encoded requests.
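The Content-Type check is easy to try in isolation. Here is a minimal sketch of it as a standalone function; isMultipart is a hypothetical helper name, and it assumes lower-cased header names, which is how Node exposes incoming request headers.

```javascript
/**
 * Hypothetical helper: decides whether a request body is multipart/form-data.
 * @param {Record<string, string | undefined>} headers lower-cased header names
 */
function isMultipart(headers) {
  const contentType = headers['content-type'] ?? '';
  // The media type comes before any parameters such as "; boundary=...".
  const mediaType = contentType.split(';')[0].trim().toLowerCase();
  return mediaType === 'multipart/form-data';
}

console.log(
  isMultipart({
    'content-type': 'multipart/form-data; boundary=----WebKitFormBoundary4Ay52hDeKB5x2vXP',
  })
); // true
console.log(isMultipart({ 'content-type': 'application/json' })); // false
console.log(isMultipart({})); // false
```

Comparing only the media type is slightly stricter than a substring check, since it ignores the boundary parameter and does not match values that merely contain the text somewhere else.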

📯📯📯 Finishing up

There’s no emoji rave horn, so those will have to do. We covered kind of a lot, so let’s do a little recap.

When we upload a file using a multipart/form-data request, the browser will send the data one chunk at a time, using a stream. That’s because we can’t put the entire file in the request object at once.

In Node.js, we can listen to the request’s “data” event to work with each chunk of data as it arrives. This gives us access to the request stream.

Although we could capture all of that data and store it in memory, that’s a bad idea because a large file upload could consume all the server’s memory, causing it to crash.

Instead, we can pipe that stream somewhere else, so each chunk is received, processed, then released from memory. One option is to use fs.createWriteStream to create a writable stream that can write to the file system.

Instead of writing our own low-level parser, we should use a tool like formidable. But we need to confirm that the data is coming from a multipart/form-data request. Otherwise, we can use a standard body parser.

We covered a lot of low-level concepts, and landed on a high-level solution. Hopefully, it all made sense and you found this useful.

If you have any questions or if something was confusing, please go ahead and reach out to me. I’m always happy to help.

I’m having a lot of fun working on this series, and I hope you are enjoying it as well. Stick around for the rest of it 😀

- Upload files with HTML

- Upload files with JavaScript

- Receive uploads in Node.js (Nuxt.js)

- Optimize storage costs with Object Storage

- Optimize performance with a CDN

- Upload security & malware protection

Thank you so much for reading. If you liked this article, and want to support me, the best ways to do so are to share it, sign up for my newsletter, and follow me on Twitter.

Firstly revealed on austingil.com.
