
This is the fourth post in a series all about uploading files for the web. In the previous posts, we covered uploading files using just HTML, uploading files using JavaScript, and how to receive file uploads on a Node.js server.

- Upload files with HTML

- Upload files with JavaScript

- Receive uploads in Node.js (Nuxt.js)

- Optimize storage costs with Object Storage

- Optimize performance with a CDN

- Upload security & malware protection

This post is going to take a step back and explore architectural changes to reduce costs when adding file uploads to our applications.

By this point, we should be receiving multipart/form-data in Node.js, parsing the request, capturing the file, and writing that file to the disk on the application server.

There are a couple of issues with this approach.

First, this approach doesn’t work for distributed systems that rely on several different machines. If a user uploads a file, it can be hard (or impossible) to know which machine received the request, and therefore, where the file is saved. This is especially true if you’re using serverless or edge compute.

Secondly, storing uploads on the application server can cause the server to quickly run out of disk space. At that point, we’d have to upgrade our server, which could be much more expensive than other cost-effective solutions.

And that’s where Object Storage comes in.

What is Object Storage

You can think of Object Storage like a folder on a computer. You can put any files (aka “objects”) you want in it, but the folders (aka “buckets”) live within a cloud service provider. You can also access files via URL.

Object Storage provides a couple of benefits:

- It’s a single, central place to store and access all of your uploads.

- It’s designed to be highly available, easily scalable, and super cost-effective.

For example, if you consider shared CPU servers, you could run an application for $5/month and get 25 GB of disk space. If your server starts running out of space, you could upgrade your server to get an additional 25 GB, but that’s going to cost you $7/month more.

Alternatively, you could put that money towards Object Storage and you would get 250 GB for $5/month. So 10 times more storage space for less cost.

Of course, there are other reasons to upgrade your application server. You may need more RAM or CPU, but if we’re talking purely about disk space, Object Storage is a much cheaper solution.
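To make the comparison concrete, here is a small sketch that works out the monthly price per GB from the figures quoted above. The numbers are only the ones used in this post; actual pricing depends on the provider and plan.

```javascript
// Figures quoted in this post (USD per month); real pricing varies by provider.
const serverUpgrade = { extraGb: 25, extraCost: 7 };
const objectStorage = { gb: 250, cost: 5 };

/** Monthly cost per GB for a given capacity and price. */
function costPerGb(gb, cost) {
  return cost / gb;
}

const upgradePerGb = costPerGb(serverUpgrade.extraGb, serverUpgrade.extraCost);
const objectPerGb = costPerGb(objectStorage.gb, objectStorage.cost);

// Compare using integer arithmetic to avoid floating-point noise.
const timesCheaper =
  (serverUpgrade.extraCost * objectStorage.gb) /
  (serverUpgrade.extraGb * objectStorage.cost);

console.log(`Server upgrade: $${upgradePerGb.toFixed(2)} per GB`);
console.log(`Object Storage: $${objectPerGb.toFixed(2)} per GB`);
console.log(`Object Storage is ${timesCheaper}x cheaper per GB`);
```

With these figures, the extra server disk works out to $0.28 per GB against $0.02 per GB for Object Storage.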

With that in mind, the rest of this article will cover connecting an existing Node.js application to an Object Storage provider. We’ll use formidable to parse multipart requests, but configure it to upload files to Object Storage instead of writing to disk.

If you want to follow along, you will need to have an Object Storage bucket set up, as well as the access keys. Any S3-compatible Object Storage provider should work. Today, I’ll be using Akamai’s cloud computing services (formerly Linode). If you want to do the same, here’s a guide that shows you how to get going: https://www.linode.com/docs/products/storage/object-storage/get-started/

And here’s a link to get $100 in free credits for 60 days.

What is S3

Before we start writing code, there’s one more concept that I should explain: S3. S3 stands for “Simple Storage Service”, and it’s an Object Storage product originally developed at AWS.

Along with their product, AWS came up with a standard communication protocol for interacting with their Object Storage solution.

As more companies started offering Object Storage services, they decided to adopt the same S3 communication protocol for their own services, and S3 became a standard.

As a result, we have more options to choose from for Object Storage providers and fewer options to dig through for tooling. We can use the same libraries (maintained by AWS) with other providers. That’s great news because it means the code we write today should work across any S3-compatible service.

The libraries we’ll use today are @aws-sdk/client-s3 and @aws-sdk/lib-storage:

npm install @aws-sdk/client-s3 @aws-sdk/lib-storage

These libraries will help us upload objects into our buckets.

Okay, let’s write some code!

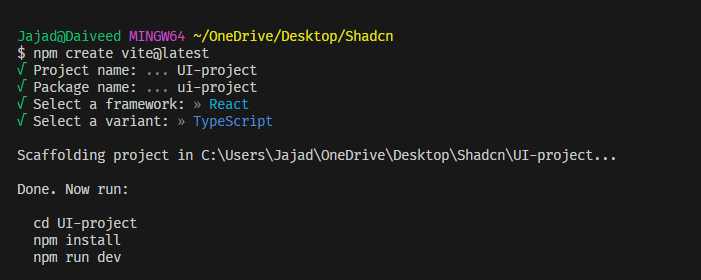

Start with existing Node.js application

We’ll start with an example Nuxt.js event handler that writes files to disk using formidable. It checks if a request contains multipart/form-data and if so, it passes the underlying Node.js request object (aka IncomingMessage) to a custom function, parseMultipartNodeRequest. Since this function uses the Node.js request, it will work in any Node.js environment and with tools like formidable.

import formidable from 'formidable';

/* global defineEventHandler, getRequestHeaders, readBody */

/**
 * @see https://nuxt.com/docs/guide/concepts/server-engine
 * @see https://github.com/unjs/h3
 */
export default defineEventHandler(async (event) => {
  let body;
  const headers = getRequestHeaders(event);

  if (headers['content-type']?.includes('multipart/form-data')) {
    // Multipart requests go to formidable via the raw Node.js request.
    body = await parseMultipartNodeRequest(event.node.req);
  } else {
    body = await readBody(event);
  }
  console.log(body);

  return { ok: true };
});

/**
 * @param {import('http').IncomingMessage} req
 */
function parseMultipartNodeRequest(req) {
  return new Promise((resolve, reject) => {
    const form = formidable({ multiples: true });
    form.parse(req, (error, fields, files) => {
      if (error) {
        reject(error);
        return;
      }
      resolve({ ...fields, ...files });
    });
  });
}

We’re going to modify this code to send the files to an S3 bucket instead of writing them to disk.
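One detail in the handler is easy to miss: the check uses a substring match rather than strict equality, because browsers append a boundary parameter to the header value. The sketch below isolates that check in a hypothetical helper, isMultipartRequest, which is not part of Nuxt, h3, or formidable.

```javascript
/**
 * Hypothetical helper: returns true when a headers object describes a
 * multipart/form-data request.
 * @param {Record<string, string | undefined>} headers
 */
function isMultipartRequest(headers) {
  // The real header carries a boundary parameter, for example:
  // "multipart/form-data; boundary=----WebKitFormBoundaryabc123"
  const contentType = headers['content-type'] ?? '';
  return contentType.toLowerCase().includes('multipart/form-data');
}

console.log(isMultipartRequest({ 'content-type': 'multipart/form-data; boundary=abc' }));
console.log(isMultipartRequest({ 'content-type': 'application/json' }));
console.log(isMultipartRequest({}));
```

Node.js lowercases incoming header names, which is why the lookup uses 'content-type'.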

Set up S3 Client

The first thing we need to do is set up an S3 Client to make the upload requests for us, so we don’t have to write them manually. We’ll import the S3Client constructor from @aws-sdk/client-s3 as well as the Upload command from @aws-sdk/lib-storage. We’ll also import Node’s stream module to use later on.

import stream from 'node:stream';
import { S3Client } from '@aws-sdk/client-s3';
import { Upload } from '@aws-sdk/lib-storage';

Next, we need to configure our client using our S3 bucket endpoint, access key, secret access key, and region. Again, you should already have set up an S3 bucket and know where to find this information. If not, check out this guide ($100 credit).

I like to store this information in environment variables and not hard-code the configuration into the source code. We can access those variables using process.env to use in our application.

const { S3_URL, S3_ACCESS_KEY, S3_SECRET_KEY, S3_REGION } = process.env;

If you’ve never used environment variables, they’re a good place for us to put secret information such as access credentials. You can read more about them here: https://nodejs.dev/en/learn/how-to-read-environment-variables-from-nodejs/
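A missing environment variable tends to surface later as a confusing authentication or network error, so it can help to fail fast at startup. The following is a minimal sketch of that idea; readS3Config is a hypothetical helper and the example values are placeholders, not real credentials.

```javascript
const REQUIRED_KEYS = ['S3_URL', 'S3_ACCESS_KEY', 'S3_SECRET_KEY', 'S3_REGION'];

/**
 * Hypothetical helper: reads the S3 settings from an environment object and
 * throws if any of them is missing or empty.
 * @param {Record<string, string | undefined>} env
 */
function readS3Config(env = process.env) {
  const missing = REQUIRED_KEYS.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  const { S3_URL, S3_ACCESS_KEY, S3_SECRET_KEY, S3_REGION } = env;
  return { S3_URL, S3_ACCESS_KEY, S3_SECRET_KEY, S3_REGION };
}

// Placeholder values for illustration only.
const exampleEnv = {
  S3_URL: 'bucket-name.bucket-region.linodeobjects.com',
  S3_ACCESS_KEY: 'example-access-key',
  S3_SECRET_KEY: 'example-secret-key',
  S3_REGION: 'us-southeast-1',
};

console.log(readS3Config(exampleEnv).S3_REGION);
```

In the application itself, calling readS3Config() with no argument would read from process.env.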

With our variables set up, I can now instantiate the S3 Client we’ll use to communicate with our bucket.

const s3Client = new S3Client({
  endpoint: `https://${S3_URL}`,
  credentials: {
    accessKeyId: S3_ACCESS_KEY,
    secretAccessKey: S3_SECRET_KEY,
  },
  region: S3_REGION,
});

It’s worth pointing out that the endpoint needs to include the HTTPS protocol. In Akamai’s Object Storage dashboard, when you copy the bucket URL, it doesn’t include the protocol (bucket-name.bucket-region.linodeobjects.com). So I just add the prefix here.
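If the value might be stored either with or without the protocol, a small normalizing step avoids ending up with a doubled or missing prefix. This is only a sketch; toEndpoint is a hypothetical helper, not something the AWS SDK provides.

```javascript
/**
 * Hypothetical helper: makes sure a bucket host has a protocol before it is
 * used as the S3 client endpoint.
 * @param {string} hostOrUrl
 */
function toEndpoint(hostOrUrl) {
  const withProtocol = /^https?:\/\//i.test(hostOrUrl)
    ? hostOrUrl
    : `https://${hostOrUrl}`;
  // new URL() throws a TypeError on malformed input, surfacing typos early.
  return new URL(withProtocol).origin;
}

console.log(toEndpoint('bucket-name.bucket-region.linodeobjects.com'));
console.log(toEndpoint('https://bucket-name.bucket-region.linodeobjects.com/'));
```

Both calls produce the same endpoint string, so the client configuration does not depend on how the variable was written.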

With our S3 client configured, we can start using it.

Modify formidable

In our application, we’re passing any multipart Node request into our custom function, parseMultipartNodeRequest. This function returns a Promise and passes the request to formidable, which parses the request, writes files to the disk, and resolves the promise with the form fields data and files data.

function parseMultipartNodeRequest(req) {
  return new Promise((resolve, reject) => {
    const form = formidable({ multiples: true });
    form.parse(req, (error, fields, files) => {
      if (error) {
        reject(error);
        return;
      }
      resolve({ ...fields, ...files });
    });
  });
}

This is the part that needs to change. Instead of processing the request and writing files to disk, we want to pipe file streams to an S3 upload request. So as each file chunk is received, it’s passed through our handler to the S3 upload.

We’ll still return a promise and use formidable to parse the form, but we have to change formidable’s configuration options. We’ll set the fileWriteStreamHandler option to a function called fileWriteStreamHandler that we’ll write shortly.

/** @param {import('formidable').File} file */
function fileWriteStreamHandler(file) {
  // TODO
}

const form = formidable({
  multiples: true,
  fileWriteStreamHandler: fileWriteStreamHandler,
});

Here’s what their documentation says about fileWriteStreamHandler:

options.fileWriteStreamHandler {function} – default null, which by default writes to host machine file system every file parsed; The function should return an instance of a Writable stream that will receive the uploaded file data. With this option, you can have any custom behavior regarding where the uploaded file data will be streamed for. If you are looking to write the file uploaded in other types of cloud storages (AWS S3, Azure blob storage, Google cloud storage) or private file storage, this is the option you’re looking for. When this option is defined the default behavior of writing the file in the host machine file system is lost.

As formidable parses each chunk of data from the request, it will pipe that chunk into the Writable stream that’s returned from this function. So our fileWriteStreamHandler function is where the magic happens.

Before we write the code, let’s understand some things:

- This function must return a Writable stream to write each upload chunk to.

- It also needs to pipe each chunk of data to an S3 Object Storage.

- We can use the Upload command from @aws-sdk/lib-storage to create the request.

- The request body can be a stream, but it must be a Readable stream, not a Writable stream.

- A PassThrough stream can be used as both a Readable and Writable stream.

- Each request formidable will parse may contain multiple files, so we may need to track multiple S3 upload requests.

- fileWriteStreamHandler receives one parameter of type formidable.File interface with properties like originalFilename, size, mimetype, and more.
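The dual nature of a PassThrough stream is the piece that makes this design work, so here is a minimal sketch of it in isolation. It is written as a standalone CommonJS script so it can be run directly with node; inside the Nuxt project the require line would be an import. No formidable or S3 code is involved, and the chunk contents are made up.

```javascript
const { PassThrough } = require('node:stream');

const body = new PassThrough();

// Writable side: this is the role formidable plays when it writes file chunks.
body.write('first chunk, ');
body.write('second chunk');
body.end();

// Readable side: this is the role the S3 upload plays when it reads the body.
const received = body.read(); // Buffer holding everything written so far
console.log(received.toString());
```

Whatever goes in through write() comes out unchanged through the readable side, which is why the same object can be handed to formidable as a destination and to the upload as a source.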

OK, now let’s write the code. We’ll start with an Array to store and track all the S3 upload requests outside the scope of fileWriteStreamHandler. Inside fileWriteStreamHandler, we’ll create the PassThrough stream that will serve as both the Readable body of the S3 upload and the Writable return value of this function. We’ll create the Upload request using the S3 libraries, and tell it our bucket name, the object key (which can include folders), the object Content-Type, the Access Control Level for this object, and the PassThrough stream as the request body. We’ll instantiate the request using Upload.done() and add the returned Promise to our tracking Array. We might want to add the response Location property to the file object when the upload completes, so we can use that information later on. Lastly, we’ll return the PassThrough stream from this function:

/** @type {Promise<any>[]} */
const s3Uploads = [];

/** @param {import('formidable').File} file */
function fileWriteStreamHandler(file) {
  // Writable for formidable, Readable for the S3 upload.
  const body = new stream.PassThrough();
  const upload = new Upload({
    client: s3Client,
    params: {
      Bucket: 'austins-bucket',
      Key: `files/${file.originalFilename}`,
      ContentType: file.mimetype,
      ACL: 'public-read',
      Body: body,
    },
  });
  // Start the upload and remember its Promise so we can wait for it later.
  const uploadRequest = upload.done().then((response) => {
    file.location = response.Location;
  });
  s3Uploads.push(uploadRequest);
  return body;
}

A couple of things to note:

- Key is the name and location where the object will exist. It can include folders that will be created if they do not currently exist. If a file exists with the same name and location, it will be overwritten (fine for me today). You can avoid collisions by using hashed names or timestamps.

- ContentType is not required, but it’s helpful to include. It allows browsers to create the downloaded response appropriately based on Content-Type.

- ACL is also optional, but by default, every object is private. If you want people to be able to access the files via URL (like an <img> element), you’ll want to make it public.

- Although @aws-sdk/client-s3 supports uploads, you need @aws-sdk/lib-storage to support Readable streams.

- You can read more about the parameters on NPM: https://www.npmjs.com/package/@aws-sdk/client-s3
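To illustrate the point about avoiding collisions, here is one possible way to build a key from a timestamp plus a random identifier. It is a sketch written as a standalone CommonJS script; createObjectKey is a hypothetical helper, and the "files" folder simply mirrors the example above.

```javascript
const { randomUUID } = require('node:crypto');
const path = require('node:path');

/**
 * Hypothetical helper: builds an object key that is unlikely to collide.
 * @param {string | null} originalFilename
 * @param {string} [folder]
 */
function createObjectKey(originalFilename, folder = 'files') {
  // Keep only the extension from the user-supplied name.
  const extension = path.extname(originalFilename ?? '').toLowerCase();
  // The timestamp keeps keys roughly sortable; the UUID makes them unique.
  return `${folder}/${Date.now()}-${randomUUID()}${extension}`;
}

console.log(createObjectKey('nugget.jpg'));
```

Dropping the user-supplied base name also sidesteps odd characters in filenames, at the cost of less readable keys. If the original name matters, it could be kept in a database alongside the key.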

This way, formidable becomes the plumbing that connects the incoming client request to the S3 upload request.

Now there’s just one more change to make. We’re keeping track of all the upload requests, but we aren’t waiting for them to finish.

We can fix that by modifying the parseMultipartNodeRequest function. It should continue to use formidable to parse the client request, but instead of resolving the promise immediately, we can use Promise.all to wait until all the upload requests have resolved.

The whole function looks like this:

/**
 * @param {import('http').IncomingMessage} req
 */
function parseMultipartNodeRequest(req) {
  return new Promise((resolve, reject) => {
    /** @type {Promise<any>[]} */
    const s3Uploads = [];

    /** @param {import('formidable').File} file */
    function fileWriteStreamHandler(file) {
      // PassThrough comes from the stream module imported earlier.
      const body = new stream.PassThrough();
      const upload = new Upload({
        client: s3Client,
        params: {
          Bucket: 'austins-bucket',
          Key: `files/${file.originalFilename}`,
          ContentType: file.mimetype,
          ACL: 'public-read',
          Body: body,
        },
      });
      const uploadRequest = upload.done().then((response) => {
        file.location = response.Location;
      });
      s3Uploads.push(uploadRequest);
      return body;
    }

    const form = formidable({
      multiples: true,
      fileWriteStreamHandler: fileWriteStreamHandler,
    });

    form.parse(req, (error, fields, files) => {
      if (error) {
        reject(error);
        return;
      }
      // Wait for every S3 upload to finish before resolving.
      Promise.all(s3Uploads)
        .then(() => {
          resolve({ ...fields, ...files });
        })
        .catch(reject);
    });
  });
}

The resolved files value will also contain the location property we included, pointing to the Object Storage URL.
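It may help to see how Promise.all behaves when one of the tracked uploads fails, since that decides what the caller of parseMultipartNodeRequest receives. The sketch below uses a made-up fakeUpload function in place of real S3 requests.

```javascript
/** Stand-in for an S3 upload: resolves or rejects after a short delay. */
function fakeUpload(name, shouldFail = false) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (shouldFail) reject(new Error(`Upload failed: ${name}`));
      else resolve(name);
    }, 10);
  });
}

async function demo() {
  // All uploads succeed: Promise.all resolves once every one has finished.
  const done = await Promise.all([fakeUpload('a.jpg'), fakeUpload('b.jpg')]);
  console.log('uploaded:', done);

  // One upload fails: Promise.all rejects with that error.
  try {
    await Promise.all([fakeUpload('a.jpg'), fakeUpload('b.jpg', true)]);
  } catch (error) {
    console.log('rejected:', error.message);
  }

  // Promise.allSettled is an alternative when per-file results are wanted.
  const settled = await Promise.allSettled([
    fakeUpload('a.jpg'),
    fakeUpload('b.jpg', true),
  ]);
  return settled.map((result) => result.status);
}

const statuses = demo();
```

With Promise.all, a single failed upload rejects the whole request, which matches the .catch(reject) in the function above. Promise.allSettled would be the choice if partial success should be reported instead.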

Walk through the whole flow

We covered a lot, and I think it’s a good idea to review how everything works together. If we look back at the original event handler, we can see that any multipart/form-data request will be received and passed to our parseMultipartNodeRequest function. The resolved value from this function will be logged to the console:

export default defineEventHandler(async (tournament) => {

let frame;

const headers = getRequestHeaders(tournament);

if (headers['content-type']?.contains('multipart/form-data')) {

frame = wait for parseMultipartNodeRequest(tournament.node.req);

} else {

frame = wait for readBody(tournament);

}

console.log(frame);

go back { good enough: true };

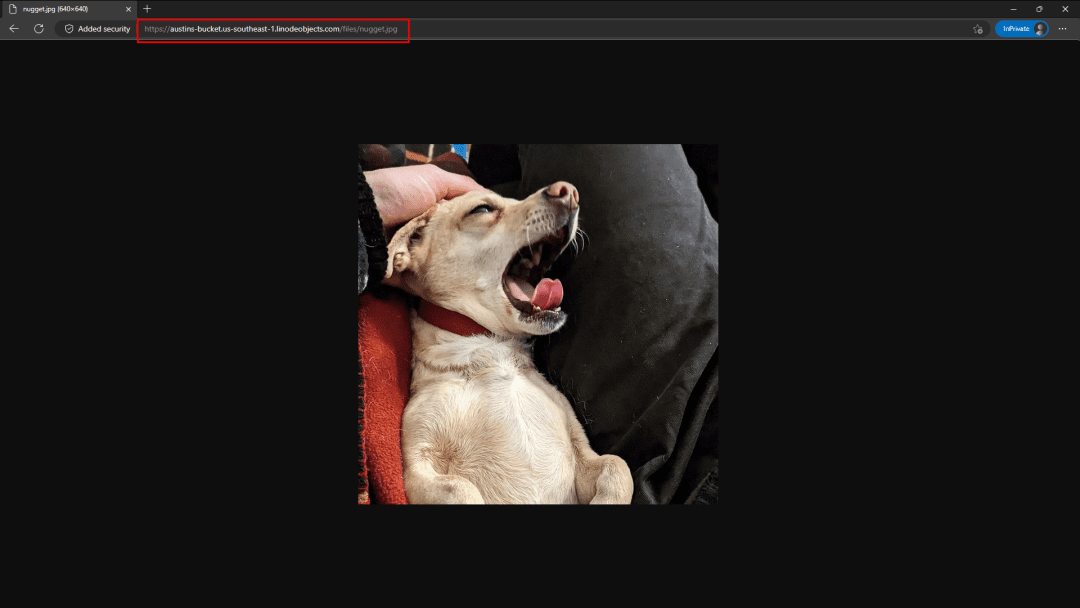

});With that during thoughts, let’s spoil down what occurs if I wish to add a lovable photograph of Nugget making a large ol’ yawn.

- For the browser to send the file as binary data, it needs to make a multipart/form-data request with an HTML form or with JavaScript.

- Our Nuxt.js application receives the multipart/form-data and passes the underlying Node.js request object to our custom parseMultipartNodeRequest function.

- parseMultipartNodeRequest returns a Promise that will eventually be resolved with the data. Inside that Promise, we instantiate the formidable library and pass the request object to formidable for parsing.

- As formidable is parsing the request, when it comes across a file, it writes the chunks of data from the file stream to the PassThrough stream that’s returned from the fileWriteStreamHandler function.

- In the fileWriteStreamHandler we also set up a request to upload the file to our S3-compatible bucket, and we use the same PassThrough stream as the body of the request. So as formidable writes chunks of file data to the PassThrough stream, they are also read by the S3 upload request.

- Once formidable has finished parsing the request, all the chunks of data from the file streams are taken care of, and we wait for the list of S3 requests to finish uploading.

- After all that is done, we resolve the Promise from parseMultipartNodeRequest with the modified data from formidable. The body variable is assigned to the resolved value.

- The data representing the fields and files (not the files themselves) are logged to the console.
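The steps above can be rehearsed without a bucket or credentials. In the sketch below, fakeS3Upload is a made-up stand-in for the real Upload request, the demo function plays the part of formidable, and the bucket host is a placeholder. It is written as a standalone CommonJS script so it can be run directly with node.

```javascript
const { PassThrough } = require('node:stream');

/** Stand-in for the S3 upload: drains the stream and reports what it got. */
async function fakeS3Upload({ Key, Body }) {
  let bytes = 0;
  for await (const chunk of Body) {
    bytes += chunk.length;
  }
  return { Location: `https://example-bucket.invalid/${Key}`, bytes };
}

const uploads = [];

/** Same shape as the handler in this post, minus the real S3 client. */
function fileWriteStreamHandler(file) {
  const body = new PassThrough();
  const upload = fakeS3Upload({
    Key: `files/${file.originalFilename}`,
    Body: body,
  }).then((response) => {
    file.location = response.Location;
    file.size = response.bytes;
  });
  uploads.push(upload);
  return body;
}

async function demo() {
  const file = { originalFilename: 'nugget.jpg' };
  // Play the part of formidable: write chunks into the returned stream.
  const writable = fileWriteStreamHandler(file);
  writable.write(Buffer.from('fake '));
  writable.write(Buffer.from('image bytes'));
  writable.end();
  // Wait for every tracked upload before using the results.
  await Promise.all(uploads);
  return file;
}

const result = demo();
result.then((file) => console.log(file));
```

The file object only gains its location once the fake upload has consumed the whole stream, which is the same ordering the real handler relies on.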

So now, if our original upload request contained a single field called “file1” with the photo of Nugget, we might see something like this:

{
  file1: {
    _events: [Object: null prototype] { error: [Function (anonymous)] },
    _eventsCount: 1,
    _maxListeners: undefined,
    lastModifiedDate: null,
    filepath: '/tmp/93374f13c6cab7a01f7cb5100',
    newFilename: '93374f13c6cab7a01f7cb5100',
    originalFilename: 'nugget.jpg',
    mimetype: 'image/jpeg',
    hashAlgorithm: false,
    createFileWriteStream: [Function: fileWriteStreamHandler],
    size: 82298,
    _writeStream: PassThrough {
      _readableState: [ReadableState],
      _events: [Object: null prototype],
      _eventsCount: 6,
      _maxListeners: undefined,
      _writableState: [WritableState],
      allowHalfOpen: true,
      [Symbol(kCapture)]: false,
      [Symbol(kCallback)]: null
    },
    hash: null,
    location: 'https://austins-bucket.us-southeast-1.linodeobjects.com/files/nugget.jpg',
    [Symbol(kCapture)]: false
  }
}

It looks very similar to the object formidable returns when it writes directly to disk, but this time it has an extra property, location, which is the Object Storage URL for our uploaded file.

Throw that sucker to your browser and what do you get?

That’s proper! A lovable photograph of Nugget making a large ol’ yawn 🥰

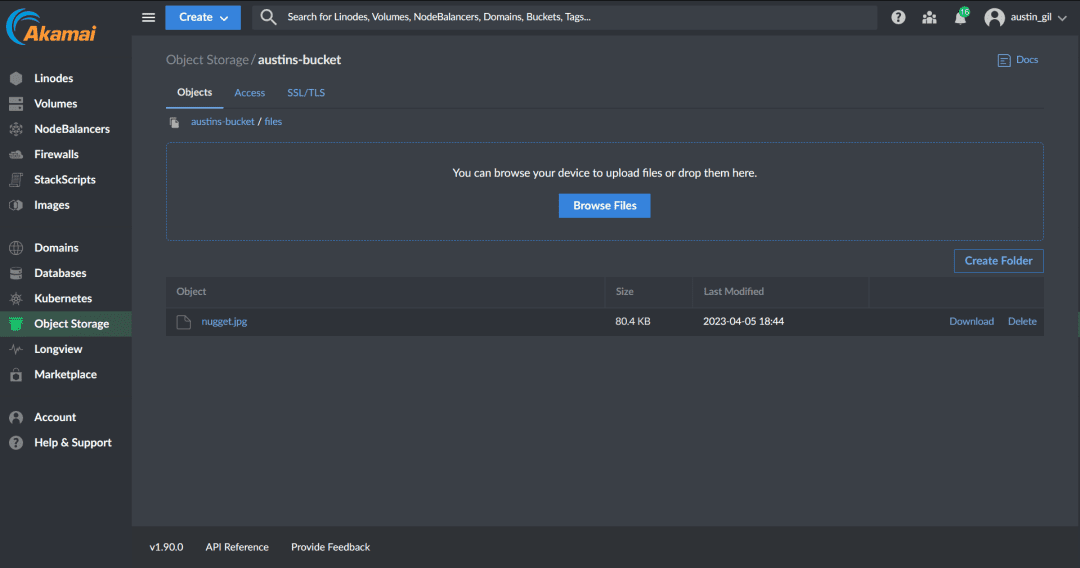

I will additionally cross to my bucket in my Object Garage dashboard and notice that I now have a folder known as “recordsdata” containing a record known as “nugget.jpg”.

Caveats

I’d be remiss if I didn’t mention the following. (In fact, I was remiss because I didn’t mention it until after someone pointed it out in the comments 🤣)

Streaming uploads through your backend to Object Storage is not the only way to upload files to S3. You can also use signed URLs. Signed URLs are basically the same URL in the bucket where the file will live, but they include an authentication signature that can be used by anyone to upload a file, as long as the signature has not expired (usually quite soon).

Here’s how the flow generally works:

- Frontend makes a request to the backend for a signed URL.

- Backend makes an authenticated request to the Object Storage provider for a signed URL with a given expiry.

- Object Storage provider provides a signed URL to the backend.

- Backend returns the signed URL to the frontend.

- Frontend uploads the file directly to Object Storage thanks to the signed URL.

- Optional: Frontend may make another request to the backend if you need to update a database that the upload completed.
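From the frontend’s point of view, the flow above might look roughly like the sketch below. Everything specific in it is an assumption made for illustration: the /api/signed-url and /api/upload-complete paths, the { url } response shape, and the uploadWithSignedUrl name are all hypothetical. The fetch function is passed in as a parameter so the sketch can be exercised without a real backend.

```javascript
/**
 * Sketch of the frontend side of the signed-URL flow.
 * The endpoint paths and JSON shape are hypothetical.
 * @param {Blob} file
 * @param {string} filename
 * @param {typeof fetch} [fetchImpl]
 */
async function uploadWithSignedUrl(file, filename, fetchImpl = fetch) {
  // Ask our own backend for a signed URL (the first four steps).
  const signResponse = await fetchImpl(
    `/api/signed-url?filename=${encodeURIComponent(filename)}`
  );
  if (!signResponse.ok) throw new Error('Could not get a signed URL');
  const { url } = await signResponse.json();

  // Upload directly to Object Storage using the signed URL.
  const uploadResponse = await fetchImpl(url, {
    method: 'PUT',
    headers: { 'Content-Type': file.type || 'application/octet-stream' },
    body: file,
  });
  if (!uploadResponse.ok) throw new Error('Upload failed');

  // Optional: tell the backend the upload completed.
  await fetchImpl('/api/upload-complete', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ filename }),
  });

  // The object URL is the signed URL without its signature query string.
  return url.split('?')[0];
}
```

Note that three requests are needed where the streaming approach needs one, which is the extra choreography this flow brings with it.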

This flow requires a little more choreography than Frontend -> Backend -> Object Storage, but it has some benefits.

- It moves work off your servers, which can reduce load and improve performance.

- It moves the file upload bandwidth off your server. If you pay for ingress and have several large file uploads all the time, this could add up.

It also comes with its own costs.

- You have much less control over what users can upload. This might include malware.

- If you need to perform functions on the files like optimizing, you can’t do that with signed URLs.

- The complex flow makes it much harder to build an upload flow with progressive enhancement in mind.

As with most things in web development, there isn’t one right solution. It will largely depend on your use case. I like going through my backend, so I have more control over the files and I can simplify the frontend.

I wanted to share this streaming option, largely because there is hardly any content out there about streaming. Most content uses signed URLs (maybe I’m missing something). If you’d like to learn more about using signed URLs, here is some documentation and here’s a handy tutorial by Mary Gathoni.

Closing thoughts

Okay, we covered a lot today. I hope it all made sense. If not, feel free to reach out to me with questions. Also, reach out and let me know if you got it working in your own application.

I’d love to hear from you because using Object Storage is an excellent architectural decision if you need a single, cost-effective place to store files.

In the next posts, we’ll work on making our applications deliver files faster, as well as protecting our applications from malicious uploads.


I hope you stick around.

Thank you so much for reading. If you liked this article, and want to support me, the best ways to do so are to share it, sign up for my newsletter, and follow me on Twitter.

Originally published on austingil.com.
